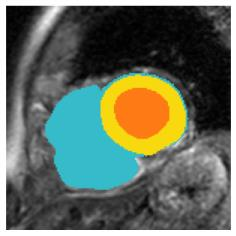

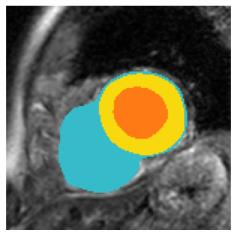

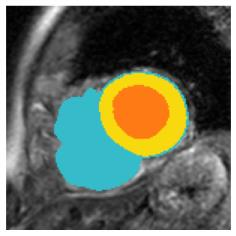

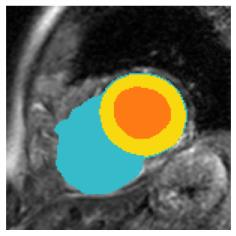

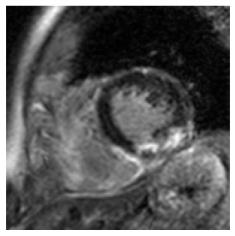

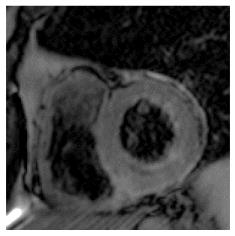

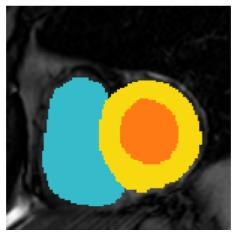

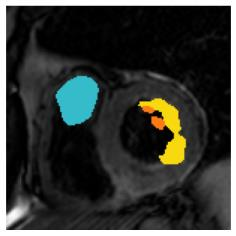

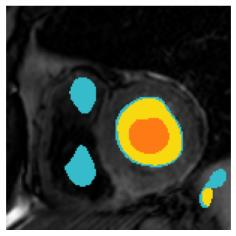

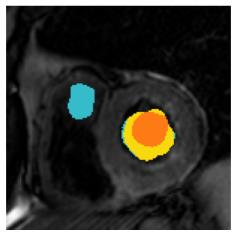

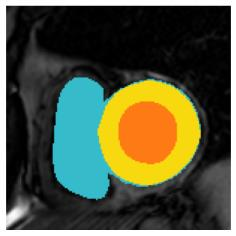

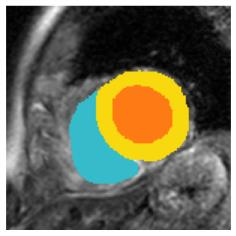

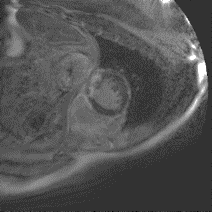

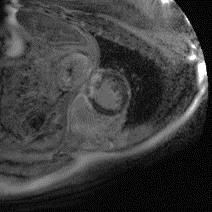

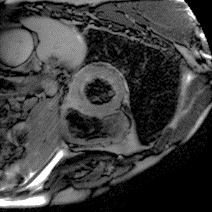

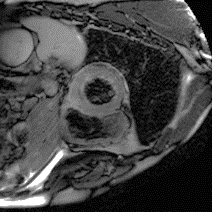

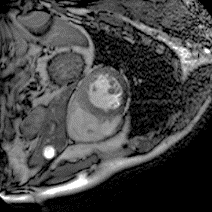

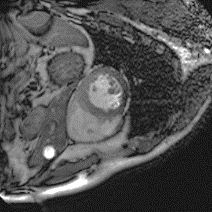

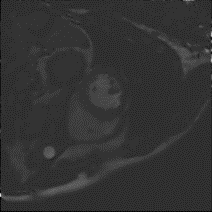

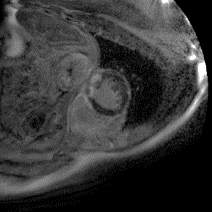

Although supervised deep learning has achieved promising performance in medical image segmentation, many methods do not generalize well to unseen data, which limits their real-world applicability. To address this problem, we propose a deep learning-based Bayesian framework that jointly models image and label statistics and uses the domain-irrelevant contour of a medical image for segmentation. Specifically, we first decompose an image into two components, a contour and a basis. Then, we model the expected label as a variable that depends only on the contour. Finally, we develop a variational Bayesian framework to infer the posterior distributions of these variables, namely the contour, the basis, and the label. The framework is implemented with neural networks and is therefore referred to as deep Bayesian segmentation (BayeSeg). Results on the task of cross-sequence cardiac MRI segmentation show that our method sets a new state of the art for model generalizability. In particular, the BayeSeg model trained on LGE MRI generalized well to T2 images and outperformed other models by large margins, i.e., by over 0.47 in terms of average Dice. Our code is available at https://zmiclab.github.io/projects.html.
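For readers unfamiliar with the metric quoted above, the following is a minimal sketch of how an average Dice score between a predicted and a reference label map is commonly computed. It is an illustration only, not the evaluation code of this work: the function names `dice_score` and `mean_dice`, the convention of returning 1.0 when both masks are empty, and the toy label maps are all assumptions made for this example.

```python
import numpy as np


def dice_score(pred_mask, target_mask):
    """Dice coefficient 2|A∩B| / (|A| + |B|) for two binary masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    target = np.asarray(target_mask, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    denom = pred.sum() + target.sum()
    if denom == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0
    return float(2.0 * intersection / denom)


def mean_dice(pred_labels, target_labels, class_ids):
    """Average the per-class Dice over the given foreground class ids."""
    pred = np.asarray(pred_labels)
    target = np.asarray(target_labels)
    scores = [dice_score(pred == c, target == c) for c in class_ids]
    return float(np.mean(scores))


# Toy 2x3 label maps (hypothetical data): 0 = background, 1 and 2 = structures.
pred = np.array([[0, 1, 1],
                 [0, 2, 2]])
target = np.array([[0, 1, 0],
                   [0, 2, 2]])

per_class = {c: dice_score(pred == c, target == c) for c in (1, 2)}
average = mean_dice(pred, target, class_ids=(1, 2))
print(per_class, average)
```

Dice ranges from 0 (no overlap) to 1 (perfect overlap), so a gap of 0.47 in average Dice corresponds to a very large difference in overlap with the reference segmentation.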
