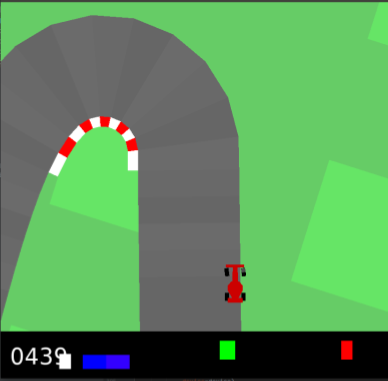

Reinforcement learning methods for continuous control tasks have evolved in recent years generating a family of policy gradient methods that rely primarily on a Gaussian distribution for modeling a stochastic policy. However, the Gaussian distribution has an infinite support, whereas real world applications usually have a bounded action space. This dissonance causes an estimation bias that can be eliminated if the Beta distribution is used for the policy instead, as it presents a finite support. In this work, we investigate how this Beta policy performs when it is trained by the Proximal Policy Optimization (PPO) algorithm on two continuous control tasks from OpenAI gym. For both tasks, the Beta policy is superior to the Gaussian policy in terms of agent's final expected reward, also showing more stability and faster convergence of the training process. For the CarRacing environment with high-dimensional image input, the agent's success rate was improved by 63% over the Gaussian policy.

翻译:连续控制任务强化学习方法近年来已经演变成一套政策梯度方法,主要依靠高斯分配法来模拟随机政策。 但是,高斯分配法有无限的支持, 而真实世界应用通常有约束的动作空间。 这种不协调导致一种估计偏差, 如果将贝塔分配法用于政策, 则可以消除这种偏差, 因为它是一种有限的支持。 在这项工作中, 我们调查贝塔政策在接受Proximal政策优化算法( PPPPO) 的训练后如何在OpenAI 健身房的两项连续控制任务上执行。 对于这两项任务, Beta 政策在代理最后预期的奖励方面都优于高斯政策, 同时显示培训过程的稳定性和更快的趋同。 对于具有高维图像投入的 CarRacing 环境, 代理人的成功率比高斯政策提高了63% 。