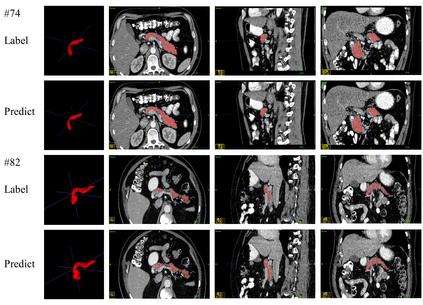

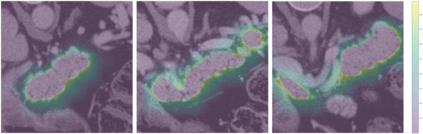

Transformer-based neural networks have achieved promising performance on many biomedical image segmentation tasks, owing to the better global information modeling provided by the self-attention mechanism. However, most methods are still designed for 2D medical images and ignore essential 3D volumetric information. The main challenge for 3D transformer-based segmentation methods is the quadratic complexity introduced by the self-attention mechanism \cite{vaswani2017attention}. In this paper, we propose a novel encoder-decoder transformer architecture with linear complexity for 3D medical image segmentation. Furthermore, we introduce a dynamic token concept that further reduces the number of tokens used in the self-attention computation. Taking advantage of the global information modeling, we also provide uncertainty maps from different hierarchical stages. We evaluate this method on multiple challenging CT pancreas segmentation datasets. Our results show that the proposed 3D transformer-based segmentor provides promising segmentation performance and accurate uncertainty quantification using a single annotation. Code is available at https://github.com/freshman97/LinTransUNet.
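The abstract does not spell out how its linear-complexity attention or its dynamic tokens work, so the sketch below is illustrative only and is not the LinTransUNet implementation. It contrasts standard scaled dot-product softmax attention, whose cost is quadratic in the number of tokens N, with a swapped-in technique: kernelized linear attention using the elu(x)+1 feature map of Katharopoulos et al. (2020). For a 3D CT volume split into patches, N grows with depth × height × width, which is why the N × N term matters. The helper `select_tokens` is a hypothetical top-k stand-in for token reduction, not the paper's dynamic token mechanism; all function names here are assumptions.

```python
import math
import random


def softmax_attention(Q, K, V):
    """Scaled dot-product softmax attention (quadratic baseline).

    Every query is scored against every key (N x N scores in total),
    so time is O(N^2 * d) for N tokens of dimension d.
    """
    d = len(Q[0])
    out = []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in K]
        m = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        z = sum(weights)
        out.append([sum(w * v[j] for w, v in zip(weights, V)) / z
                    for j in range(len(V[0]))])
    return out


def feature_map(x):
    """elu(x) + 1, a strictly positive feature map (Katharopoulos et al., 2020)."""
    return [xi + 1.0 if xi > 0 else math.exp(xi) for xi in x]


def kernel_attention_quadratic(Q, K, V):
    """Kernelized attention evaluated naively: still O(N^2) pairwise weights."""
    phi_k = [feature_map(k) for k in K]
    out = []
    for q in Q:
        phi_q = feature_map(q)
        w = [sum(a * b for a, b in zip(phi_q, pk)) for pk in phi_k]
        z = sum(w)
        out.append([sum(wn * v[j] for wn, v in zip(w, V)) / z
                    for j in range(len(V[0]))])
    return out


def linear_attention(Q, K, V):
    """Same result as kernel_attention_quadratic, but keys and values are first
    summarized into S = sum_n phi(k_n) v_n^T (d x d_v) and z = sum_n phi(k_n),
    so the cost is O(N * d * d_v): linear in the number of tokens N."""
    phi_k = [feature_map(k) for k in K]
    d, dv = len(phi_k[0]), len(V[0])
    S = [[0.0] * dv for _ in range(d)]
    z = [0.0] * d
    for pk, v in zip(phi_k, V):  # one pass over the N keys/values
        for i in range(d):
            z[i] += pk[i]
            for j in range(dv):
                S[i][j] += pk[i] * v[j]
    out = []
    for q in Q:  # one pass over the N queries
        phi_q = feature_map(q)
        denom = sum(phi_q[i] * z[i] for i in range(d))
        out.append([sum(phi_q[i] * S[i][j] for i in range(d)) / denom
                    for j in range(dv)])
    return out


def select_tokens(tokens, scores, k):
    """Hypothetical token reduction: keep the k highest-scoring tokens,
    preserving their original order, before attention is computed."""
    keep = sorted(range(len(tokens)), key=lambda i: scores[i], reverse=True)[:k]
    return [tokens[i] for i in sorted(keep)]


if __name__ == "__main__":
    random.seed(0)
    N, d, dv = 64, 8, 4  # e.g. a 4 x 4 x 4 grid of 3D patch tokens
    Q = [[random.gauss(0, 1) for _ in range(d)] for _ in range(N)]
    K = [[random.gauss(0, 1) for _ in range(d)] for _ in range(N)]
    V = [[random.gauss(0, 1) for _ in range(dv)] for _ in range(N)]
    lin = linear_attention(Q, K, V)
    ref = kernel_attention_quadratic(Q, K, V)
    err = max(abs(a - b) for ra, rb in zip(lin, ref) for a, b in zip(ra, rb))
    print(f"max |linear - quadratic kernel| = {err:.2e}")
    print(len(softmax_attention(Q, K, V)), "softmax outputs of dim", dv)
```

Running the module compares the two kernelized variants on random tokens; they agree up to floating-point round-off, but only `linear_attention` avoids computing pairwise weights. The saving comes from the associativity of matrix products, (φ(Q)φ(K)^T)V = φ(Q)(φ(K)^T V); the softmax does not factorize this way, which is why a kernel feature map is substituted for it.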

翻译:以变压器为基础的神经网络在许多生物医学图像分割任务上已经超过了有希望的绩效,原因是从自我注意机制中建立了更好的全球信息模型。然而,大多数方法仍然为2D医学图像设计,而忽略了基本的 3D 量信息。基于 3D 的变压器分割方法面临的主要挑战是自我注意机制\ cite{vaswani2017tenice} 引入的二次复杂情况。我们在本文件中提议为3D医学图像分割设计一个新型变压器结构,使用具有线性复杂性的编码-脱相机风格结构。此外,我们新引入了一个动态符号概念,以进一步减少用于自我注意计算的数字。我们利用全球信息模型,提供了不同等级阶段的不确定性地图。我们评估了这种具有多重挑战性的CT 板块分割数据集的方法。我们有希望的结果显示,我们新型的3D变压器分解器分解器能够提供充满希望的高度可行的分解功能和准确的不确定性量化,使用单一注释。代码可查到 https://github.com/stimman97/LintransyUN。