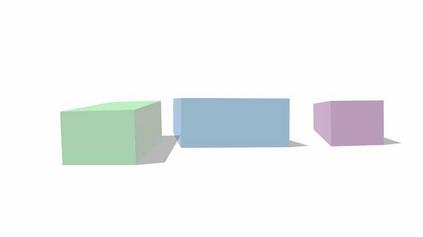

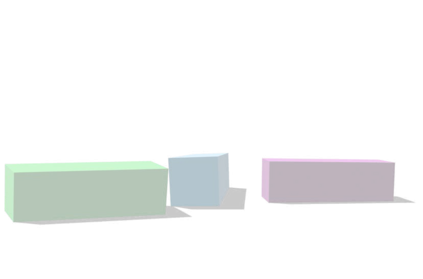

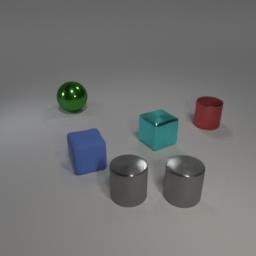

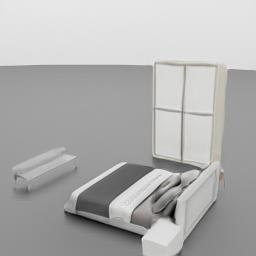

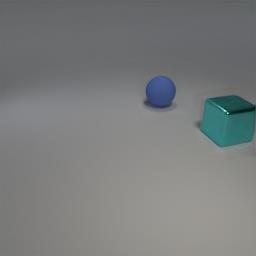

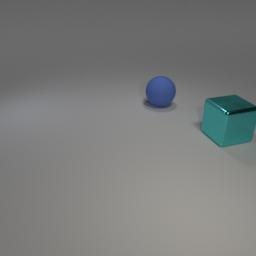

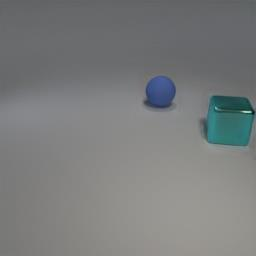

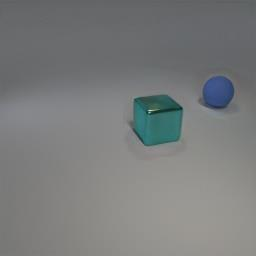

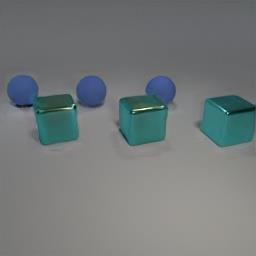

Existing 3D-aware image synthesis approaches mainly focus on generating a single canonical object and show limited capacity in composing a complex scene containing a variety of objects. This work presents DisCoScene: a 3D-aware generative model for high-quality and controllable scene synthesis. The key ingredient of our method is a very abstract object-level representation (i.e., 3D bounding boxes without semantic annotation) as the scene layout prior, which is simple to obtain, general enough to describe various scene contents, and yet informative enough to disentangle objects and background. Moreover, it serves as an intuitive user control for scene editing. Based on such a prior, the proposed model spatially disentangles the whole scene into object-centric generative radiance fields by learning on only 2D images with global-local discrimination. Our model retains the generation fidelity and editing flexibility of individual objects while being able to efficiently compose objects and the background into a complete scene. We demonstrate state-of-the-art performance on many scene datasets, including the challenging Waymo outdoor dataset. Project page: https://snap-research.github.io/discoscene/
