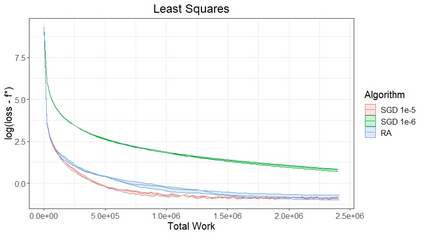

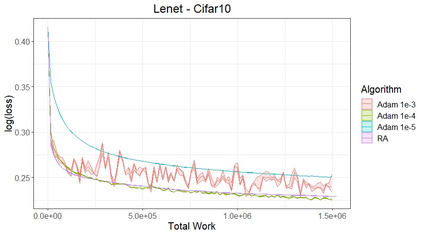

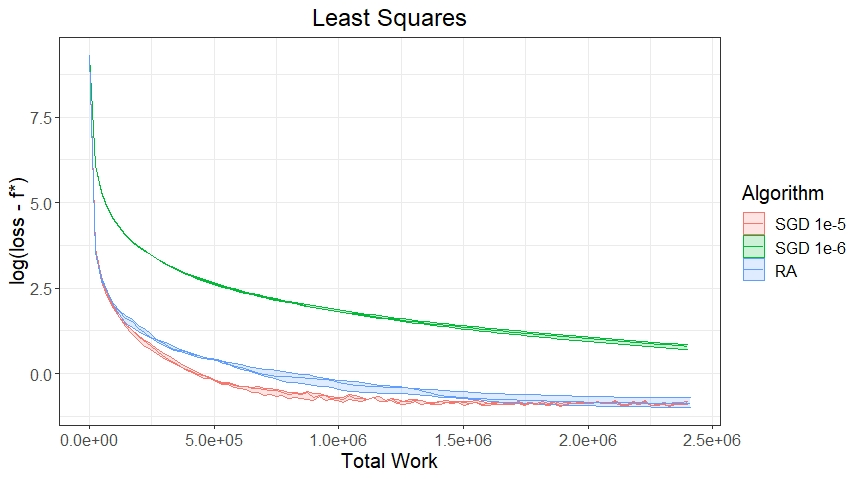

Stochastic Gradient (SG) is the de facto iterative technique for solving stochastic optimization (SO) problems with a smooth (non-convex) objective $f$ and a stochastic first-order oracle. SG's attractiveness is due in part to its simplicity: it executes a single step along the negative subsampled gradient direction to update the incumbent iterate. In this paper, we question SG's choice of executing a single step as opposed to multiple steps between subsample updates. Our investigation leads naturally to generalizing SG into Retrospective Approximation (RA) where, during each iteration, a "deterministic solver" executes possibly multiple steps on a subsampled deterministic problem and stops when further solving is deemed unnecessary from the standpoint of statistical efficiency. RA thus makes rigorous what is appealing for implementation: during each iteration, "plug in" a solver, e.g., L-BFGS line search or Newton-CG, as is, and solve only to the extent necessary. We develop a complete theory using the relative error of the observed gradients as the principal object, demonstrating that almost sure and $L_1$ consistency of RA are preserved under especially weak conditions when sample sizes are increased at appropriate rates. We also characterize the iteration and oracle complexity of RA (for linear and sub-linear solvers), and identify a practical termination criterion leading to optimal complexity rates. To subsume non-convex $f$, we present a certain "random central limit theorem" that incorporates the effect of curvature across all first-order critical points, demonstrating that the asymptotic behavior is described by a certain mixture of normals. The message from our numerical experiments is that the ability of RA to incorporate existing second-order deterministic solvers in a strategic manner might be important from the standpoint of dispensing with hyper-parameter tuning.
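
To make the outer/inner structure described above concrete, here is a minimal sketch of an RA-style loop on a toy problem, $f(x) = \mathbb{E}\,[\tfrac{1}{2}\|x - \xi\|^2]$ with $\xi \sim N(\mu, I)$, whose minimizer is $\mu$. Everything specific in it is an assumption made for illustration and is not taken from the paper: the function name `retrospective_approximation`, the geometric sample-size growth, the inner tolerance `tol_const / sqrt(m)` used as a stand-in for "stop when further solving is statistically unnecessary", and the use of plain gradient descent as the plugged-in deterministic solver.

```python
import numpy as np


def retrospective_approximation(x0, mu, outer_iters=8, m0=16, growth=2.0,
                                tol_const=1.0, step=0.5, max_inner=100, seed=0):
    """Toy RA-style loop for f(x) = E[0.5 * ||x - xi||^2], xi ~ N(mu, I).

    All parameter names and default values are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float).copy()
    m = m0
    history = []  # (sample size, inner steps taken, distance to true minimizer)

    for _ in range(outer_iters):
        # Draw a fresh subsample; this fixes a deterministic sample-average problem
        # F_m(x) = (1/m) * sum_i 0.5 * ||x - xi_i||^2 with gradient x - mean(xi).
        xi = rng.normal(loc=mu, scale=1.0, size=(m, x.size))
        xi_bar = xi.mean(axis=0)

        # Assumed stopping rule: solve only down to the order of the sampling
        # error, which shrinks like 1 / sqrt(m).
        tol = tol_const / np.sqrt(m)

        # Plugged-in deterministic solver: gradient descent, possibly many steps.
        grad = x - xi_bar
        inner = 0
        while np.linalg.norm(grad) > tol and inner < max_inner:
            x = x - step * grad
            grad = x - xi_bar
            inner += 1

        history.append((m, inner, float(np.linalg.norm(x - mu))))

        # Assumed geometric growth of the sample size between outer iterations.
        m = int(np.ceil(growth * m))

    return x, history


if __name__ == "__main__":
    mu_true = np.array([1.0, -2.0])
    x_final, hist = retrospective_approximation(np.zeros(2), mu_true)
    for m, inner, err in hist:
        print(f"m={m:5d}  inner_steps={inner:3d}  error={err:.4f}")
```

In this sketch the inner solver is warm-started from the previous outer iterate, so later outer iterations tend to need only a few inner steps even though the tolerance tightens. Swapping the inner loop for another deterministic method (for instance a quasi-Newton routine) would leave the outer structure unchanged, which is the "plug in a solver" idea the abstract refers to.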
