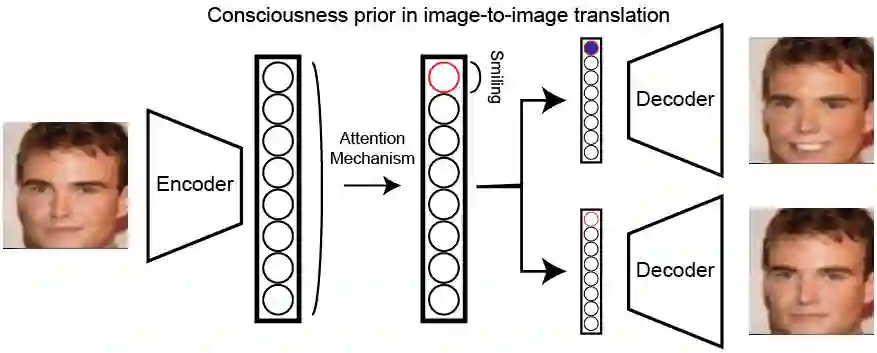

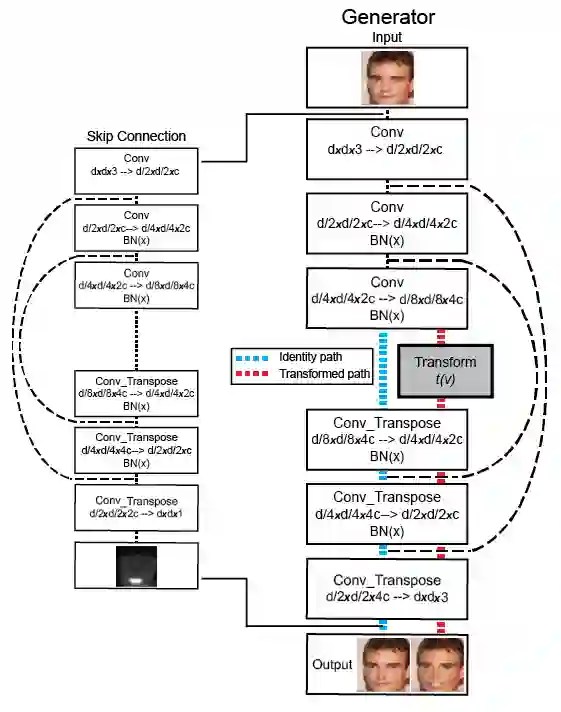

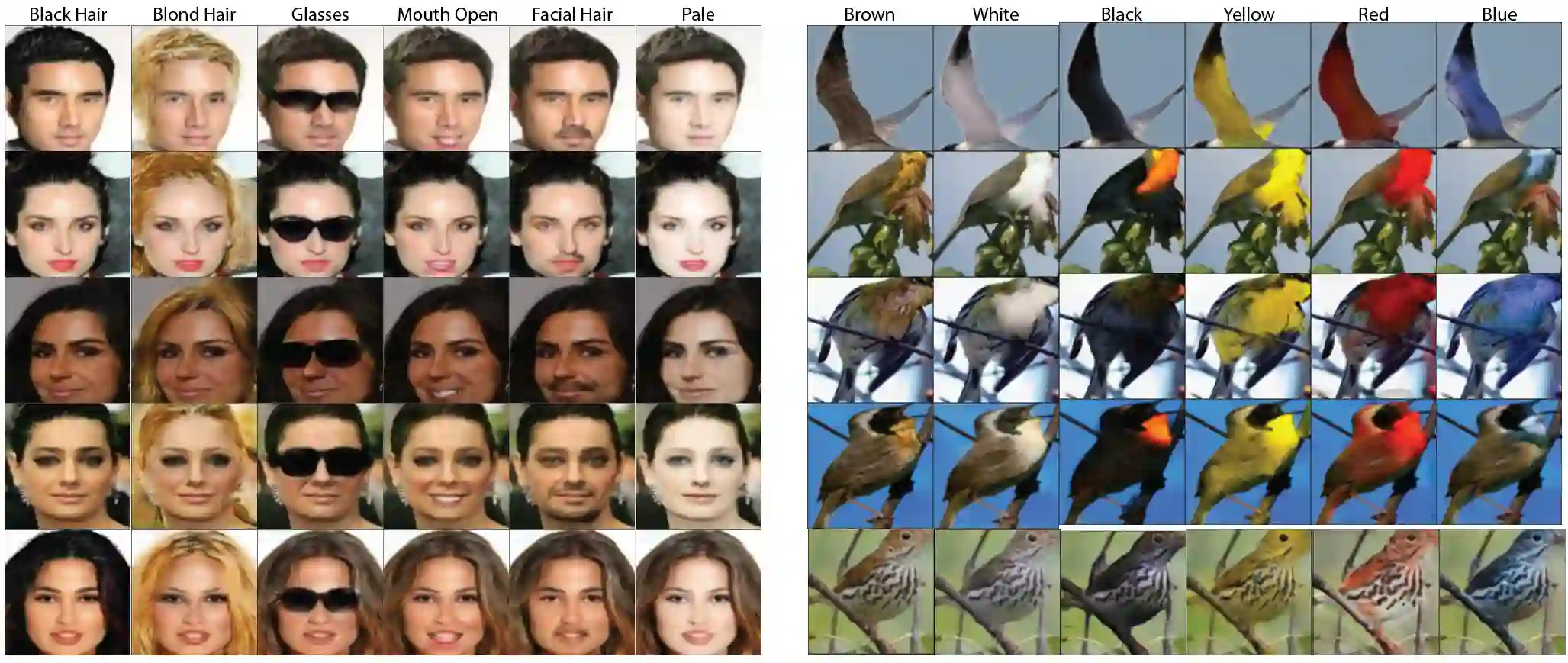

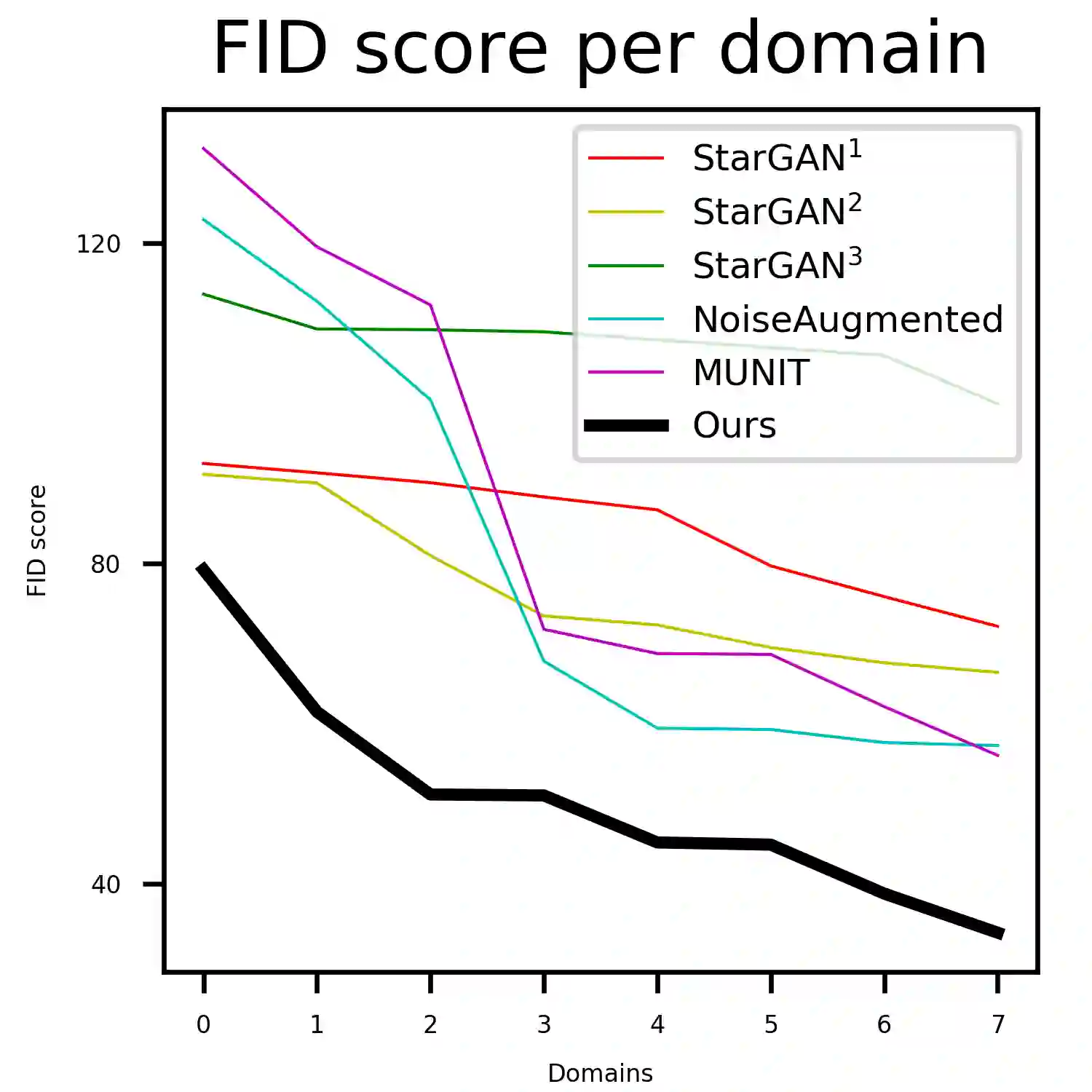

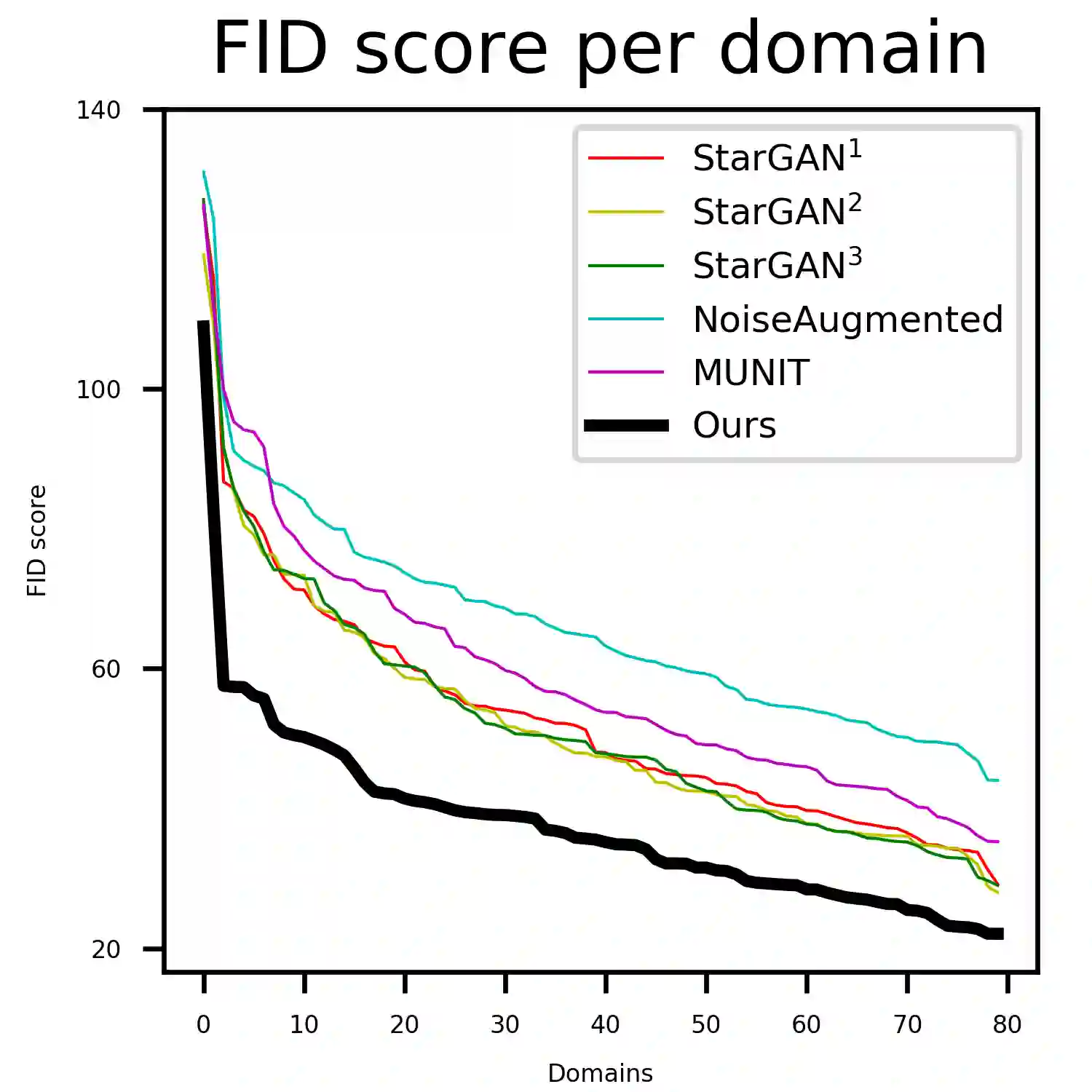

Unsupervised image-to-image translation consists of learning a pair of mappings between two domains without known pairwise correspondences between points. The current convention is to approach this task with cycle-consistent GANs: a discriminator encourages the generator to change the image to match the target domain, while the generator is trained to be invertible by a second mapping. While ending up with paired inverse functions may be a good end result, enforcing this restriction at all times during training can hinder effective modeling. We propose an alternative approach that directly restricts the generator to performing a simple sparse transformation in a latent layer, motivated by recent work from cognitive neuroscience suggesting an architectural prior on representations corresponding to consciousness. Our biologically motivated approach leads to representations more amenable to transformation by disentangling high-level abstract concepts in the latent space. We demonstrate that image-to-image domain translation across many different domains can be learned more effectively with our architecturally constrained, simple transformation than with previous unconstrained architectures that rely on a cycle-consistency loss.
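
To make the contrast in the abstract concrete, the following is a minimal NumPy sketch, not the authors' implementation. It shows (a) a cycle-consistency penalty that measures how well a second mapping inverts the first, and (b) a translation that edits only a few coordinates of a latent code. The function names, the L1 form of the penalty, and the top-k mask used to enforce sparsity are all assumptions made for illustration; the abstract does not specify how sparsity is imposed.

```python
import numpy as np


def cycle_consistency_loss(x, g_ab, g_ba):
    """Mean absolute error between x and its round trip g_ba(g_ab(x)).

    g_ab and g_ba are hypothetical stand-ins for the two domain mappings.
    """
    return float(np.mean(np.abs(g_ba(g_ab(x)) - x)))


def sparse_latent_translate(x, encode, decode, delta, k):
    """Translate x by shifting only k coordinates of its latent code.

    Assumption: sparsity is imposed by keeping the k largest-magnitude
    entries of the 1-D shift vector `delta` and zeroing the rest.
    """
    z = encode(x)
    mask = np.zeros_like(delta)
    keep = np.argsort(np.abs(delta))[-k:]  # indices of the k largest |delta|
    mask[keep] = 1.0
    return decode(z + mask * delta)


# Toy usage: linear "generators" that are exact inverses of each other.
x = np.array([1.0, -2.0, 0.5, 4.0])
loss = cycle_consistency_loss(x, lambda v: v * 2.0, lambda v: v / 2.0)

# Toy usage: identity encoder/decoder, shift restricted to 2 latent coordinates.
delta = np.array([0.1, -3.0, 0.5, 2.0])
translated = sparse_latent_translate(
    np.zeros(4), lambda v: v, lambda v: v, delta, k=2
)
```

In this toy setup the round trip is exact, so the cycle penalty vanishes, and the sparse translation changes only the two latent coordinates with the largest shift. In a real model the encoder, decoder, and shift would be learned networks and parameters.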
