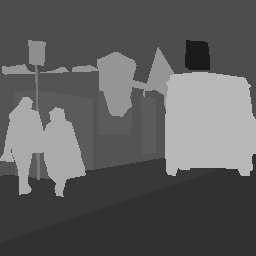

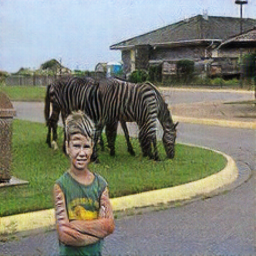

Unpaired image-to-image translation is the problem of mapping an image in the source domain to one in the target domain, without requiring corresponding image pairs. To ensure that the translated images are realistic, recent works, such as Cycle-GAN, require this mapping to be invertible. While this requirement yields promising results when the domains are unimodal, its performance is unpredictable in a multi-modal scenario, such as an image segmentation task. This is because invertibility does not necessarily enforce semantic correctness. To this end, we present a semantically-consistent GAN framework, dubbed Sem-GAN, in which the semantics are defined by the class identities of image segments in the source domain, as produced by a semantic segmentation algorithm. Our proposed framework includes consistency constraints on the translation task that, together with the GAN loss and the cycle constraints, ensure that translated images inherit the appearance of the target domain while (approximately) maintaining their identities from the source domain. We present experiments on several image-to-image translation tasks and demonstrate that Sem-GAN improves the quality of the translated images significantly, sometimes by more than 20% on the FCN score. Further, we show that semantic segmentation models trained with synthetic images translated via Sem-GAN lead to significantly better segmentation results than other variants.
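The abstract does not give the exact form of the loss, so the following is only a minimal sketch of how a semantic-consistency term might be combined with the GAN loss and the cycle constraint. It assumes the consistency term is a per-pixel cross-entropy between a segmenter's prediction on the translated image and the source image's class labels. The function names and the weights `lambda_cyc` and `lambda_sem` are hypothetical and are not taken from the paper.

```python
import numpy as np


def softmax(logits, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def semantic_consistency_loss(seg_logits_translated, source_labels):
    """Mean per-pixel cross-entropy (assumed form of the consistency term).

    seg_logits_translated: (H, W, C) segmenter logits on the translated image.
    source_labels:         (H, W) integer class ids from the source image.
    """
    probs = softmax(seg_logits_translated, axis=-1)
    h, w = source_labels.shape
    rows = np.arange(h)[:, None]
    cols = np.arange(w)[None, :]
    # Probability the segmenter assigns to the source class at each pixel.
    picked = probs[rows, cols, source_labels]
    return float(-np.log(picked + 1e-12).mean())


def cycle_loss(x, x_reconstructed):
    """L1 reconstruction error after a round trip source -> target -> source."""
    return float(np.abs(x - x_reconstructed).mean())


def total_generator_loss(gan_loss, cyc, sem, lambda_cyc=10.0, lambda_sem=1.0):
    """Weighted sum of the three terms; the weights are illustrative assumptions."""
    return gan_loss + lambda_cyc * cyc + lambda_sem * sem
```

In this sketch, the consistency term is small only when the segmenter still recognizes the source classes in the translated image, which is the property that invertibility alone does not guarantee.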
