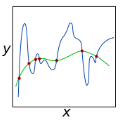

Parameter regularization and parameter allocation methods are effective at overcoming catastrophic forgetting in lifelong learning. However, they treat all tasks in a sequence uniformly and ignore differences in the learning difficulty of different tasks. As a result, parameter regularization methods suffer significant forgetting when learning a new task that is very different from the learned tasks, and parameter allocation methods incur unnecessary parameter overhead when learning simple tasks. In this paper, we propose Parameter Allocation & Regularization (PAR), which adaptively selects an appropriate strategy for each task, either parameter allocation or parameter regularization, based on the task's learning difficulty. A task is easy for a model that has already learned tasks related to it, and hard otherwise. To measure task relatedness using only the features of the new task, we propose a divergence estimation method based on the Nearest-Prototype distance. Moreover, we propose a time-efficient, relatedness-aware, sampling-based architecture search strategy to reduce the parameter overhead of allocation. Experimental results on multiple benchmarks demonstrate that, compared with state-of-the-art (SOTA) methods, our method is scalable and significantly reduces the model's redundancy while improving its performance. Further qualitative analysis indicates that PAR obtains reasonable task relatedness.
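The abstract does not give the estimator's formula. What follows is only a minimal sketch, under our own assumptions, of how a nearest-prototype relatedness score could drive the choice between regularization and allocation. It assumes that each previously learned task has its own feature extractor (an "expert") and that relatedness is scored from the new task's features as produced by each expert. The own-prototype versus nearest-other-prototype distance ratio is a stand-in divergence we chose for illustration. The function names, the ratio, and the `threshold` parameter are hypothetical and are not PAR's actual estimator or decision rule.

```python
# Illustrative sketch only: NOT PAR's published estimator or selection rule.
import math
from collections import defaultdict
from typing import Dict, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def class_prototypes(features: List[Vector], labels: List[int]) -> Dict[int, List[float]]:
    """Prototype of each class = element-wise mean of that class's feature vectors."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = defaultdict(int)
    for f, y in zip(features, labels):
        acc = sums.setdefault(y, [0.0] * len(f))
        sums[y] = [s + x for s, x in zip(acc, f)]
        counts[y] += 1
    return {y: [s / counts[y] for s in acc] for y, acc in sums.items()}


def nearest_prototype_divergence(features: List[Vector], labels: List[int]) -> float:
    """Hypothetical divergence proxy in [0, 1]; lower suggests a more related task.

    For each sample, compare its distance to its own class prototype with its
    distance to the nearest prototype of any other class. Features that already
    separate the new classes (small own distance, large other distance) score low.
    """
    protos = class_prototypes(features, labels)
    if len(protos) < 2:
        raise ValueError("need at least two classes in the new task")
    total = 0.0
    for f, y in zip(features, labels):
        d_own = euclidean(f, protos[y])
        d_other = min(euclidean(f, p) for c, p in protos.items() if c != y)
        denom = d_own + d_other
        total += d_own / denom if denom > 0 else 0.0
    return total / len(features)


def choose_strategy(divergences: Dict[str, float], threshold: float) -> Tuple[str, Optional[str]]:
    """Hypothetical rule: regularize the most related expert if close enough, else allocate."""
    best = min(divergences, key=divergences.get)
    if divergences[best] <= threshold:
        return "regularize", best
    return "allocate", None


# Toy usage: features of the same 4 new-task samples (labels 0, 0, 1, 1) as produced
# by two hypothetical experts trained on earlier tasks.
labels = [0, 0, 1, 1]
feats_a = [[0.0, 0.0], [0.0, 0.2], [5.0, 5.0], [5.0, 5.2]]  # classes well separated
feats_b = [[0.0, 0.0], [1.0, 1.0], [0.2, 0.2], [1.2, 1.2]]  # classes entangled
scores = {
    "expert_A": nearest_prototype_divergence(feats_a, labels),
    "expert_B": nearest_prototype_divergence(feats_b, labels),
}
print(choose_strategy(scores, threshold=0.1))  # → ('regularize', 'expert_A')
```

A distance ratio is used instead of a raw distance so that the score does not depend on the scale of each expert's feature space. With a stricter `threshold` (for example `0.01`), the same scores would lead to allocating new parameters instead. PAR's real criterion may differ from this sketch.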
