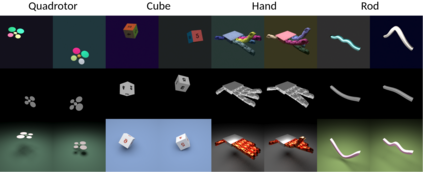

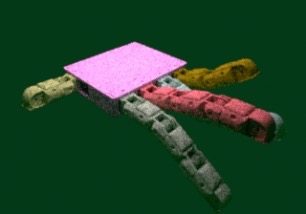

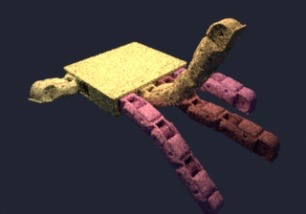

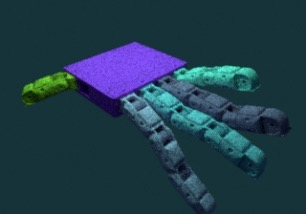

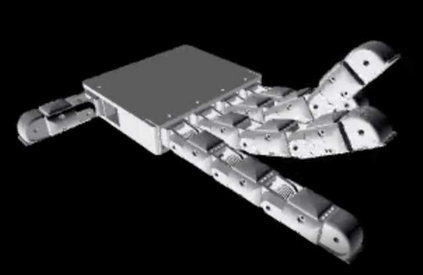

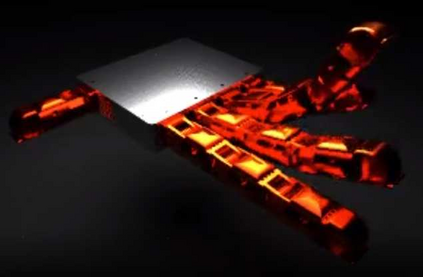

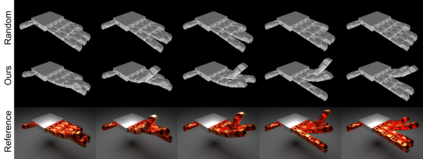

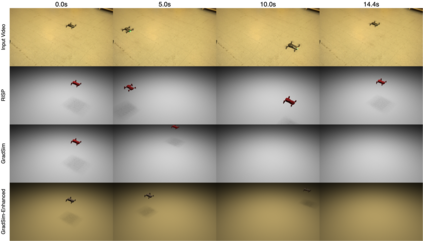

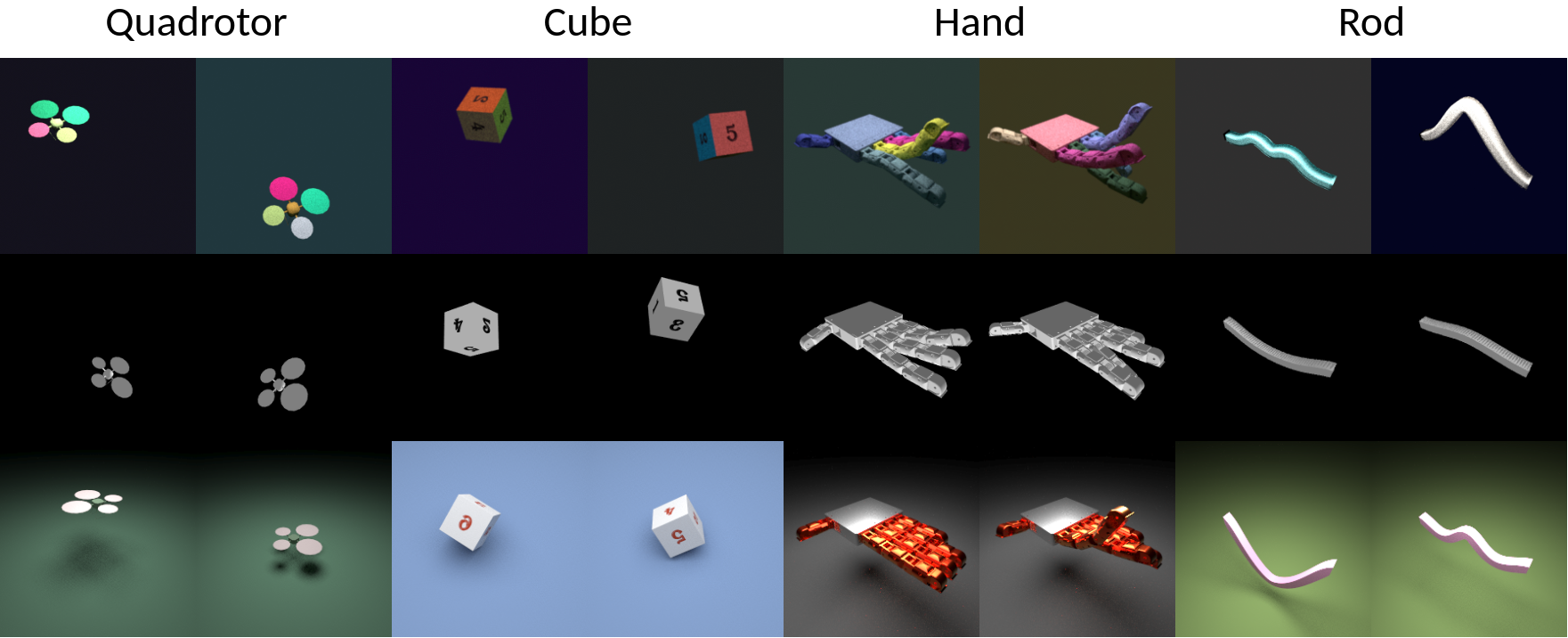

This work considers identifying parameters characterizing a physical system's dynamic motion directly from a video whose rendering configurations are inaccessible. Existing solutions require massive training data or lack generalizability to unknown rendering configurations. We propose a novel approach that marries domain randomization and differentiable rendering gradients to address this problem. Our core idea is to train a rendering-invariant state-prediction (RISP) network that transforms image differences into state differences independent of rendering configurations, e.g., lighting, shadows, or material reflectance. To train this predictor, we formulate a new loss on rendering variances using gradients from differentiable rendering. Moreover, we present an efficient, second-order method to compute the gradients of this loss, allowing it to be integrated seamlessly into modern deep learning frameworks. We evaluate our method in rigid-body and deformable-body simulation environments using four tasks: state estimation, system identification, imitation learning, and visuomotor control. We further demonstrate the efficacy of our approach on a real-world example: inferring the state and action sequences of a quadrotor from a video of its motion sequences. Compared with existing methods, our approach achieves significantly lower reconstruction errors and generalizes better across unknown rendering configurations.
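As a rough illustration of what a loss on rendering variance might look like, the toy sketch below trains a linear state predictor with an extra penalty on how strongly its output reacts to a rendering parameter. Everything in it is a hypothetical assumption made for illustration and not the paper's implementation: the two-pixel `render` function, its analytic derivative `render_grad_psi` (standing in for gradients from a differentiable renderer), the linear `predict` function (standing in for the RISP network), the scalar rendering parameter `psi`, and the penalty weight `lam`. In a real network the gradient of the penalty with respect to the weights involves second-order derivatives; in this linear toy case it can be written in closed form.

```python
import random


def render(state, psi):
    """Hypothetical toy renderer: a 2-pixel image that depends on the
    physical state and on a rendering parameter psi (e.g., light intensity)."""
    return [state + psi, state + 2.0 * psi]


def render_grad_psi(state, psi):
    """Analytic d(image)/d(psi) of the toy renderer above. A differentiable
    renderer would supply this quantity in a real pipeline."""
    return [1.0, 2.0]


def predict(w, image):
    """Linear state predictor (assumed stand-in for a state-prediction network)."""
    return w[0] * image[0] + w[1] * image[1]


def train(lam, psi_max=0.0, steps=10000, lr=0.05, seed=0):
    """Stochastic gradient descent on
        loss = (prediction - state)^2 + lam * (d prediction / d psi)^2.
    psi is drawn from [0, psi_max]; psi_max > 0 adds domain randomization,
    psi_max = 0 trains on a single rendering configuration."""
    rng = random.Random(seed)
    w = [0.0, 0.0]
    for _ in range(steps):
        state = rng.uniform(-1.0, 1.0)
        psi = rng.uniform(0.0, psi_max)
        image = render(state, psi)
        jac = render_grad_psi(state, psi)
        err = predict(w, image) - state
        # Chain rule: for a linear predictor, d(prediction)/d(psi) = w . jac.
        sens = predict(w, jac)
        for i in range(2):
            grad_i = 2.0 * err * image[i] + 2.0 * lam * sens * jac[i]
            w[i] -= lr * grad_i
    return w


if __name__ == "__main__":
    unseen_psi = 5.0  # rendering configuration never seen during training
    true_state = 0.3
    for lam in (0.0, 1.0):
        w = train(lam)
        estimate = predict(w, render(true_state, unseen_psi))
        print(f"lam={lam}: weights={w}, estimate at unseen psi={estimate}")
```

In this toy setting, training with `lam=0.0` on a single rendering configuration fits the training images but leaves the prediction sensitive to `psi`, whereas `lam=1.0` should push the weights toward a combination of pixels in which the `psi` contribution cancels. The same idea extends to nonlinear predictors by letting an automatic differentiation framework compute both the sensitivity term and its gradient with respect to the weights.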
