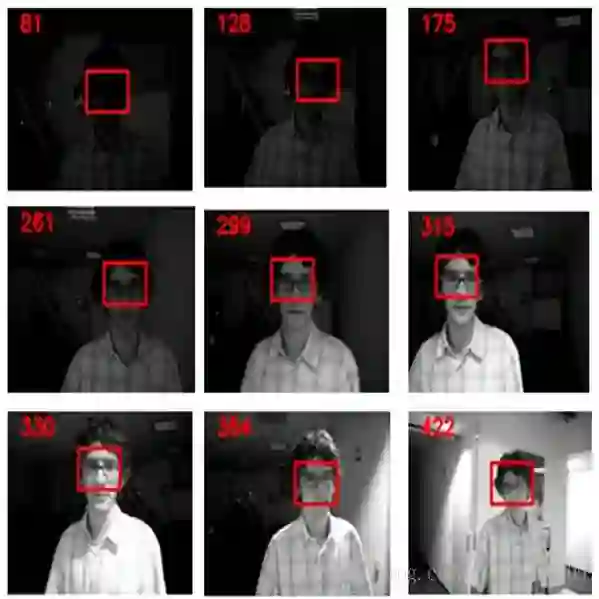

Modern deep convolutional neural networks (CNNs) for image classification and object detection are often trained offline on large, static datasets. Some applications, however, require training in real time on live video streams with a human in the loop. We refer to this class of problem as time-ordered online training (ToOT). Such problems require considering not only the quantity of incoming training data but also the human effort needed to annotate and use it. We demonstrate and evaluate a system tailored to training an object detector on a live video stream with minimal input from a human operator. We show that bounding-box annotations can be obtained from weakly supervised single-point clicks through interactive segmentation. Furthermore, by exploiting the time-ordered nature of the video stream through object tracking, we can increase the average training benefit of human interactions by a factor of 3 to 4.
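
The abstract does not say exactly how a segmentation mask produced from a single click is turned into a bounding box. One common approach, assumed here rather than taken from the paper, is to use the tight extent of the mask's foreground pixels. The minimal sketch below illustrates only that step; the function name `mask_to_box` and the inclusive `(x_min, y_min, x_max, y_max)` box convention are our own, hypothetical choices.

```python
from typing import List, Optional, Tuple

# Hypothetical convention: (x_min, y_min, x_max, y_max), inclusive pixel coordinates.
Box = Tuple[int, int, int, int]


def mask_to_box(mask: List[List[int]]) -> Optional[Box]:
    """Return the tight bounding box around all non-zero pixels of a binary mask.

    Assumption: the interactive-segmentation step yields a binary mask (rows of
    0/1 values) for the clicked object. Returns None if the mask is empty,
    e.g. when segmentation found no object around the click.
    """
    xs: List[int] = []
    ys: List[int] = []
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:  # foreground pixel
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (min(xs), min(ys), max(xs), max(ys))


if __name__ == "__main__":
    example_mask = [
        [0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 0],
    ]
    print(mask_to_box(example_mask))  # (1, 1, 2, 2)
```

In a tracking-based pipeline like the one the abstract describes, a box obtained this way on one frame could then seed a tracker whose outputs on later frames serve as additional training labels; how the paper's system does this is not specified here.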
