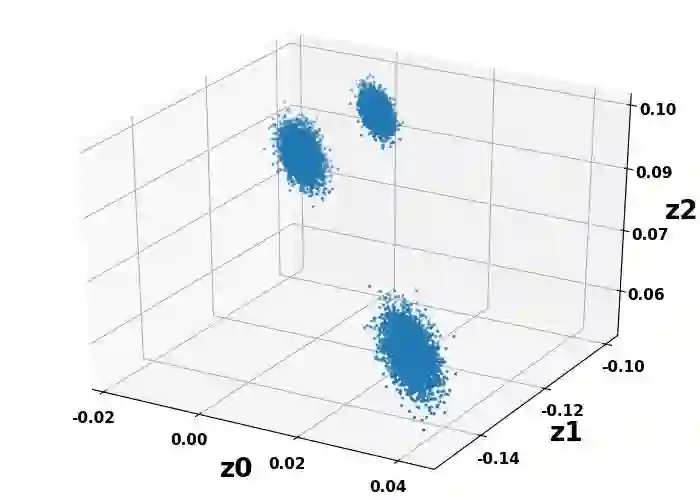

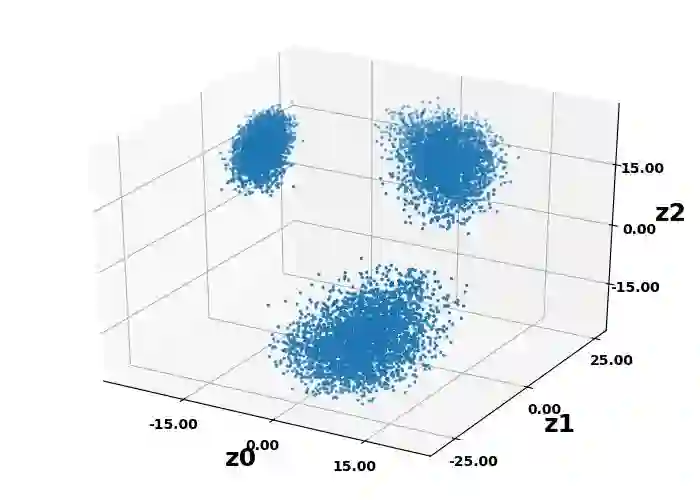

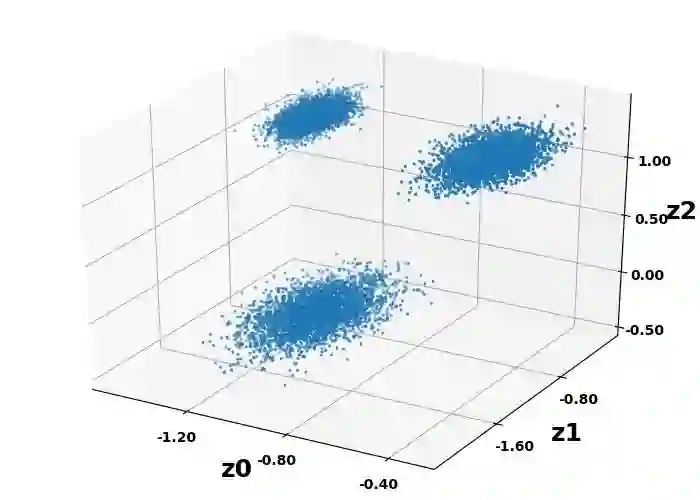

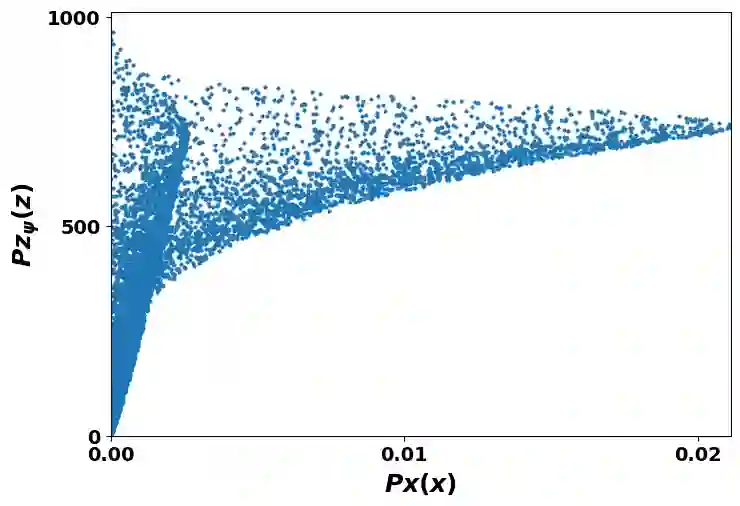

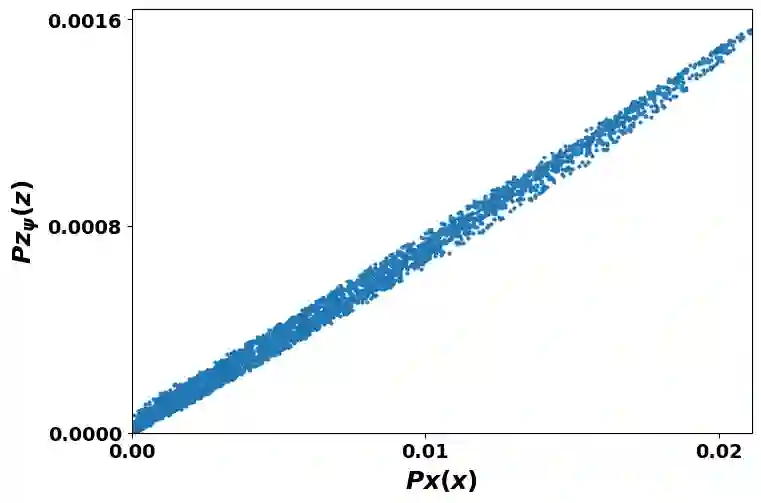

In the generative-model approach to machine learning, it is essential both to acquire an accurate probabilistic model and to compress the dimensionality of the data so that it is easy to handle. However, in conventional deep-autoencoder-based generative models such as the VAE, the probability in real space cannot be obtained correctly from the probability in latent space, because the scaling between the two spaces is not controlled. This has also been an obstacle to quantifying the impact of variations in the latent variables on the data. In this paper, we propose the Rate-Distortion Optimization guided autoencoder, in which the Jacobian matrix from real space to latent space is orthonormal. We show theoretically and experimentally that (i) the probability distribution of the latent space obtained by this model is proportional to the probability distribution of the real space, because the Jacobian between the two spaces is constant; and (ii) our model behaves as a non-linear PCA, in which the energy of the acquired latent space is concentrated in several principal components and the influence of each component can be evaluated quantitatively. Furthermore, to verify its usefulness in practical applications, we evaluate its performance in unsupervised anomaly detection, where it outperforms current state-of-the-art methods.
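
To make claim (i) concrete, the following minimal sketch illustrates the change-of-variables effect in one dimension. It is not the proposed autoencoder: `isometric` and `warped` are hypothetical stand-in encoders chosen for illustration, and the data are assumed to follow a standard normal distribution. The same probability mass is measured over a small interval in data space and over its image in latent space, so the ratio of the two densities shows how the mapping rescales probability.

```python
import math


def std_normal_cdf(x):
    """CDF of the standard normal, used here as the assumed data distribution."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def isometric(x):
    """Hypothetical encoder with |dz/dx| = 1 everywhere (constant Jacobian)."""
    return 2.0 - x


def warped(x):
    """Hypothetical encoder with dz/dx = 1 + 3x^2, which varies with x."""
    return x + x ** 3


def density_ratio(f, x, dx=1e-4):
    """Return (latent density) / (data density) at x for the map z = f(x).

    The mass in [x, x + dx] is the same in both spaces; only the length of
    the interval it occupies changes under f.
    """
    mass = std_normal_cdf(x + dx) - std_normal_cdf(x)
    p_x = mass / dx                        # density in data space
    p_z = mass / abs(f(x + dx) - f(x))     # density in latent space
    return p_z / p_x


points = [-1.5, -0.5, 0.0, 0.5, 1.5]
for x in points:
    print(x, density_ratio(isometric, x), density_ratio(warped, x))
```

For the isometric map the ratio stays at 1 for every point, so the latent density is proportional to the data density. For the warped map the ratio follows approximately 1 / (1 + 3x^2), so a likelihood read off in latent space would be distorted by a factor that depends on where the point lies.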
