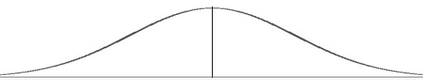

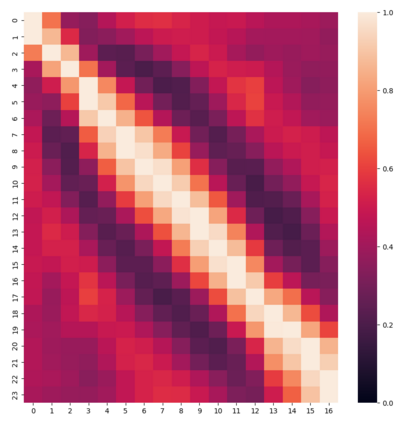

Prior works on action representation learning mainly focus on designing various architectures to extract the global representations for short video clips. In contrast, many practical applications such as video alignment have strong demand for learning dense representations for long videos. In this paper, we introduce a novel contrastive action representation learning (CARL) framework to learn frame-wise action representations, especially for long videos, in a self-supervised manner. Concretely, we introduce a simple yet efficient video encoder that considers spatio-temporal context to extract frame-wise representations. Inspired by the recent progress of self-supervised learning, we present a novel sequence contrastive loss (SCL) applied on two correlated views obtained through a series of spatio-temporal data augmentations. SCL optimizes the embedding space by minimizing the KL-divergence between the sequence similarity of two augmented views and a prior Gaussian distribution of timestamp distance. Experiments on FineGym, PennAction and Pouring datasets show that our method outperforms previous state-of-the-art by a large margin for downstream fine-grained action classification. Surprisingly, although without training on paired videos, our approach also shows outstanding performance on video alignment and fine-grained frame retrieval tasks. Code and models are available at https://github.com/minghchen/CARL_code.

翻译:先前的行动代表学习工作主要侧重于设计各种结构,以提取全球展示短视频片段的视频片段。相反,许多实际应用,如视频校正等,都强烈要求学习内容密集的长视频片段。在本文中,我们引入了一个新的对比性行动代表学习框架(CARL)框架(CARL),以自我监督的方式学习框架性行动代表,特别是长视频。具体地说,我们引入了一个简单而高效的视频编码器,考虑时空背景来提取框架性演示。在自我监督学习最近进展的启发下,我们展示了一种新颖的排序对比性损失(SCL),用于通过一系列sparto-temoral 数据增强来获取的两种相关观点。SCLL优化嵌入空间,通过将两种观点相近的相近相近的顺序和先前的标语段的距离的分布间距进行最小化。关于 FineGymym、PennAction 和 Pouring数据集显示我们的方法超越了先前的状态状态,而没有大幅度地用于下游校程/图像校正校正。