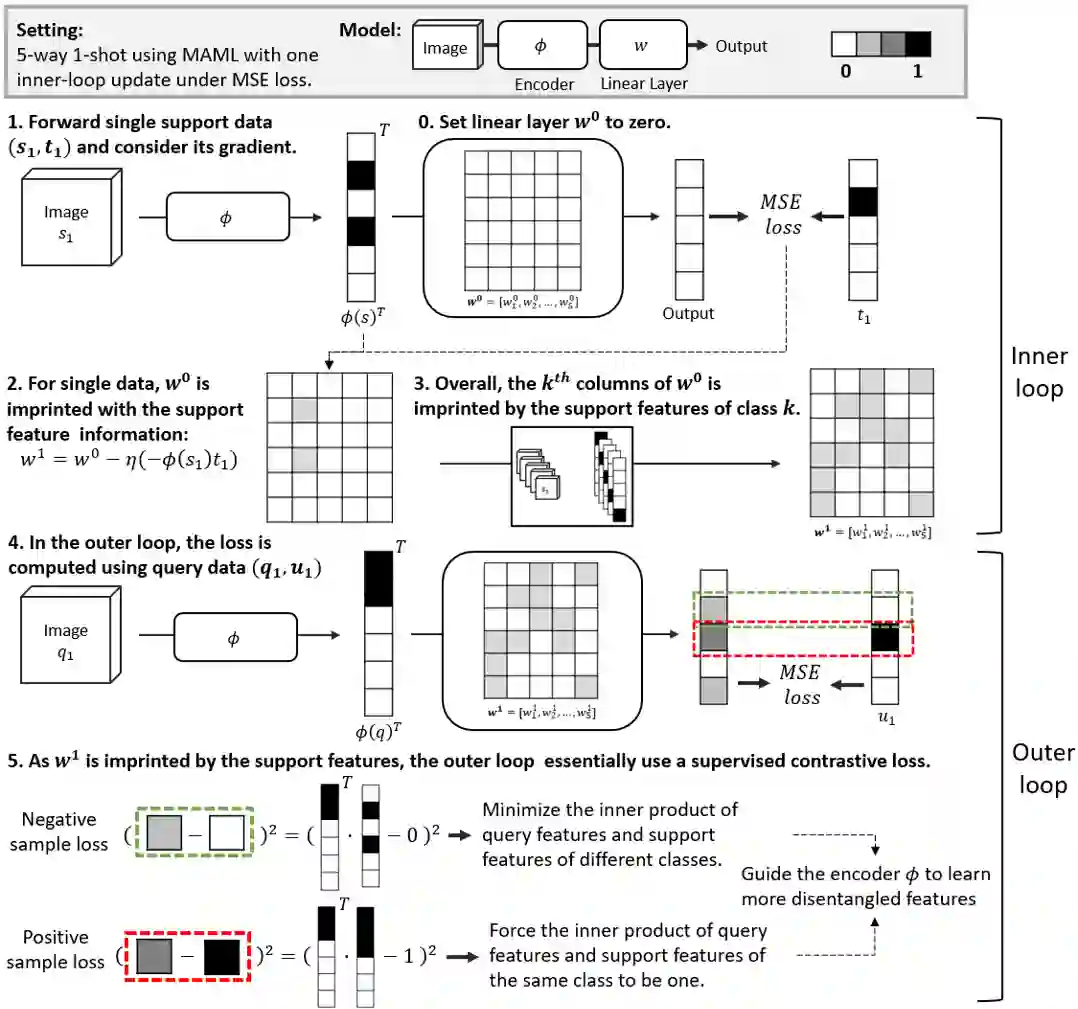

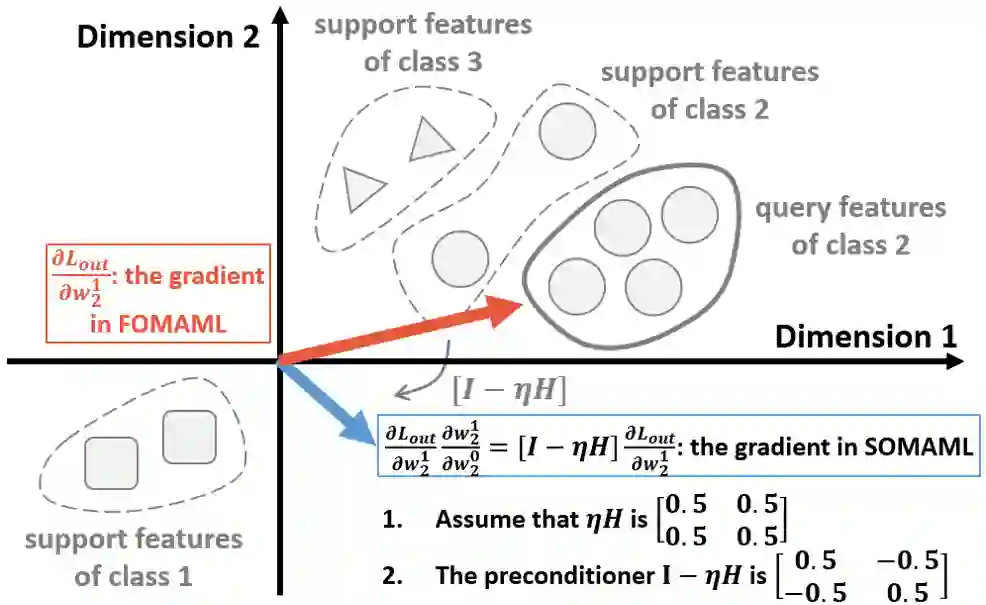

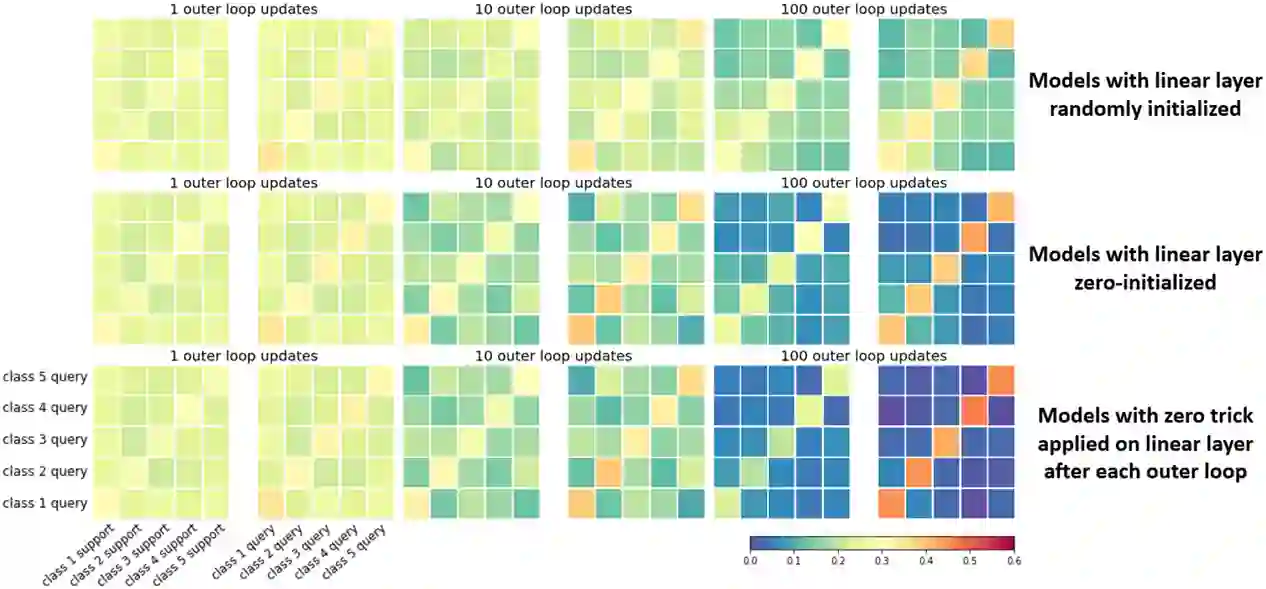

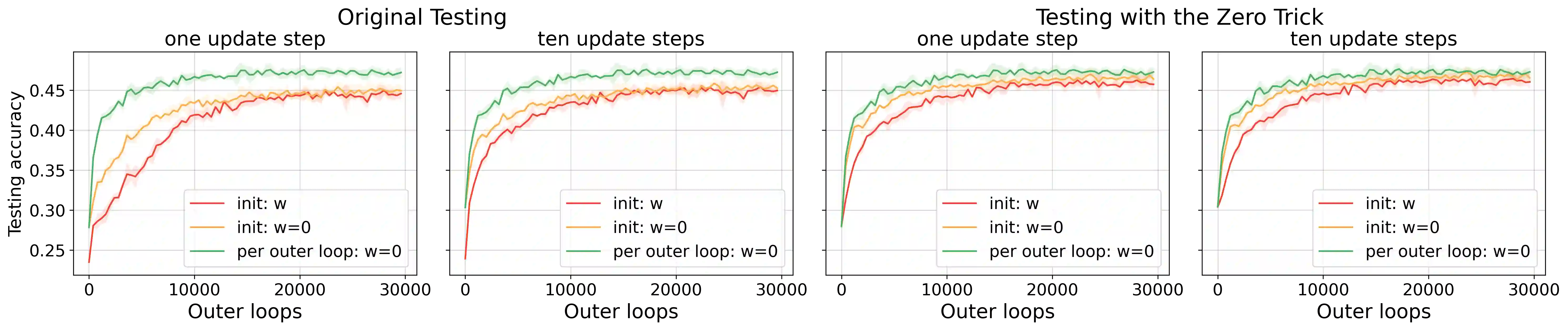

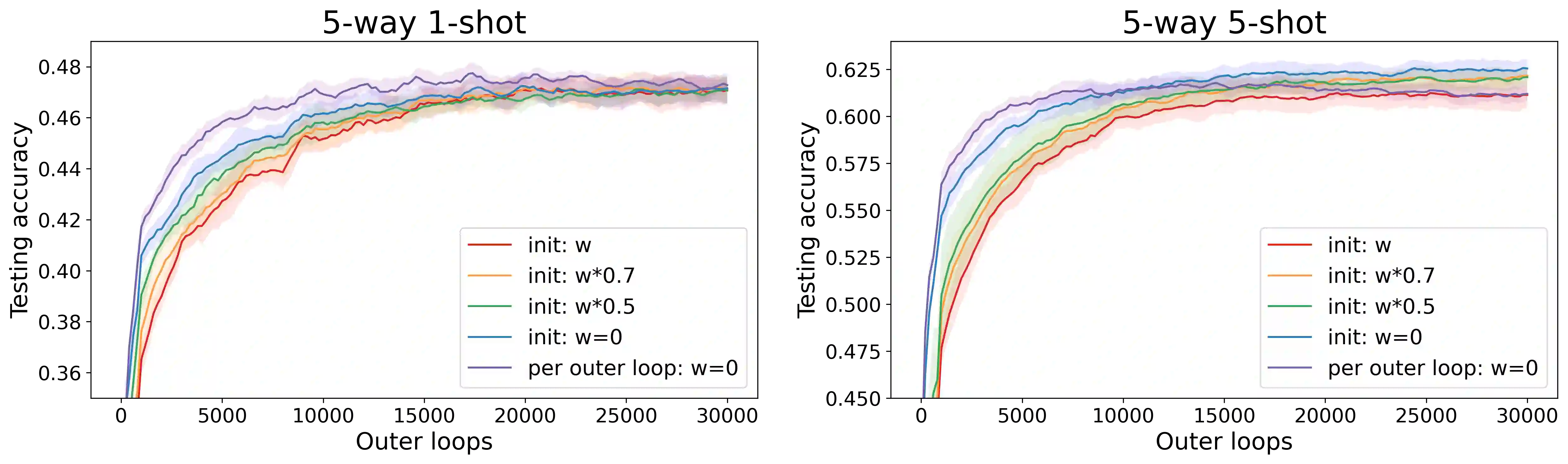

Model-agnostic meta-learning (MAML) is one of the most popular and widely adopted meta-learning algorithms, and it has achieved remarkable success across a variety of learning problems. However, because of its distinctive design of nested inner-loop and outer-loop updates, which govern task-specific and meta-model-centric learning respectively, the underlying learning objective of MAML remains implicit, which hinders a more direct understanding of the algorithm. In this paper, we offer a new perspective on the working mechanism of MAML and show that MAML is analogous to a meta-learner trained with a supervised contrastive objective: query features are pulled towards the support features of the same class and pushed away from those of different classes. We verify this contrastiveness experimentally through an analysis based on cosine similarity. Moreover, our analysis reveals that the vanilla MAML algorithm contains an undesirable interference term that originates from the random initialization and from cross-task interaction. We therefore propose a simple but effective technique, the zeroing trick, to alleviate this interference. Extensive experiments on the miniImagenet and Omniglot datasets show that the zeroing trick brings consistent improvements, validating its effectiveness.
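To make the contrastive reading concrete, here is a one-step sketch under simplifying assumptions that are ours rather than the abstract's: an $N$-way task; support features $\phi_i = \phi(x_i)$ and a query feature $\phi_q = \phi(x_q)$ held fixed during the inner loop; only a final linear head $W = [w_1, \dots, w_N]$ adapted; one SGD step with rate $\eta$ on the summed support cross-entropy; and $p_{ik}$ the softmax probability of class $k$ for support example $i$ under the initial head $W^{(0)}$. With $W^{(0)} = 0$, every $p_{ik} = 1/N$.

$$
\begin{aligned}
\mathcal{L}_{\mathcal{S}}(W) &= -\sum_{i} \log \frac{\exp(w_{y_i}^\top \phi_i)}{\sum_{j=1}^{N} \exp(w_j^\top \phi_i)},
\qquad
\frac{\partial \mathcal{L}_{\mathcal{S}}}{\partial w_k} = \sum_{i} \big(p_{ik} - \mathbb{1}[y_i = k]\big)\,\phi_i, \\
w_k' &= w_k^{(0)} - \eta \sum_{i} \big(p_{ik} - \mathbb{1}[y_i = k]\big)\,\phi_i
\;\overset{W^{(0)} = 0}{=}\; \eta \sum_{i:\,y_i = k} \phi_i - \frac{\eta}{N} \sum_{i} \phi_i, \\
w_k'^\top \phi_q &= \eta \sum_{i:\,y_i = k} \phi_i^\top \phi_q \;-\; \underbrace{\frac{\eta}{N} \sum_{i} \phi_i^\top \phi_q}_{\text{same for every } k}, \\
\mathcal{L}_q &= -\eta \sum_{i:\,y_i = y_q} \phi_i^\top \phi_q + \log \sum_{k=1}^{N} \exp\Big(\eta \sum_{i:\,y_i = k} \phi_i^\top \phi_q\Big).
\end{aligned}
$$

Minimizing the outer-loop query loss $\mathcal{L}_q$ raises the query's inner products with same-class support features and lowers those with other classes, which has the form of a supervised contrastive loss. With a nonzero $W^{(0)}$, every logit also picks up $w_k^{(0)\top}\phi_q$ and the $p_{ik}$ are no longer uniform, which is one way to see an interference term tied to random initialization; setting $W^{(0)} = 0$ removes it. This single-task, single-step sketch does not model the cross-task interaction term, and the paper's own derivation may differ in its details.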

翻译:模型-不可知元学习(MAML)是当今最受欢迎和最广泛采用的元学习算法之一,在各种学习问题中取得了显著的成功。然而,随着嵌套内环和外环更新的独特设计,分别指导特定任务和以元模为中心的学习,MAML的基本学习目标仍然隐含,从而妨碍对它更直接的理解。在本文件中,我们为MAML的工作机制提供了一个新视角,发现:MAML类似于使用受监督的对比性目标功能的元激光器,其查询功能被拉向同一类和不同类的支持特征,其中这种对比性通过基于同系相似性的分析实验性加以验证。此外,我们的分析表明,香草MAML算法有一个来自随机初始化和跨任务互动的不良干扰术语。因此,我们建议一种简单而有效的技术,即零化技巧,以缓解这种干扰,然后在微型Imagenet和Omniglot两个类别中进行广泛的实验,然后通过有效的技术来显示我们提议的一致的改进。
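The contrastive reading of MAML can also be checked numerically. Under simplifying assumptions (fixed features, only a linear head adapted, one SGD step on the summed support cross-entropy), starting the inner loop from an all-zero head makes each query logit equal the learning rate times the summed inner products with that class's support features, minus a term shared by all classes. The NumPy sketch below checks this, contrasts it with a randomly initialized head, and computes a same-class versus different-class cosine similarity. The helper names `inner_step`, `contrastive_logits`, and `mean_cosine`, the clustered synthetic features, and the reading of the zeroing trick as "start each task's inner loop from a zero head" are illustrative assumptions, not the authors' code.

```python
import numpy as np


def softmax(z):
    # Numerically stable softmax over the last axis.
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def inner_step(W, feats, labels, lr):
    # One SGD step on the summed support cross-entropy, adapting only the
    # linear head W (shape: n_way x dim); the features stay fixed.
    probs = softmax(feats @ W.T)                 # (n_support, n_way)
    onehot = np.eye(W.shape[0])[labels]          # (n_support, n_way)
    grad = (probs - onehot).T @ feats            # dL/dW, (n_way, dim)
    return W - lr * grad


def contrastive_logits(s_feats, s_labels, q_feats, n_way, lr):
    # Closed form for a zero-initialized head, dropping the class-independent
    # term: lr * summed inner products with each class's support features.
    onehot = np.eye(n_way)[s_labels]             # (n_support, n_way)
    return lr * (q_feats @ s_feats.T) @ onehot   # (n_query, n_way)


def mean_cosine(s_feats, s_labels, q_feats, q_labels):
    # Average query-support cosine similarity, split into same-class and
    # different-class pairs (a hypothetical version of the cosine analysis).
    s = s_feats / np.linalg.norm(s_feats, axis=1, keepdims=True)
    q = q_feats / np.linalg.norm(q_feats, axis=1, keepdims=True)
    cos = q @ s.T                                # (n_query, n_support)
    same = q_labels[:, None] == s_labels[None, :]
    return cos[same].mean(), cos[~same].mean()


rng = np.random.default_rng(0)
n_way, k_shot, n_query, dim, lr = 5, 1, 3, 16, 0.1
s_labels = np.repeat(np.arange(n_way), k_shot)
q_labels = np.repeat(np.arange(n_way), n_query)

# Synthetic "features": class centers plus noise, so classes are separable.
centers = 3.0 * rng.normal(size=(n_way, dim))
s_feats = centers[s_labels] + rng.normal(size=(len(s_labels), dim))
q_feats = centers[q_labels] + rng.normal(size=(len(q_labels), dim))

# Zeroing trick (as assumed here): the inner loop starts from W = 0.
W_zero = inner_step(np.zeros((n_way, dim)), s_feats, s_labels, lr)
logits_zero = q_feats @ W_zero.T

# Random initialization for comparison: the initial head adds w_k^(0) . phi_q
# to each logit, and non-uniform support probabilities change the update too.
W_rand = inner_step(rng.normal(size=(n_way, dim)), s_feats, s_labels, lr)
logits_rand = q_feats @ W_rand.T

closed = contrastive_logits(s_feats, s_labels, q_feats, n_way, lr)
same_cos, diff_cos = mean_cosine(s_feats, s_labels, q_feats, q_labels)
```

With the zero head, `logits_zero` and `closed` differ only by a per-query constant, so their softmax outputs coincide; with a random head they do not. On these clustered synthetic features, `same_cos` exceeds `diff_cos`, the pattern the contrastive reading would lead one to expect for well-trained features.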