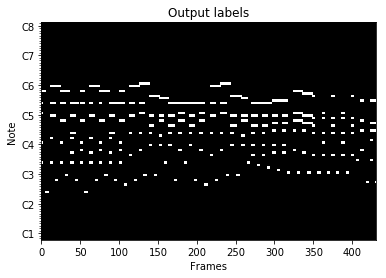

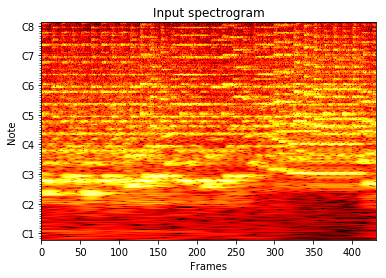

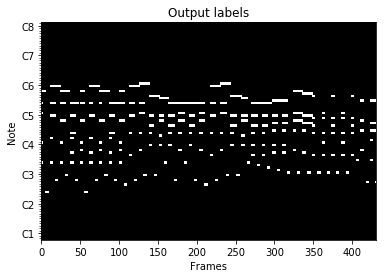

Recent directions in automatic speech recognition (ASR) research have shown that applying deep learning models from image recognition challenges in computer vision is beneficial. As automatic music transcription (AMT) is superficially similar to ASR, in the sense that methods often rely on transforming spectrograms to symbolic sequences of events (e.g. words or notes), deep learning should benefit AMT as well. In this work, we outline an online polyphonic pitch detection system that streams audio to MIDI by ConvLSTMs. Our system achieves state-of-the-art results on the 2007 MIREX multi-F0 development set, with an F-measure of 83\% on the bassoon, clarinet, flute, horn and oboe ensemble recording without requiring any musical language modelling or assumptions of instrument timbre.

翻译:自动语音识别(ASR)研究的近期方向表明,应用从计算机视觉图像识别挑战中发现图像识别挑战的深层次学习模型是有益的。 由于自动音乐抄录(AMT)与ASR表面上类似,因为方法往往依赖将光谱转换为事件符号序列(如文字或笔记),深层次学习也应有益于AMT。在这项工作中,我们概述了一个在线多声声道探测系统,该系统通过ConvLSTMs向MIDI输送音频。我们的系统在2007年MIREX多频-F0开发集上取得了最先进的结果,对低音、单簧、笛子、喇叭和双声调共奏记录采取了83°的F度测量法,而不需要任何音乐语言建模或仪器缩微假设。