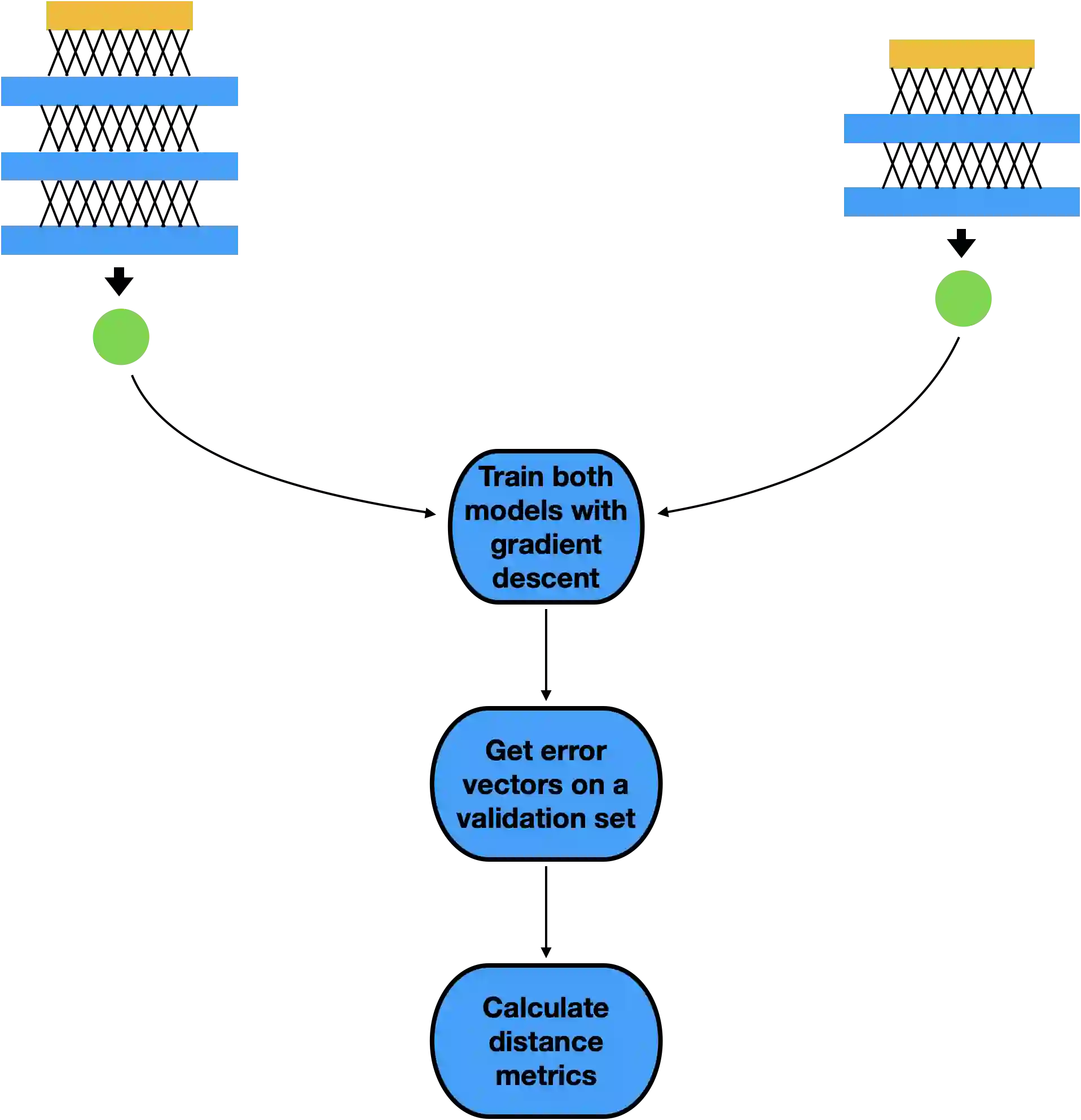

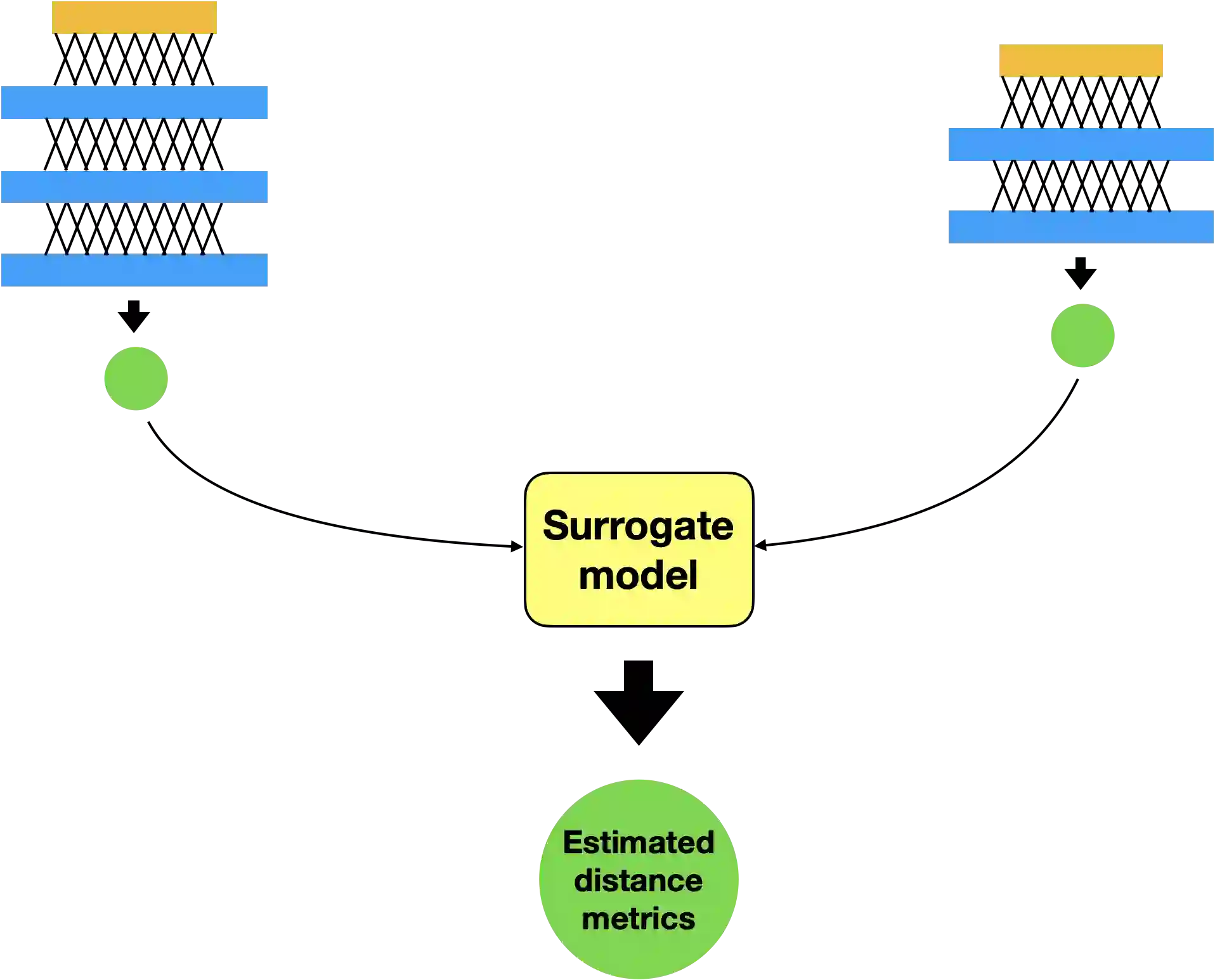

Neuroevolution combined with Novelty Search, which promotes behavioural diversity, can construct high-performing ensembles for classification. However, training each evolved architecture with gradient descent during the search can be computationally prohibitive. Here we propose a method that overcomes this limitation with a surrogate model that estimates the behavioural distance between two neural network architectures, which is the quantity needed to compute the sparseness term in Novelty Search. We demonstrate a 10-fold speedup over previous work and significantly improve on previously reported results on three computer vision benchmark datasets: CIFAR-10, CIFAR-100, and SVHN. This improvement results from the expanded architecture search space that the surrogate makes feasible. Our method offers an improved paradigm for horizontally scaling learning algorithms, making an explicit search for diversity considerably more tractable within the same bounded resources.
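In the standard Novelty Search formulation, the sparseness of a candidate is the mean distance to its k nearest neighbours in the current population and archive. The Python sketch below illustrates where a surrogate fits into that computation. It rests on several assumptions, none taken from the paper. `disagreement` is one plausible behavioural distance between two *trained* classifiers: the fraction of inputs on which their predicted labels differ. `make_surrogate` is a hypothetical stand-in that predicts a distance directly from numeric architecture encodings, so that no network has to be trained just to score its novelty. The encodings, the weighted-L1 form, and the weights are all invented for illustration. None of this is the authors' surrogate or behaviour descriptor.

```python
from typing import Callable, Sequence

# Hypothetical numeric encoding of an architecture, e.g. (depth, width).
Arch = Sequence[float]


def disagreement(preds_a: Sequence[int], preds_b: Sequence[int]) -> float:
    """Behavioural distance between two trained classifiers (an assumed
    choice): the fraction of inputs on which their predicted labels differ."""
    if len(preds_a) != len(preds_b) or len(preds_a) == 0:
        raise ValueError("need equal-length, non-empty prediction lists")
    return sum(a != b for a, b in zip(preds_a, preds_b)) / len(preds_a)


def make_surrogate(weights: Sequence[float]) -> Callable[[Arch, Arch], float]:
    """Hypothetical surrogate: a weighted L1 distance between architecture
    encodings. In practice its parameters would be fitted so that its output
    approximates a behavioural distance such as `disagreement`, without
    training either network."""
    def predict(a: Arch, b: Arch) -> float:
        return sum(w * abs(x - y) for w, x, y in zip(weights, a, b))
    return predict


def sparseness(candidate: Arch,
               others: Sequence[Arch],
               distance: Callable[[Arch, Arch], float],
               k: int = 3) -> float:
    """Novelty Search sparseness: the mean distance from `candidate` to its
    k nearest neighbours among `others` (population plus archive)."""
    dists = sorted(distance(candidate, o) for o in others)
    if not dists:
        raise ValueError("need at least one neighbour")
    k = min(k, len(dists))
    return sum(dists[:k]) / k


if __name__ == "__main__":
    surrogate = make_surrogate([0.1, 0.05])       # made-up weights
    population = [[4, 64], [6, 128], [8, 256]]    # made-up (depth, width) encodings
    archive = [[4, 32]]
    candidate = [5, 96]
    # Novelty is scored from encodings alone; no gradient descent is needed.
    print(sparseness(candidate, population + archive, surrogate, k=2))
```

Because `sparseness` accepts any distance callable, the same selection loop could, in principle, use a distance computed on real predictions after training. That is the expensive baseline a surrogate is meant to avoid.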
