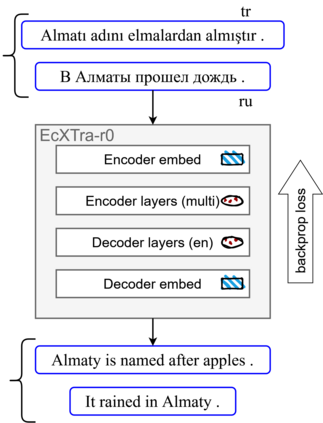

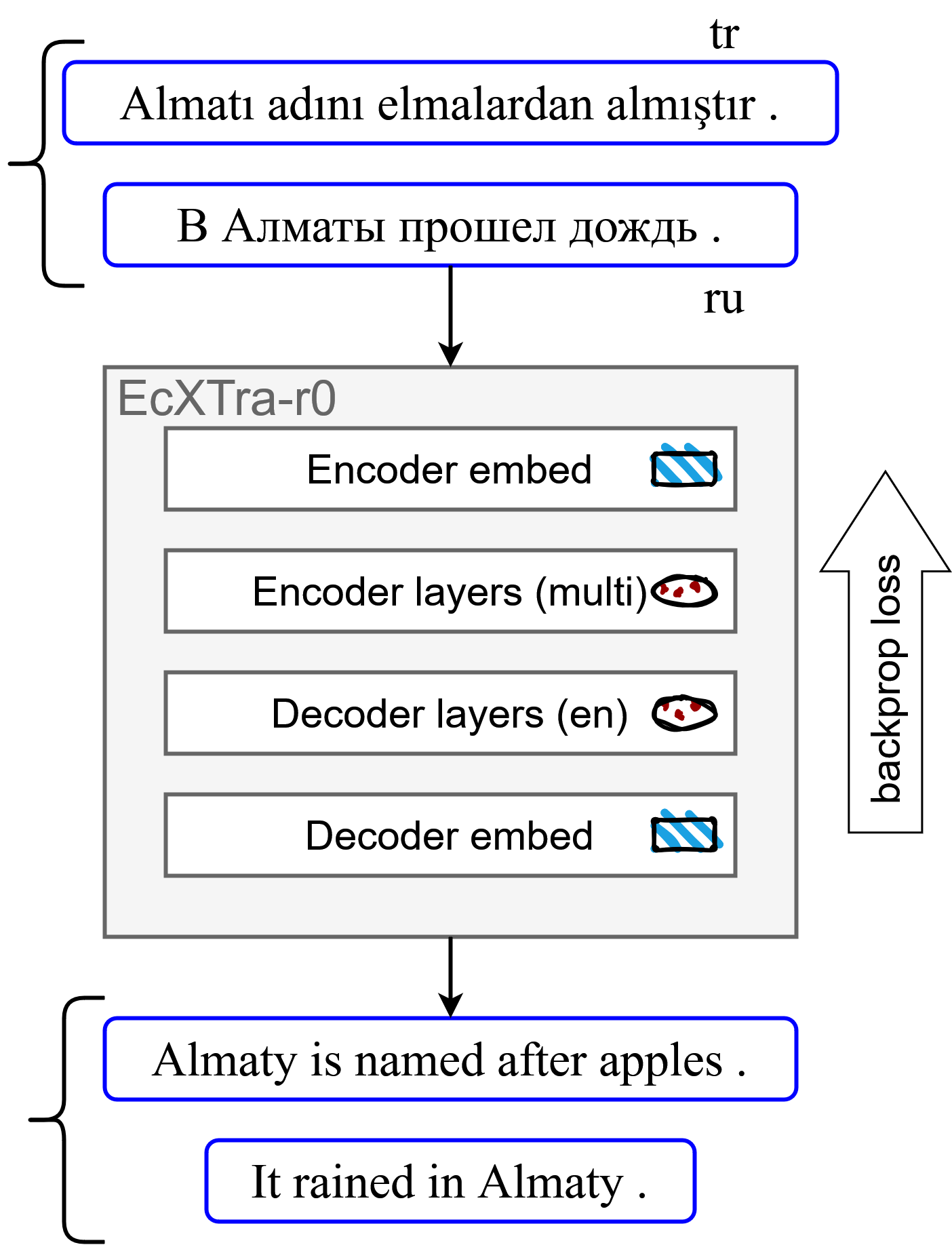

We propose a two-stage approach for training a single NMT model to translate unseen languages both to and from English. In the first stage, we initialize an encoder-decoder model with pretrained XLM-R and RoBERTa weights, then perform multilingual fine-tuning on parallel data from 40 languages into English. We find that this model generalizes to zero-shot translation of unseen languages. In the second stage, we leverage this generalization ability to generate synthetic parallel data from monolingual datasets, then train with successive rounds of bidirectional back-translation. We term our approach EcXTra (English-centric Crosslingual (X) Transfer). Our approach is conceptually simple, using only a standard cross-entropy objective throughout, and it is data-driven, sequentially leveraging auxiliary parallel data and then monolingual data. We evaluate our unsupervised NMT results on 7 low-resource languages and find that each round of back-translation training further improves performance in both directions. Our final single EcXTra-trained model achieves competitive translation performance in all translation directions, notably establishing a new state of the art for English-to-Kazakh (22.9 vs. 10.4 BLEU).
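The data flow of the second stage can be illustrated with a minimal sketch. Everything here is hypothetical: `ToyTranslator`, `back_translation_round`, and `iterative_back_translation` are illustrative names, and the "model" is a word-for-word lookup table standing in for the neural encoder-decoder trained with cross-entropy. The round order (the X→En direction labels X monolingual text first, since stage 1 only fine-tunes into English) is an assumption, not a detail confirmed by the abstract. In each back-translation pair the model output is the synthetic source side, and the real monolingual sentence is the target side.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# (source sentence, target sentence): in back-translation the source side is
# synthetic (model output) and the target side is real monolingual text.
Pair = Tuple[str, str]


@dataclass
class ToyTranslator:
    """Hypothetical stand-in for ONE shared bidirectional NMT model.

    Each direction ("x2en", "en2x") is a word-for-word lookup table; unknown
    words are copied through. `train` memorises position-aligned word pairs,
    which is enough to show how synthetic data flows between rounds.
    """
    tables: Dict[str, Dict[str, str]] = field(
        default_factory=lambda: {"x2en": {}, "en2x": {}}
    )

    def translate(self, sentence: str, direction: str) -> str:
        table = self.tables[direction]
        return " ".join(table.get(w, w) for w in sentence.split())

    def train(self, pairs: List[Pair], direction: str) -> None:
        table = self.tables[direction]
        for src, tgt in pairs:
            for s, t in zip(src.split(), tgt.split()):
                table[s] = t


def back_translation_round(model: ToyTranslator,
                           mono_x: List[str], mono_en: List[str]) -> None:
    # Step 1 (assumed order): the X->En direction, available after stage-1
    # fine-tuning, back-translates real X text; (synthetic En, real X) pairs
    # train the En->X direction.
    en_to_x_pairs = [(model.translate(x, "x2en"), x) for x in mono_x]
    model.train(en_to_x_pairs, "en2x")
    # Step 2: the updated En->X direction back-translates real English text;
    # (synthetic X, real En) pairs train the X->En direction.
    x_to_en_pairs = [(model.translate(en, "en2x"), en) for en in mono_en]
    model.train(x_to_en_pairs, "x2en")


def iterative_back_translation(model: ToyTranslator, mono_x: List[str],
                               mono_en: List[str], rounds: int) -> ToyTranslator:
    # Successive rounds: each round reuses the model refined by the last one.
    for _ in range(rounds):
        back_translation_round(model, mono_x, mono_en)
    return model


if __name__ == "__main__":
    # Made-up X-language tokens "xa", "xb"; only X->En is known initially.
    model = ToyTranslator({"x2en": {"xa": "cat", "xb": "dog"}, "en2x": {}})
    print(model.translate("dog cat", "en2x"))  # dog cat (copied through)
    iterative_back_translation(model, ["xa xb"], ["cat dog"], rounds=2)
    print(model.translate("dog cat", "en2x"))  # xb xa
```

Keeping both directions inside one object mirrors the abstract's use of a single model for both directions. A real implementation would instead run beam or sampled decoding and then take gradient steps on the synthetic pairs.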

翻译:我们提出了一种两阶段的方法,用于训练单个神经机器翻译模型,使其能够将未见过的语言翻译成英语,并从英语翻译回这些语言。在第一阶段,我们将编码器-解码器模型初始化为预训练的 XLM-R 和 RoBERTa 权重,然后对 40 种语言的并行数据进行多语言微调,以从中文翻译英语。我们发现这个模型可以推广到未见过的语言的零-shot翻译。对于第二个阶段,我们利用这种广义能力来从单语数据生成合成并行数据(synthetic parallel data),然后用连续的双向回译训练。我们将我们的方法称为 EcXTra(英语为中心的跨语言转换)。我们的方法在概念上很简单,只使用标准的交叉熵目标函数,同时也是数据驱动的,串行地利用辅助并行数据和单语数据。我们在 7 种低资源语言上评估了我们的无监督神经机器翻译结果,并发现每个回译训练轮次进一步精细双向表现。我们最终单个 EcXTra 训练的模型在所有翻译方向上都实现了竞争性的翻译表现,特别是在英语到哈萨克语的翻译中,建立了新的最高水平(22.9 > 10.4 BLEU)。