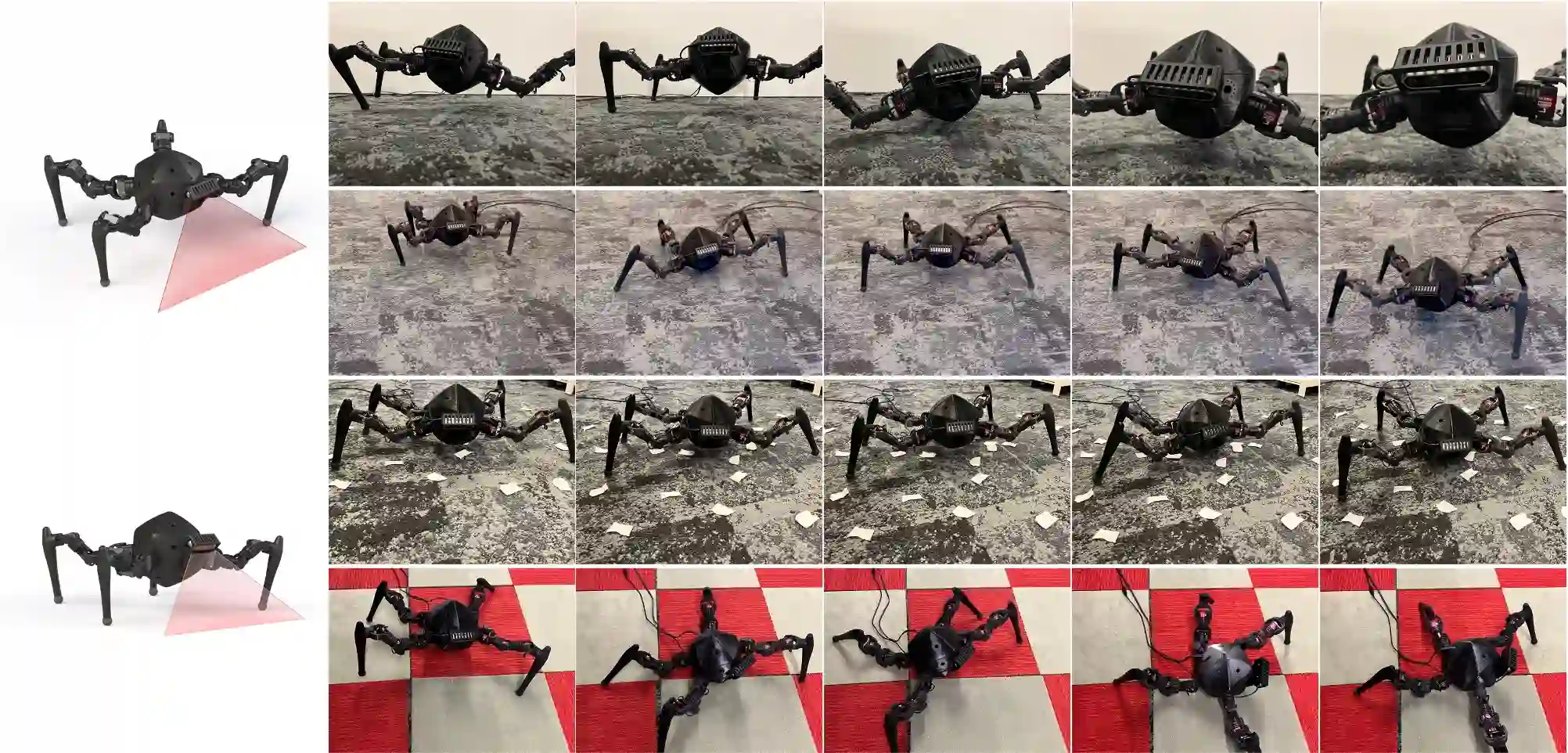

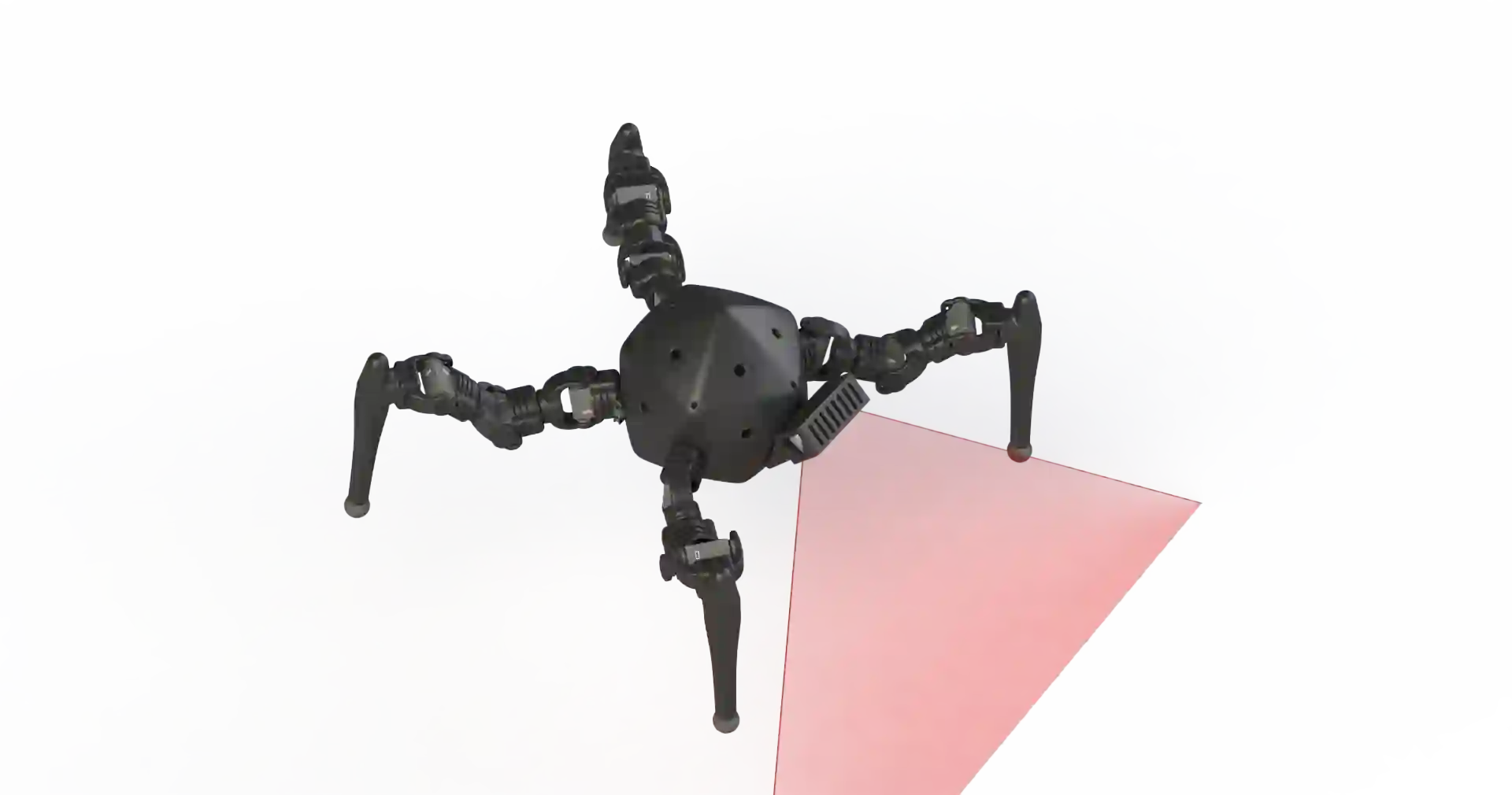

Inertial Measurement Unit (IMU) is ubiquitous in robotic research. It provides posture information for robots to realize balance and navigation. However, humans and animals can perceive the movement of their bodies in the environment without precise orientation or position values. This interaction inherently involves a fast feedback loop between perception and action. This work proposed an end-to-end approach that uses high dimension visual observation and action commands to train a visual self-model for legged locomotion. The visual self-model learns the spatial relationship between the robot body movement and the ground texture changes from image sequences. We demonstrate that the robot can leverage the visual self-model to achieve various locomotion tasks in the real-world environment that the robot does not see during training. With our proposed method, robots can do locomotion without IMU or in an environment with no GPS or weak geomagnetic fields like the indoor and urban canyons in the city.

翻译:惰性测量单位(IMU)在机器人研究中无处不在。 它为机器人提供姿态信息, 以实现平衡和导航。 但是, 人类和动物可以在没有精确方向或位置值的情况下感知到他们身体在环境中的移动。 这种互动必然涉及感知和行动之间的快速反馈环环。 这项工作提出了一个端对端方法, 使用高维视觉观察和动作命令来训练脚动的视觉自我模型。 视觉自我模型学习机器人身体运动与图像序列的地面纹理变化之间的空间关系。 我们证明机器人可以利用视觉自我模型在真实世界环境中完成机器人在训练中看不到的各种移动任务。 以我们提议的方法, 机器人可以在没有IMU或城市室内和城市峡谷等没有全球定位系统或薄弱的地磁场的环境中进行移动。