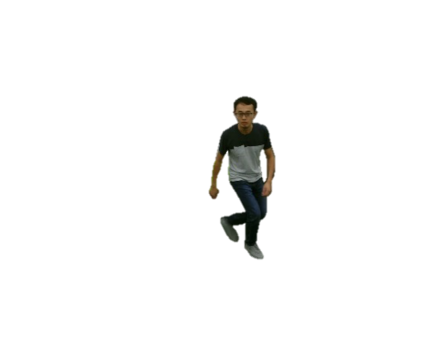

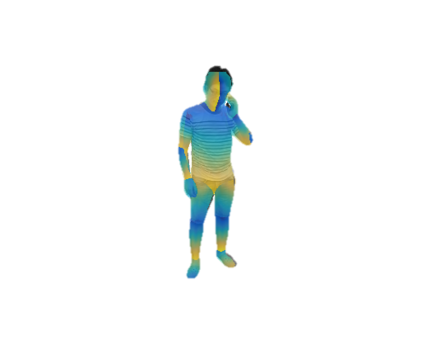

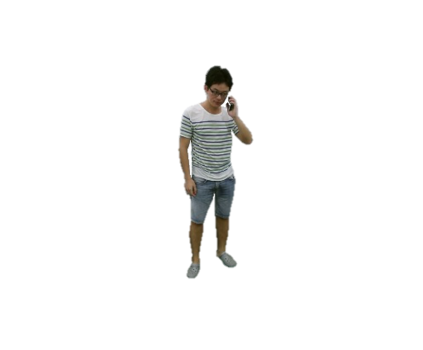

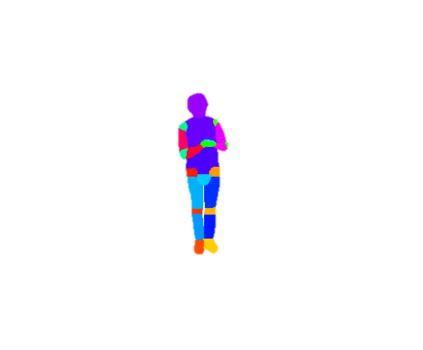

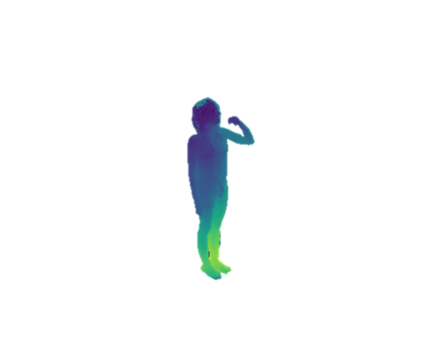

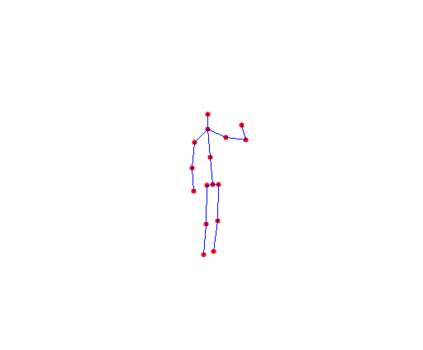

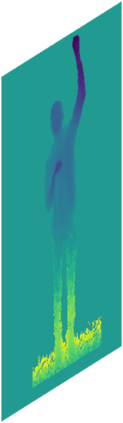

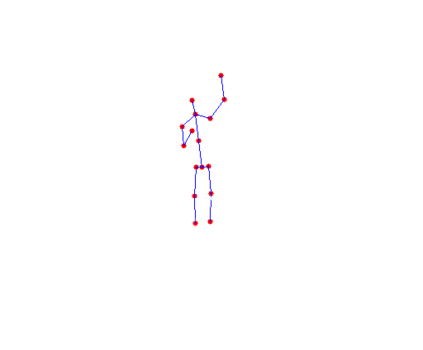

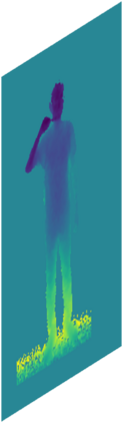

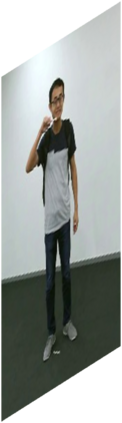

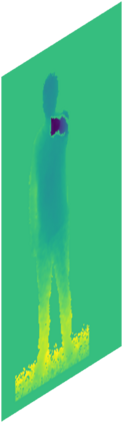

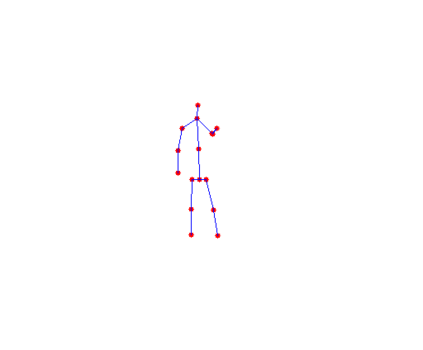

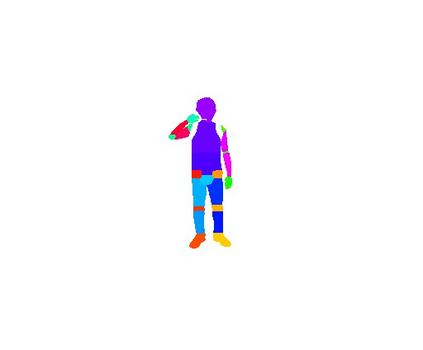

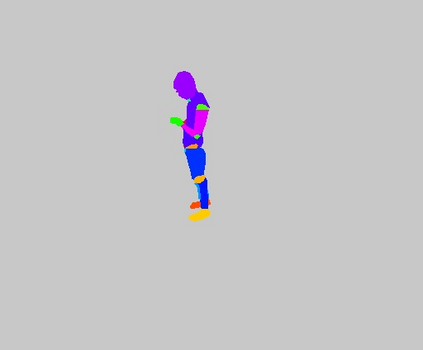

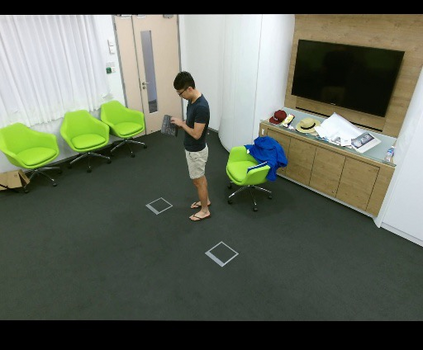

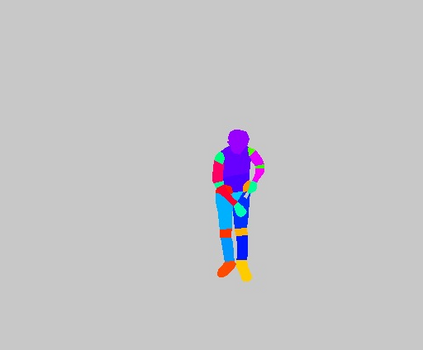

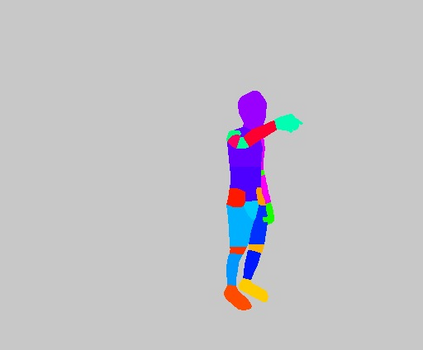

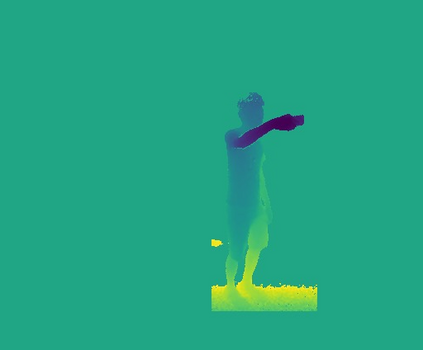

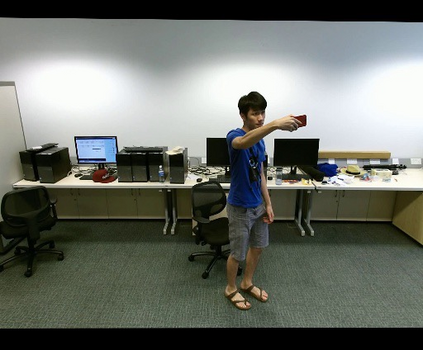

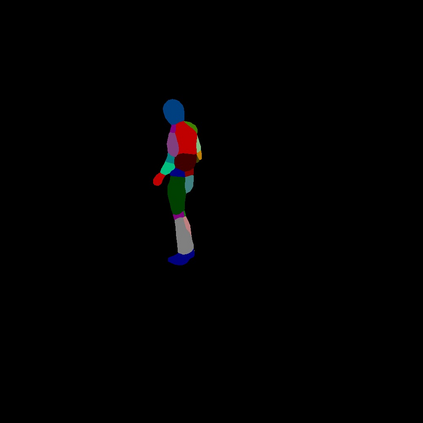

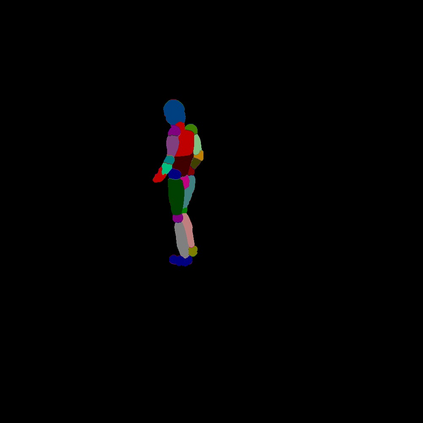

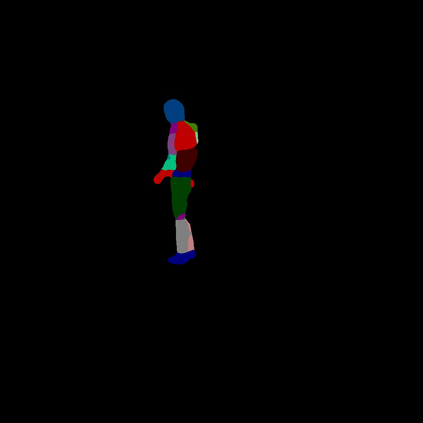

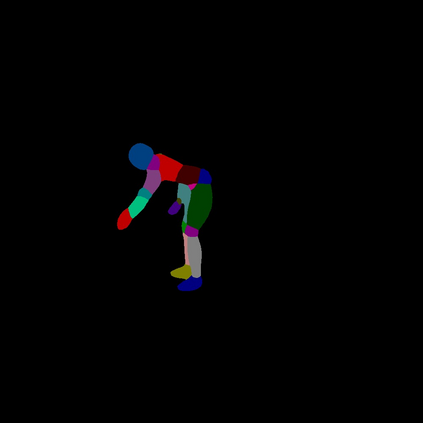

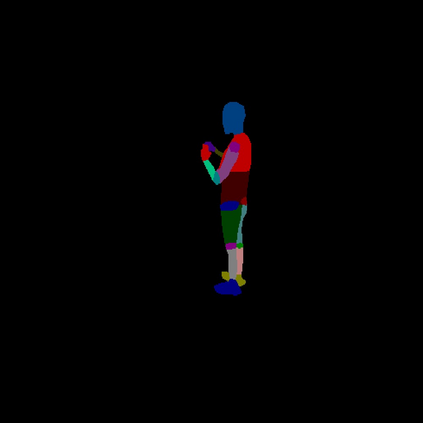

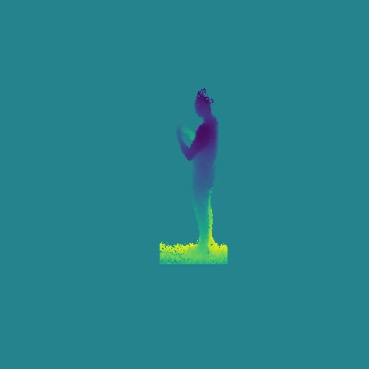

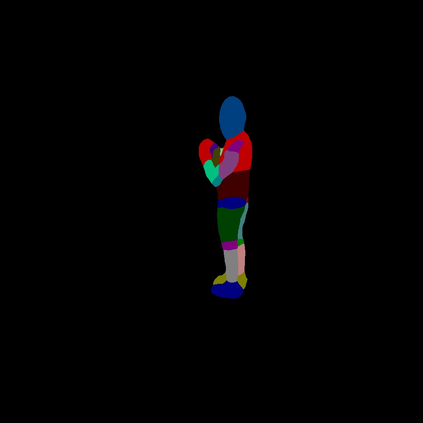

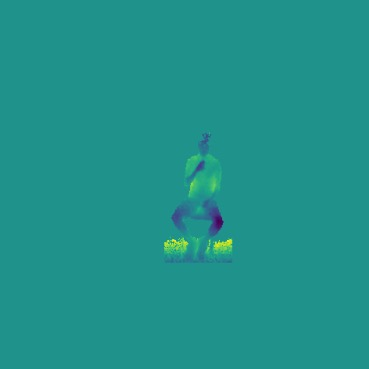

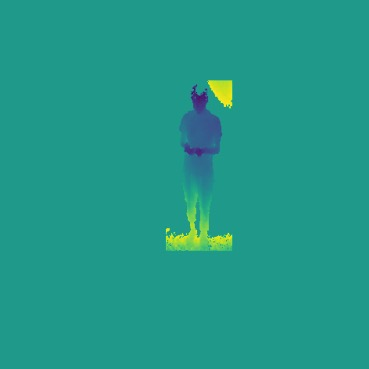

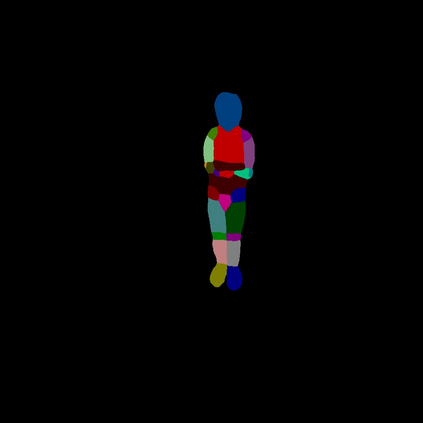

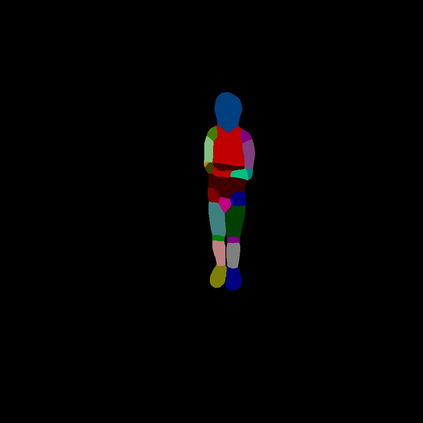

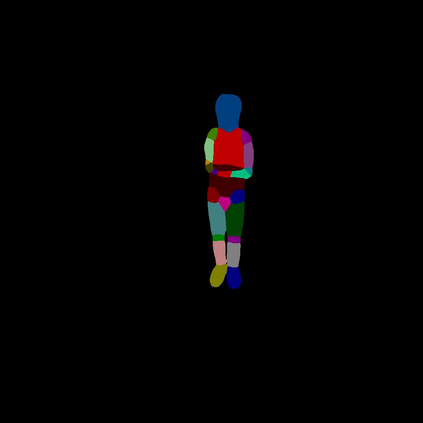

Human-centric perception plays a vital role in vision and graphics, but annotating human-centric data is prohibitively expensive. It is therefore desirable to have a versatile pre-trained model that serves as a foundation for data-efficient transfer to downstream tasks. To this end, we propose HCMoCo, a Human-Centric Multi-Modal Contrastive Learning framework that leverages the multi-modal nature of human data (e.g., RGB, depth, 2D keypoints) for effective representation learning. This objective poses two main challenges: dense pre-training on multi-modal data and efficient use of sparse human priors. To tackle them, we design two novel targets, Dense Intra-sample Contrastive Learning and Sparse Structure-aware Contrastive Learning, which hierarchically learn a modal-invariant latent space featuring a continuous and ordinal feature distribution and structure-aware semantic consistency. HCMoCo provides pre-training for different modalities by combining heterogeneous datasets, which allows efficient use of existing task-specific human data. Extensive experiments on four downstream tasks of different modalities demonstrate the effectiveness of HCMoCo, especially under data-efficient settings (7.16% and 12% improvement on DensePose Estimation and Human Parsing, respectively). Moreover, we demonstrate the versatility of HCMoCo by exploring cross-modality supervision and missing-modality inference, validating its strong ability in cross-modal association and reasoning.
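To make the idea of a modal-invariant latent space more concrete, the following is a minimal sketch of a generic sample-level cross-modal InfoNCE loss, in which the embedding of one modality (say RGB) is pulled toward the embedding of the paired sample in another modality (say depth) and pushed away from the other samples in the batch. It is an illustration only, not the Dense Intra-sample or Sparse Structure-aware targets proposed here, whose exact form is not given in this abstract. The function names, the temperature of 0.07, and the random toy embeddings are all assumptions, and plain Python is used so that the block has no dependencies.

```python
import math
import random


def normalize(v):
    """Scale a vector to unit L2 norm (a zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def info_nce(anchors, candidates, temperature=0.07):
    """Mean cross-entropy in which candidates[i] is the positive for anchors[i]
    and every other candidate in the batch acts as a negative."""
    anchors = [normalize(v) for v in anchors]
    candidates = [normalize(v) for v in candidates]
    total = 0.0
    for i, a in enumerate(anchors):
        # Cosine similarity to every candidate, scaled by the temperature.
        logits = [sum(x * y for x, y in zip(a, c)) / temperature
                  for c in candidates]
        # Numerically stable log-sum-exp for the softmax denominator.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denominator - logits[i]
    return total / len(anchors)


def cross_modal_loss(z_a, z_b, temperature=0.07):
    """Symmetric InfoNCE between paired embeddings of two modalities
    (hypothetical helper, not the paper's objective)."""
    return 0.5 * (info_nce(z_a, z_b, temperature)
                  + info_nce(z_b, z_a, temperature))


# Toy data (assumed): 8 paired samples with 16-dimensional embeddings.
random.seed(0)
rgb = [[random.gauss(0, 1) for _ in range(16)] for _ in range(8)]
# "Depth" embeddings that nearly coincide with their RGB partners.
depth = [[x + 0.01 * random.gauss(0, 1) for x in row] for row in rgb]

loss_aligned = cross_modal_loss(rgb, depth)
# Reversing the batch pairs every RGB sample with the wrong depth sample.
loss_mismatched = cross_modal_loss(rgb, depth[::-1])
print(loss_aligned, loss_mismatched)
```

In this toy setting the loss is small when paired embeddings agree across modalities and large when the pairing is broken, which is the behaviour a contrastive pre-training objective of this general kind rewards.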
