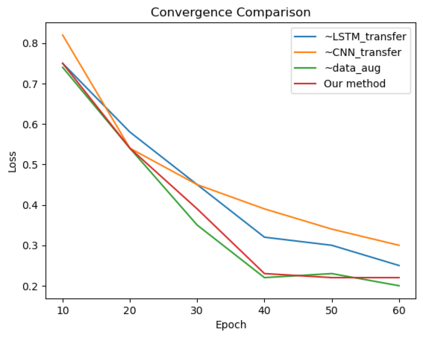

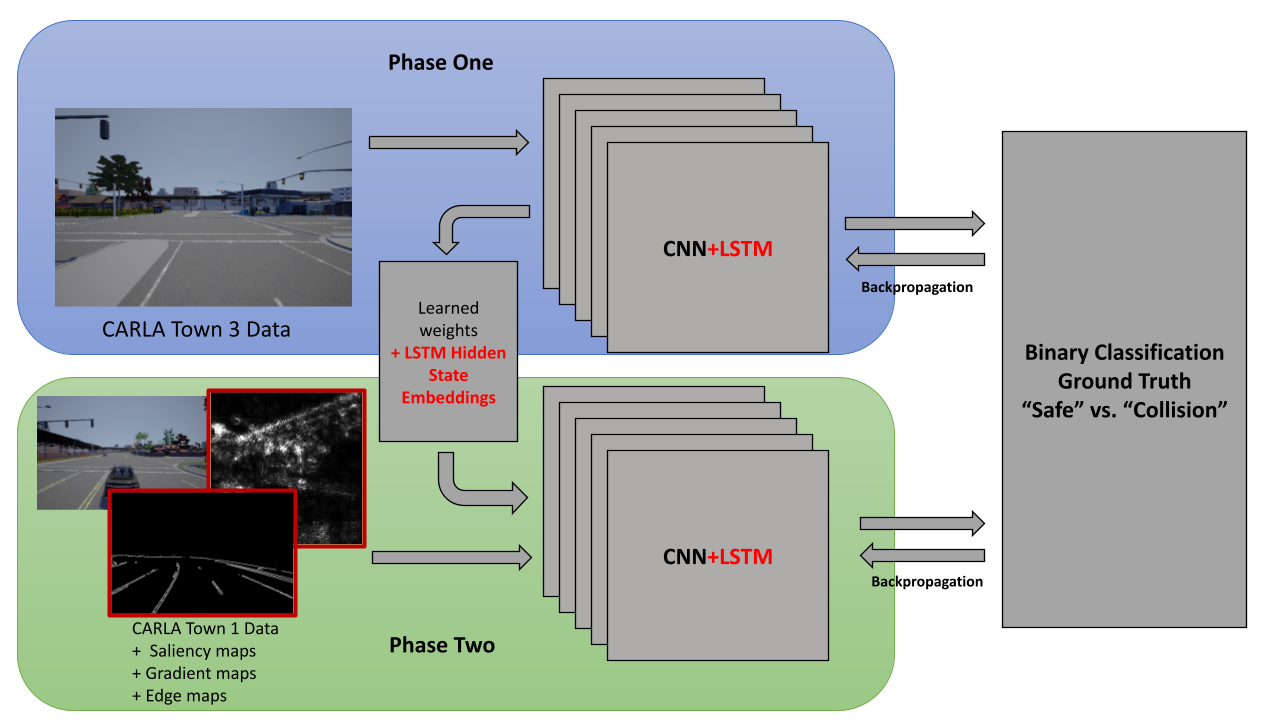

Practical learning-based autonomous driving models must be able to generalize learned behaviors from simulated to real domains, and from training data to unseen domains with unusual image properties. In this paper, we investigate transfer learning methods that achieve robustness to domain shifts by exploiting the invariance of spatio-temporal features across domains, and we propose a transfer learning method that improves cross-domain generalization through the transfer of spatio-temporal features and salient data augmentation. Our model uses a CNN-LSTM network with Inception modules for image feature extraction. The method runs in two phases: Phase 1 trains on source domain data, and Phase 2 trains on target domain data supplemented with feature maps generated by the Phase 1 model. Compared with other state-of-the-art (SOTA) methods across multiple datasets, our model significantly improves performance on unseen test cases in both simulation-to-simulation and simulation-to-real transfer, by up to +37.3% in test accuracy and up to +40.8% in steering angle prediction.
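The two-phase procedure can be illustrated with a deliberately tiny, dependency-free Python sketch. It is not the authors' implementation. A linear regressor trained by gradient descent stands in for the CNN-LSTM with Inception modules. The "feature map" is assumed to be a saliency-weighted copy of each target input (the input scaled by the normalized magnitude of the Phase 1 weights), added to the target set with the original label. The abstract does not specify how Phase 1 feature maps are combined with target data, so `saliency_map`, `two_phase_transfer`, and the toy datasets are hypothetical.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Sample = Tuple[Vector, float]  # (input features, steering angle)


def predict(w: Vector, x: Vector) -> float:
    """Linear prediction; the last weight is the bias term."""
    return sum(wi * xi for wi, xi in zip(w, x)) + w[-1]


def train(data: Sequence[Sample], w: Vector, lr: float = 0.2, epochs: int = 2000) -> Vector:
    """Full-batch gradient descent on half the mean squared error, starting from w.

    Stand-in for training the CNN-LSTM (assumption: a linear model keeps the sketch small).
    """
    w = list(w)
    n = len(data)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grad[i] += err * xi
            grad[-1] += err  # bias gradient
        w = [wi - lr * g / n for wi, g in zip(w, grad)]
    return w


def mse(w: Vector, data: Sequence[Sample]) -> float:
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


def saliency_map(w: Vector, x: Vector) -> Vector:
    """Hypothetical 'feature map': input re-weighted by normalized |weight| of the Phase 1 model."""
    scale = max(abs(wi) for wi in w[:-1]) or 1.0
    return [xi * abs(wi) / scale for wi, xi in zip(w, x)]


def two_phase_transfer(
    source: Sequence[Sample], target: Sequence[Sample]
) -> Tuple[Vector, Vector, List[Sample]]:
    # Phase 1: train on labeled source-domain data from scratch.
    w1 = train(source, [0.0] * (len(source[0][0]) + 1))
    # Phase 2 (assumed form): supplement target data with Phase 1 feature maps
    # carrying the same labels, then fine-tune starting from the Phase 1 weights.
    augmented = list(target) + [(saliency_map(w1, x), y) for x, y in target]
    w2 = train(augmented, w1)
    return w1, w2, augmented


if __name__ == "__main__":
    grid = [0.0, 0.5, 1.0]
    # Toy source domain: steering depends only on x[0]; x[1] is a distractor.
    source = [([a, b], 2.0 * a) for a in grid for b in grid]
    # Toy target domain: same task under a +0.5 "brightness" shift of every input.
    target = [([a + 0.5, b + 0.5], 2.0 * a) for a in grid for b in grid]

    w1, w2, augmented = two_phase_transfer(source, target)
    print(f"Phase 1 MSE on target: {mse(w1, target):.3f}")  # Phase 1 MSE on target: 1.000
    print(f"Phase 2 MSE on target: {mse(w2, target):.3f}")  # Phase 2 MSE on target: 0.000
```

Starting Phase 2 from the Phase 1 weights is what makes it a transfer step rather than training from scratch. In this toy setting the domain shift is a constant input offset, so the Phase 1 model mispredicts every target sample by 1.0, and Phase 2 only has to learn a new bias term.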