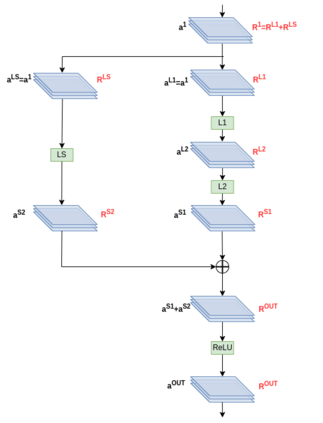

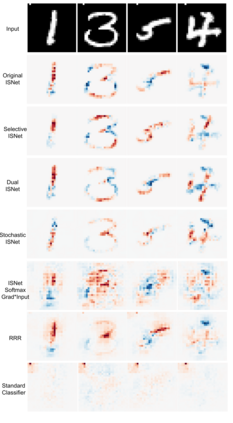

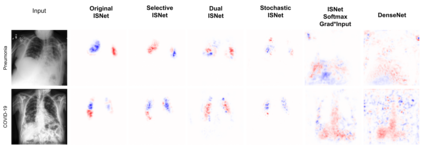

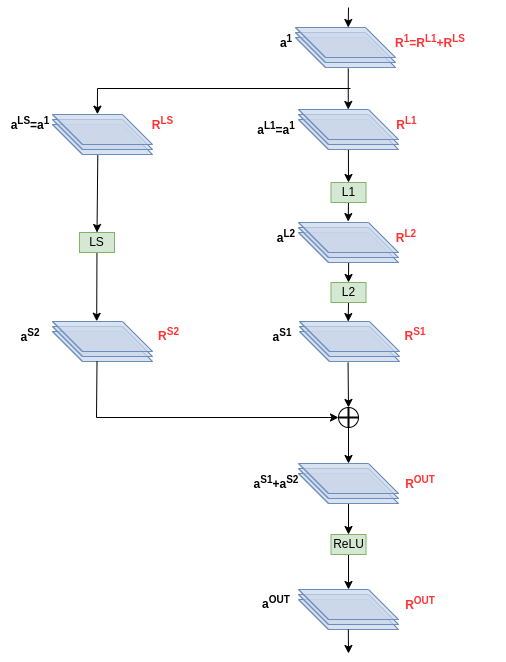

Image background features can constitute background bias (spurious correlations) and influence deep classifiers' decisions, causing shortcut learning (the Clever Hans effect) and reducing generalization to real-world data. The concept of optimizing Layer-wise Relevance Propagation (LRP) heatmaps to improve classifier behavior was recently introduced by a neural network architecture named ISNet. It minimizes background relevance in LRP maps to mitigate the influence of image background features on the classifier's decisions, hindering shortcut learning and improving generalization. For each training image, the original ISNet produces one heatmap per possible class in the classification task; hence, its training time scales linearly with the number of classes. Here, we introduce reformulated architectures whose training time is independent of this number, making the optimization process much faster. We challenged the enhanced models with the MNIST dataset containing synthetic background bias, and with COVID-19 detection in chest X-rays, an application that is prone to shortcut learning due to background bias. The trained models minimized background attention and hindered shortcut learning while retaining high accuracy. On external (out-of-distribution) test datasets, they consistently proved more accurate than multiple state-of-the-art deep neural network architectures, including a dedicated image semantic segmenter followed by a classifier. The architectures presented here represent a potentially massive improvement in training speed over the original ISNet, thus bringing LRP optimization to a range of applications that the original model could not feasibly handle.
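
To make the idea of "background relevance" concrete, the sketch below computes the share of a heatmap's absolute relevance that falls outside a foreground mask. It is only an illustration under stated assumptions: the function name `background_relevance_fraction`, the nested-list heatmap format, and the `eps` parameter are hypothetical, and this quantity is not the ISNet loss, which the abstract does not specify.

```python
def background_relevance_fraction(heatmap, foreground_mask, eps=1e-12):
    """Share of total absolute relevance located on background pixels.

    heatmap: 2D list of signed relevance values (e.g. one LRP map).
    foreground_mask: 2D list of the same shape, truthy on the foreground.
    Both formats are assumptions made for this illustration.
    """
    background = 0.0
    total = 0.0
    for heat_row, mask_row in zip(heatmap, foreground_mask):
        for relevance, is_foreground in zip(heat_row, mask_row):
            magnitude = abs(relevance)  # sign is ignored; only the amount counts
            total += magnitude
            if not is_foreground:
                background += magnitude
    # eps avoids division by zero for an all-zero heatmap
    return background / (total + eps)


# Toy 2x2 example: the left column is foreground, the right column is background.
toy_heatmap = [[0.5, -0.1],
               [0.3, 0.1]]
toy_mask = [[1, 0],
            [1, 0]]
toy_fraction = background_relevance_fraction(toy_heatmap, toy_mask)
```

A value near 0 means the explanation concentrates on the foreground, while a value near 1 means the classifier's evidence lies mostly in the background. If one such heatmap were produced per class, as the abstract describes for the original ISNet, the number of heatmaps per image would grow with the number of classes.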
