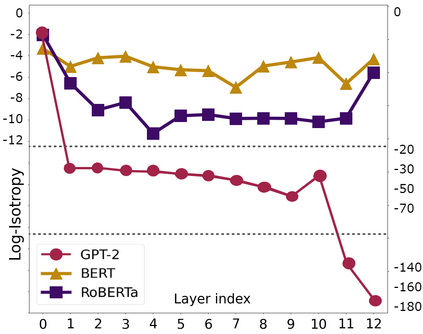

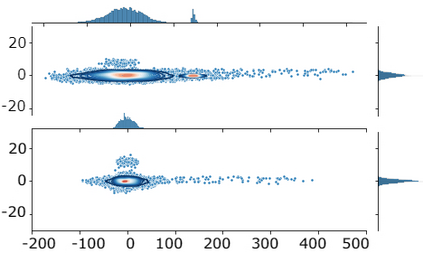

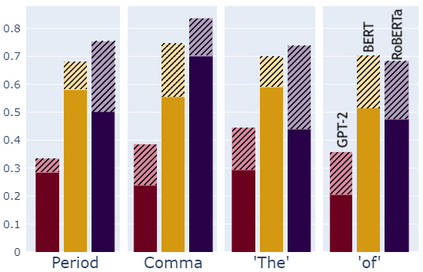

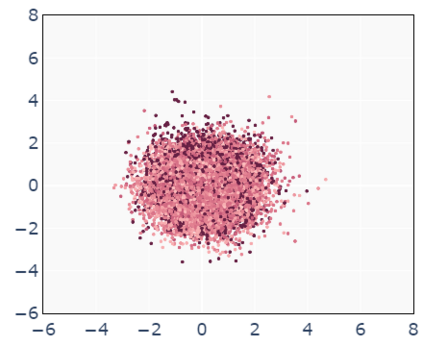

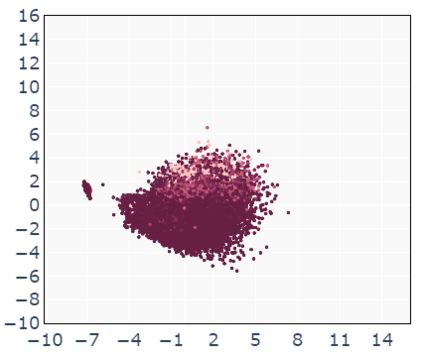

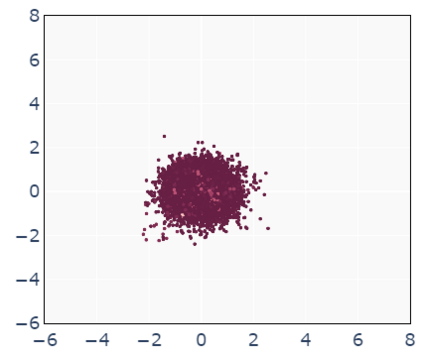

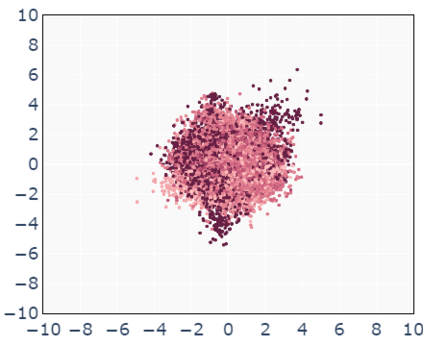

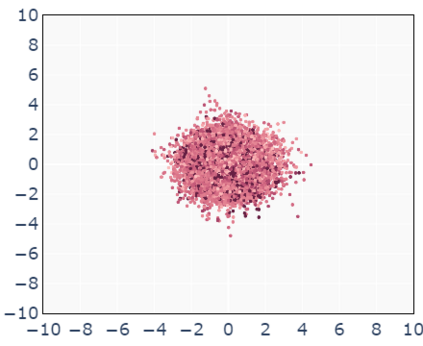

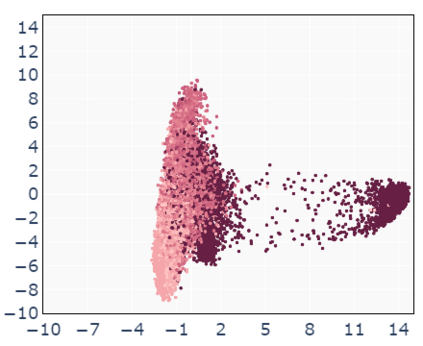

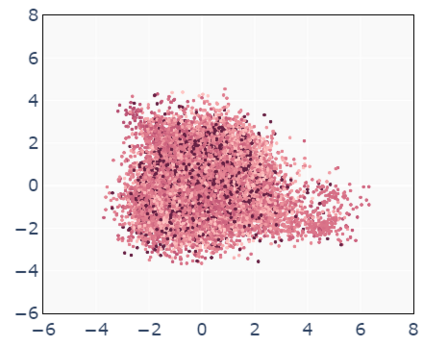

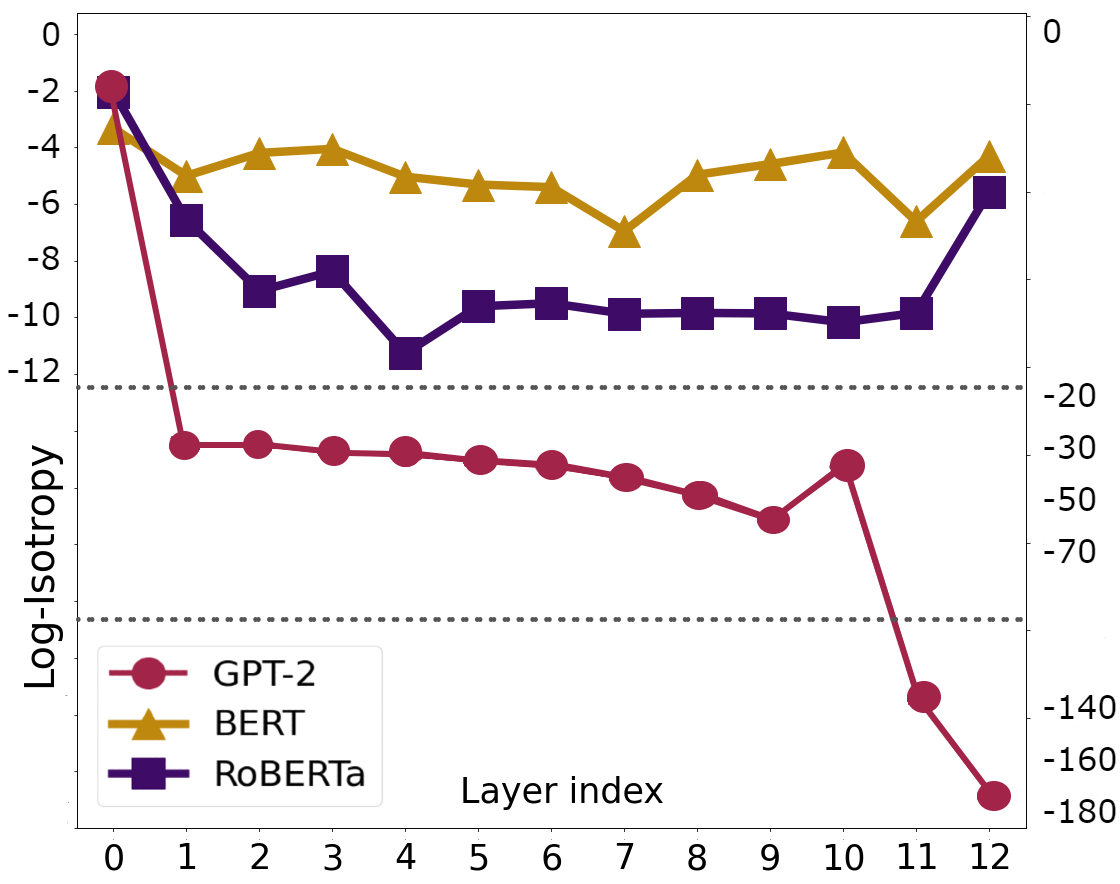

The representation degeneration problem in Contextual Word Representations (CWRs) hurts the expressiveness of the embedding space by forming an anisotropic cone in which even unrelated words have excessively positive correlations. Existing techniques for tackling this issue require a learning process to re-train models with additional objectives, and they mostly employ a global assessment to study isotropy. Our quantitative analysis of isotropy shows that a local assessment could be more accurate due to the clustered structure of CWRs. Based on this observation, we propose a local cluster-based method to address the degeneration issue in contextual embedding spaces. We show that in clusters containing punctuation and stop words, local dominant directions encode structural information, and removing these directions can improve the performance of CWRs on semantic tasks. Moreover, we find that tense information in verb representations dominates sense semantics, and we show that removing the dominant directions of verb representations can transform the space to better suit semantic applications. Our experiments demonstrate that the proposed cluster-based method can mitigate the degeneration problem on multiple tasks.
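The local, cluster-based idea described above can be illustrated with a minimal NumPy sketch. It assumes k-means for clustering and PCA (via SVD) for finding each cluster's dominant directions, and it uses mean pairwise cosine similarity as a rough isotropy proxy. The function names, the number of clusters `k`, the number of removed directions `n_dirs`, and the synthetic data are all hypothetical choices for illustration, not the authors' implementation or settings.

```python
import numpy as np


def kmeans(x, k, n_iter=50, seed=0):
    """Plain Lloyd's k-means (assumed clustering step); one label per row of x."""
    rng = np.random.default_rng(seed)
    centers = x[rng.choice(len(x), size=k, replace=False)]  # copy, not a view
    for _ in range(n_iter):
        # Squared Euclidean distance from every point to every center.
        dists = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
        labels = dists.argmin(axis=1)
        for c in range(k):
            members = x[labels == c]
            if len(members) > 0:  # keep the old center if a cluster empties
                centers[c] = members.mean(axis=0)
    return labels


def remove_local_dominant_directions(x, labels, n_dirs=1):
    """Hypothetical helper: per cluster, subtract the cluster mean, then
    project out that cluster's top `n_dirs` principal directions."""
    out = np.empty_like(x)
    for c in np.unique(labels):
        idx = labels == c
        centered = x[idx] - x[idx].mean(axis=0)
        # Rows of vt are principal directions, ordered by singular value.
        _, _, vt = np.linalg.svd(centered, full_matrices=False)
        top = vt[:n_dirs]  # shape (n_dirs, dim)
        out[idx] = centered - centered @ top.T @ top
    return out


def mean_pairwise_cosine(x):
    """Average cosine similarity over distinct pairs; near 0 suggests isotropy."""
    norms = np.maximum(np.linalg.norm(x, axis=1, keepdims=True), 1e-12)
    unit = x / norms
    sims = unit @ unit.T
    n = len(x)
    return (sims.sum() - np.trace(sims)) / (n * (n - 1))


# Synthetic "embeddings": 3 clusters sharing a large common offset (a cone).
rng = np.random.default_rng(0)
common = np.full(16, 6.0)
cluster_means = rng.normal(scale=3.0, size=(3, 16))
x = np.concatenate([common + m + rng.normal(size=(100, 16)) for m in cluster_means])

labels = kmeans(x, k=3)
cleaned = remove_local_dominant_directions(x, labels, n_dirs=1)
before, after = mean_pairwise_cosine(x), mean_pairwise_cosine(cleaned)
print(f"mean cosine before: {before:.3f}, after: {after:.3f}")
```

Because each cluster is centered and cleaned independently, a direction that dominates one cluster can be removed without affecting the others; a single global centering and projection step cannot make that distinction. On real CWRs, the cluster count and the number of removed directions would need tuning, and the paper's own isotropy measure may differ from the cosine proxy used here.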
