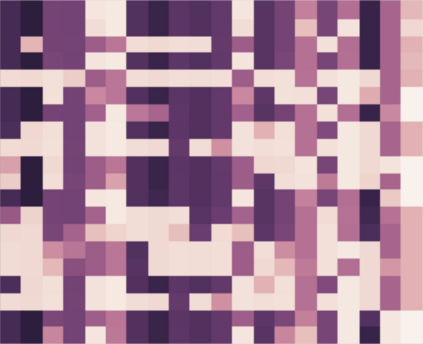

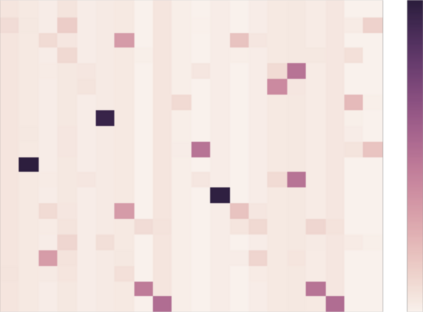

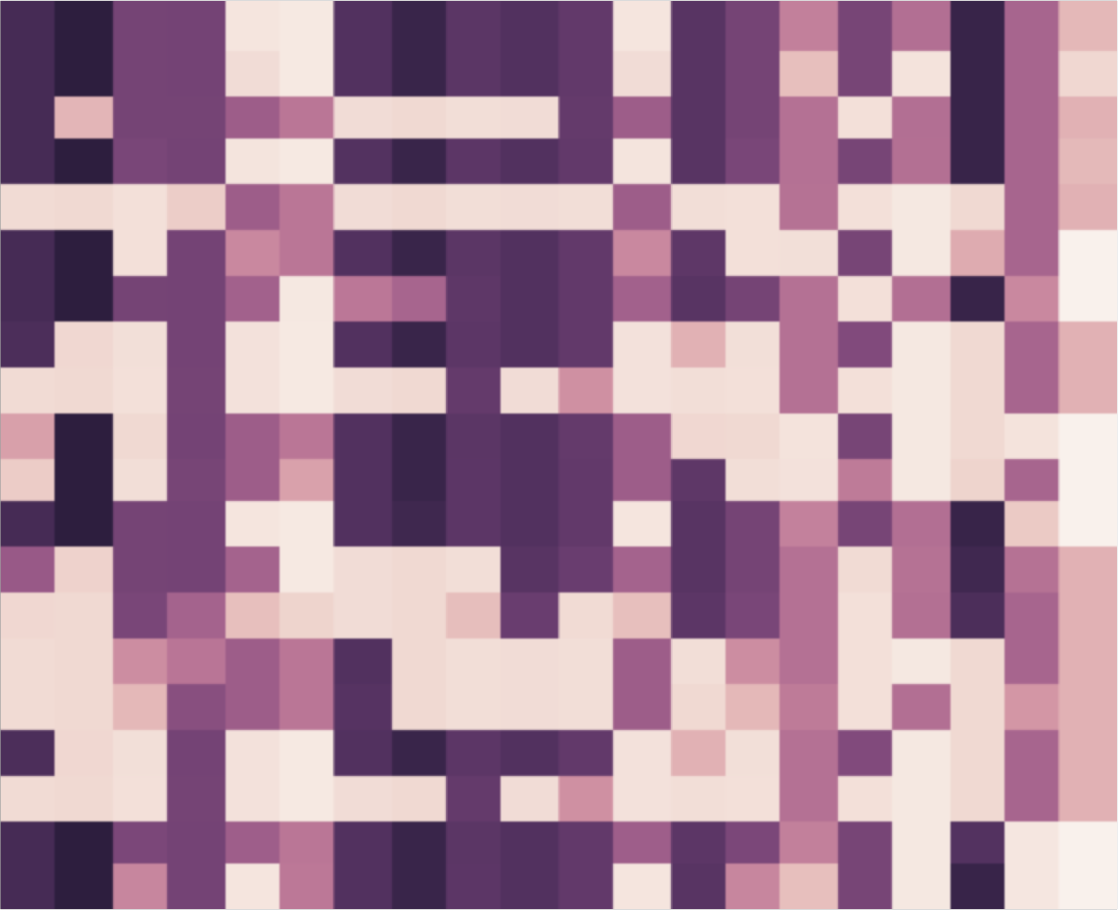

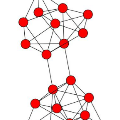

Constructing highly informative network embeddings is an important tool for network analysis. Such embeddings encode network topology, along with other useful side information, into low-dimensional node-based feature representations that can be exploited by statistical modeling. This work focuses on learning context-aware network embeddings augmented with text data. We reformulate the network-embedding problem and present two novel strategies to improve over traditional attention mechanisms: (i) a content-aware sparse attention module based on optimal transport, and (ii) a high-level attention parsing module. Our approach yields naturally sparse and self-normalized relational inference. It can capture long-term interactions between sequences, thus addressing the challenges faced by existing textual network embedding schemes. Extensive experiments demonstrate that our model consistently outperforms alternative state-of-the-art methods.
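To make the idea of attention based on optimal transport concrete, the following is a minimal sketch, not the authors' implementation. The abstract does not specify the cost function, the marginals, or the solver, so everything here is an assumption chosen for illustration: a cosine-distance cost between word vectors, uniform marginals, and entropic-regularized Sinkhorn iterations. The function names `cosine_cost` and `sinkhorn_plan` are hypothetical. The resulting transport plan plays the role of an attention matrix between two text sequences: its entries are non-negative and sum to one (self-normalized), and with a small `eps` most of the mass concentrates on a few well-matched word pairs (approximately sparse, since the entropic plan is never exactly zero).

```python
import math

def cosine_cost(x, y):
    """Cost matrix C[i][j] = 1 - cos(x_i, y_j) for two sequences of vectors."""
    def norm(v):
        # Fall back to 1.0 for an all-zero vector to avoid division by zero.
        return math.sqrt(sum(a * a for a in v)) or 1.0
    return [[1.0 - sum(a * b for a, b in zip(xi, yj)) / (norm(xi) * norm(yj))
             for yj in y] for xi in x]

def sinkhorn_plan(cost, eps=0.1, n_iters=200):
    """Entropic-regularized transport plan between uniform marginals.

    Assumption: Sinkhorn is used here only as a simple, well-known solver.
    """
    n, m = len(cost), len(cost[0])
    a = [1.0 / n] * n                      # uniform mass on source tokens
    b = [1.0 / m] * m                      # uniform mass on target tokens
    K = [[math.exp(-c / eps) for c in row] for row in cost]  # Gibbs kernel
    u, v = [1.0] * n, [1.0] * m
    for _ in range(n_iters):
        # Alternately rescale rows and columns to match the marginals.
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]

# Toy word vectors for two short "sentences" (made-up values).
x = [[1.0, 0.0], [0.0, 1.0]]
y = [[0.9, 0.1], [0.1, 0.9], [0.7, 0.7]]
plan = sinkhorn_plan(cosine_cost(x, y))
for row in plan:
    print([round(p, 4) for p in row])
```

In this toy case, each source token sends most of its mass to the target token pointing in nearly the same direction, and very little to the dissimilar one. Lowering `eps` sharpens the plan further, at the cost of slower convergence and a higher risk of numerical underflow in the kernel.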
