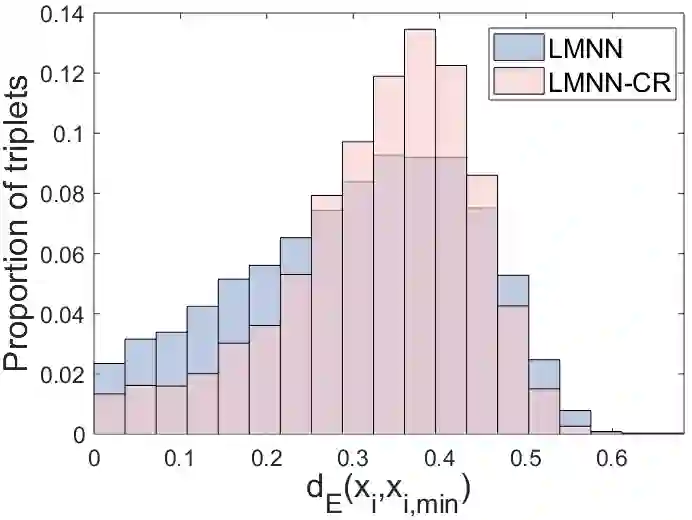

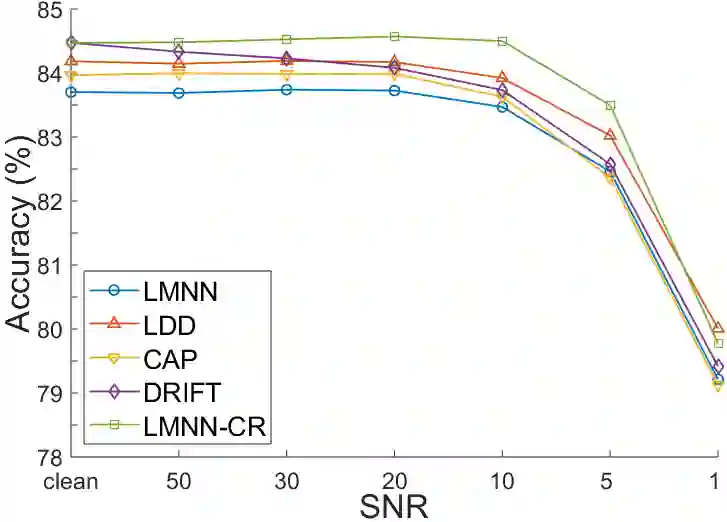

Metric learning aims to learn a distance metric under which semantically similar instances are pulled together and dissimilar instances are pushed apart. Many existing methods maximize, or at least constrain, a distance "margin" that separates similar and dissimilar pairs of instances in order to guarantee performance on a subsequent k-nearest neighbor classifier. However, such a margin in the feature space does not necessarily lead to certified robustness, or even to the anticipated generalization advantage, since a small perturbation of a test instance in the instance space can still alter the model prediction. To address this problem, we advocate penalizing small distances between training instances and their nearest adversarial examples, and we show that the resulting approach to metric learning enjoys a larger certified neighborhood with theoretical performance guarantees. Moreover, drawing on an intuitive geometric insight, the proposed loss term admits an analytically elegant closed-form solution and can be flexibly combined with existing metric learning methods. Extensive experiments demonstrate the superiority of the proposed method over state-of-the-art methods in terms of both discrimination accuracy and robustness to noise.
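The geometric idea behind the distance to the nearest adversarial example can be illustrated with a minimal sketch. It rests on assumptions not stated in the abstract: the learned metric is a Mahalanobis distance with a positive semidefinite matrix `M`, perturbations are measured in the Euclidean norm, and an adversarial example is a point at which a dissimilar instance becomes as close as a similar one. Under these assumptions the boundary is a hyperplane, so the distance has a closed form. The function names and the toy data are hypothetical and are not taken from the paper.

```python
import numpy as np

def sq_mahalanobis(x, y, M):
    """Squared Mahalanobis distance (x - y)^T M (x - y)."""
    d = x - y
    return float(d @ M @ d)

def boundary_normal(x_sim, x_dis, M):
    """Gradient of f(x) = d_M^2(x, x_dis) - d_M^2(x, x_sim), which is linear in x."""
    return 2.0 * M @ (x_sim - x_dis)

def adversarial_margin(x, x_sim, x_dis, M):
    """Signed Euclidean distance from x to the hyperplane f(x) = 0.

    Positive when x is closer (under M) to the similar instance.
    """
    gap = sq_mahalanobis(x, x_dis, M) - sq_mahalanobis(x, x_sim, M)
    w = boundary_normal(x_sim, x_dis, M)
    norm = np.linalg.norm(w)
    if norm == 0.0:  # degenerate case: M cannot tell the two neighbors apart
        return 0.0
    return gap / norm

def nearest_adversarial(x, x_sim, x_dis, M):
    """Orthogonal projection of x onto the hyperplane f(x) = 0."""
    gap = sq_mahalanobis(x, x_dis, M) - sq_mahalanobis(x, x_sim, M)
    w = boundary_normal(x_sim, x_dis, M)
    return x - (gap / float(w @ w)) * w

# Toy data (hypothetical): Euclidean metric, points on a line.
M = np.eye(2)
x = np.array([0.0, 0.0])
x_sim = np.array([1.0, 0.0])
x_dis = np.array([3.0, 0.0])

margin = adversarial_margin(x, x_sim, x_dis, M)
x_adv = nearest_adversarial(x, x_sim, x_dis, M)
```

In this toy case the boundary is the perpendicular bisector of the two neighbors, so the margin equals 2 and the nearest adversarial example is the point (2, 0). A hinge-style penalty such as `max(0, tau - margin)`, with a hypothetical threshold `tau`, is one way such a term could be added to an existing metric learning loss.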
