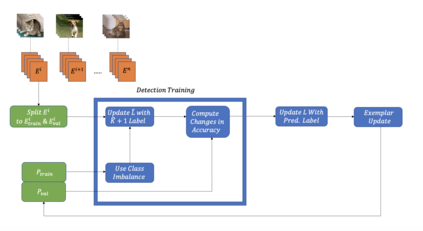

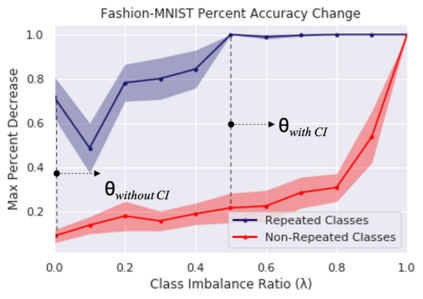

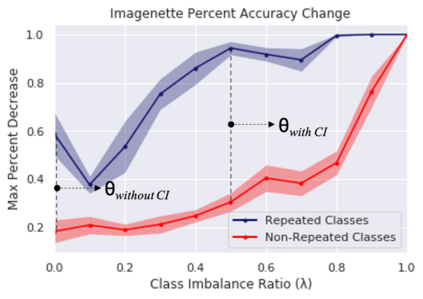

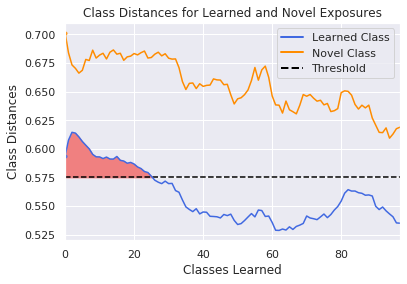

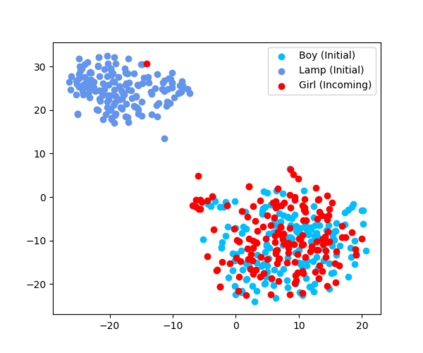

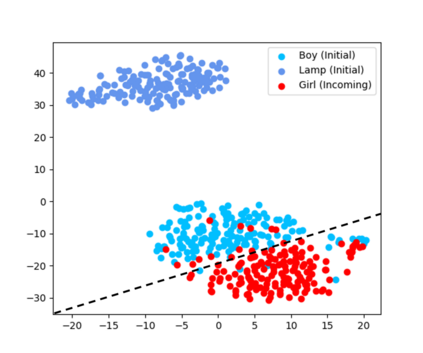

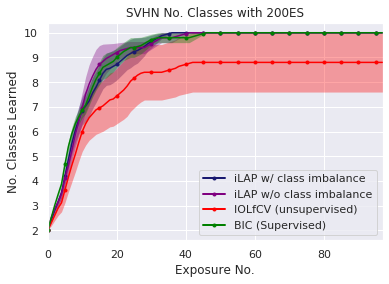

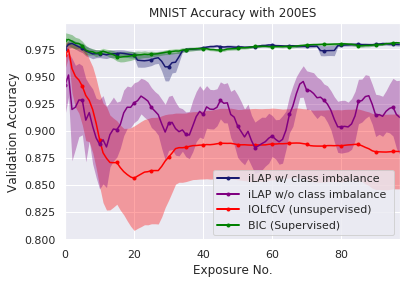

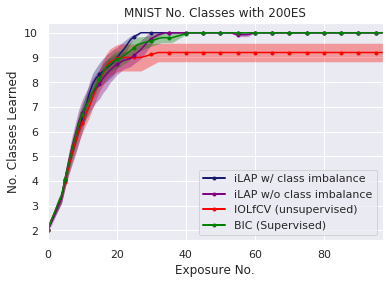

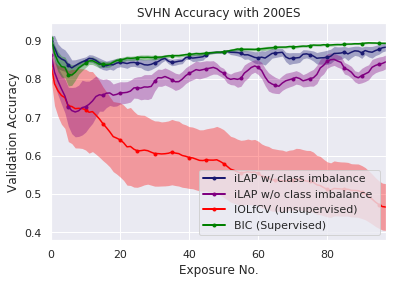

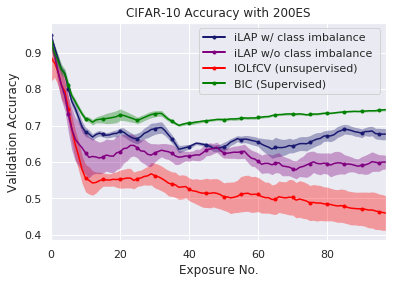

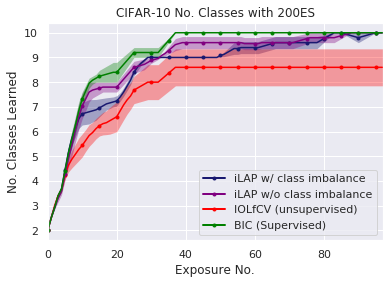

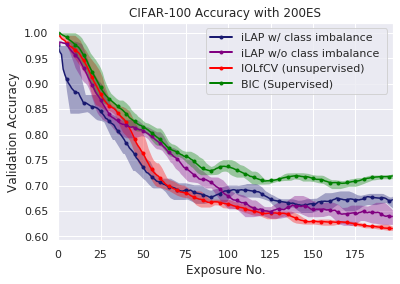

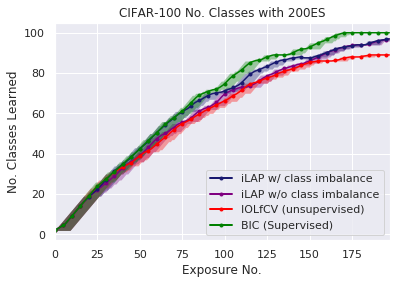

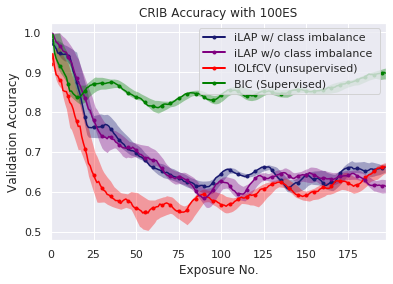

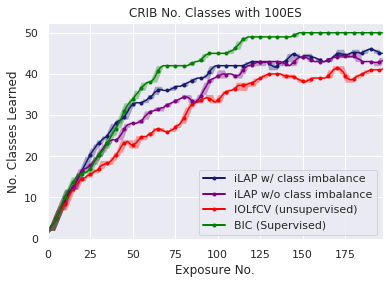

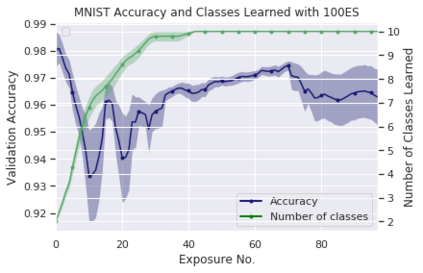

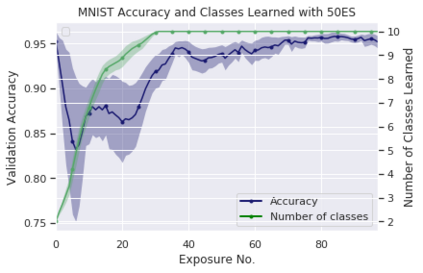

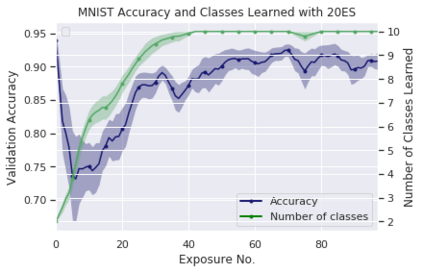

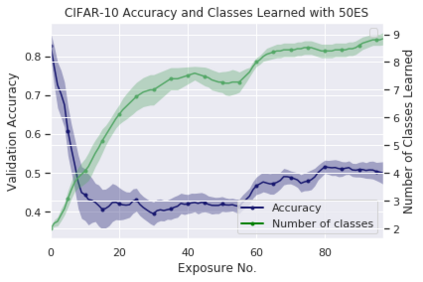

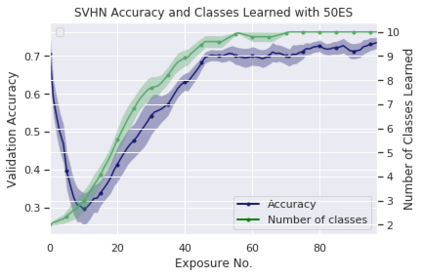

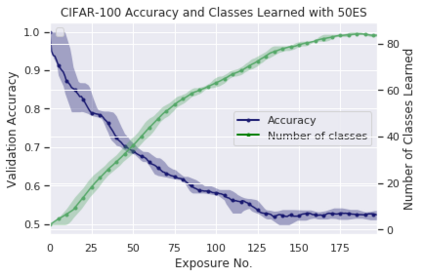

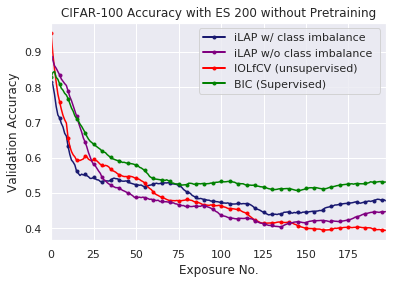

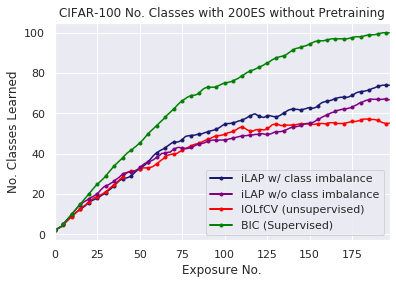

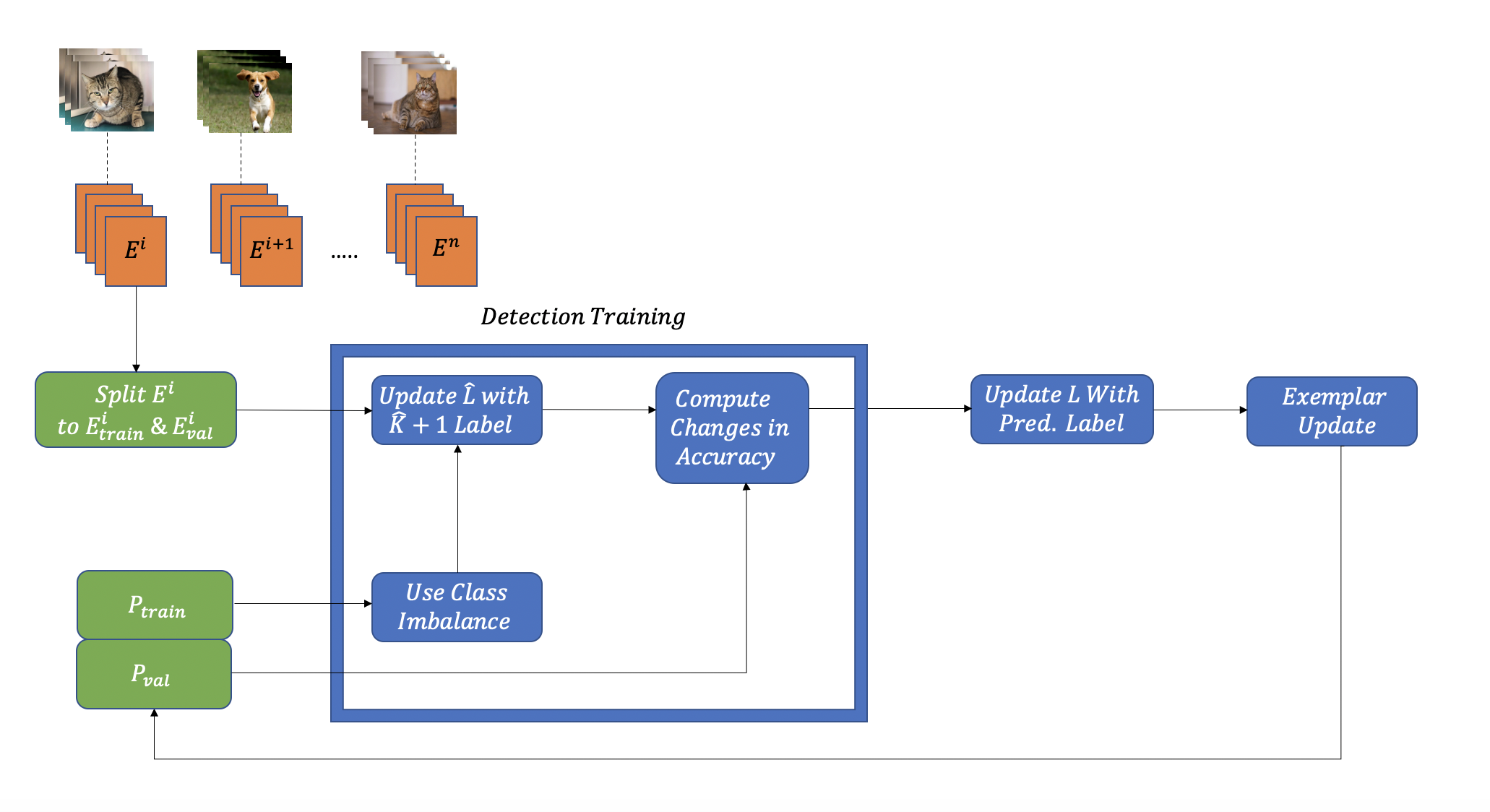

While many Continual Learning methods have shown promising results in mitigating catastrophic forgetting, they rely on supervised training. To learn successfully in a label-agnostic incremental setting, a model must distinguish between learned and novel classes so that it can properly include samples for training. We introduce a novelty detection method that leverages the network confusion caused by training on incoming data as a new class. We found that incorporating a class imbalance during this detection step substantially enhances performance. We demonstrate the effectiveness of our approach on a set of image classification benchmarks: MNIST, SVHN, CIFAR-10, CIFAR-100, and CRIB.
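The confusion-based idea can be illustrated with a small toy sketch. This is not the authors' implementation: the linear softmax classifier, the 2-D Gaussian blobs, the held-out error used as the "confusion" measure, the 5:1 imbalance in favor of the incoming batch, and the names `confusion_score` and `NOVELTY_THRESHOLD` are all assumptions made for illustration. The sketch trains on stored exemplars plus an incoming batch labeled as a new class, then checks how often held-out samples of known classes are misclassified. If the incoming batch actually belongs to a known class, that class should be absorbed by the new label and confusion should rise.

```python
import math
import random


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scores_for(w, x):
    feats = x + [1.0]  # append a constant bias feature
    return [sum(wj * fj for wj, fj in zip(wc, feats)) for wc in w]


def train_softmax(xs, ys, n_classes, lr=0.2, steps=300):
    """Fit a linear softmax classifier with full-batch gradient descent."""
    dim = len(xs[0]) + 1
    w = [[0.0] * dim for _ in range(n_classes)]
    for _ in range(steps):
        grad = [[0.0] * dim for _ in range(n_classes)]
        for x, y in zip(xs, ys):
            probs = softmax(scores_for(w, x))
            feats = x + [1.0]
            for c in range(n_classes):
                err = probs[c] - (1.0 if c == y else 0.0)
                for j in range(dim):
                    grad[c][j] += err * feats[j]
        for c in range(n_classes):
            for j in range(dim):
                w[c][j] -= lr * grad[c][j] / len(xs)
    return w


def confusion_score(exemplars, held_out, incoming, n_known):
    """Train with `incoming` labeled as class `n_known` and return the
    error rate on held-out samples of the known classes."""
    xs = [x for x, _ in exemplars] + incoming
    ys = [y for _, y in exemplars] + [n_known] * len(incoming)
    w = train_softmax(xs, ys, n_known + 1)
    wrong = 0
    for x, y in held_out:
        s = scores_for(w, x)
        if s.index(max(s)) != y:
            wrong += 1
    return wrong / len(held_out)


def blob(rng, center, n, std=0.3):
    """Draw n points from an isotropic 2-D Gaussian (toy stand-in for images)."""
    return [[rng.gauss(center[0], std), rng.gauss(center[1], std)] for _ in range(n)]


rng = random.Random(0)
centers = [(-2.0, 0.0), (2.0, 0.0)]  # two known classes (hypothetical data)
exemplars = [(x, c) for c, ctr in enumerate(centers) for x in blob(rng, ctr, 10)]
held_out = [(x, c) for c, ctr in enumerate(centers) for x in blob(rng, ctr, 30)]

# Assumed imbalance: 50 incoming samples versus 10 exemplars per known class.
familiar_batch = blob(rng, centers[0], 50)   # really belongs to known class 0
novel_batch = blob(rng, (0.0, 3.0), 50)      # drawn from an unseen class

NOVELTY_THRESHOLD = 0.25  # hypothetical cut-off, chosen for this toy only
familiar_confusion = confusion_score(exemplars, held_out, familiar_batch, 2)
novel_confusion = confusion_score(exemplars, held_out, novel_batch, 2)
familiar_is_novel = familiar_confusion < NOVELTY_THRESHOLD
novel_is_novel = novel_confusion < NOVELTY_THRESHOLD
print(familiar_confusion, familiar_is_novel)
print(novel_confusion, novel_is_novel)
```

In this toy, the imbalance matters because the oversized incoming batch dominates the region it shares with a known class, so held-out samples of that class flip to the new label. A truly novel batch occupies its own region and leaves the known classes largely intact.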
