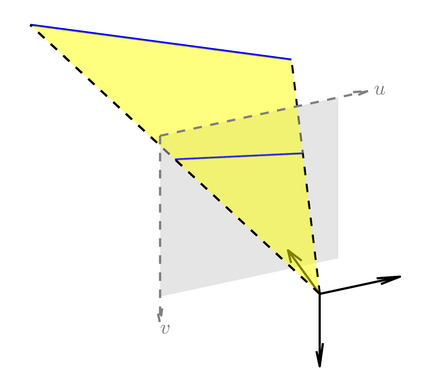

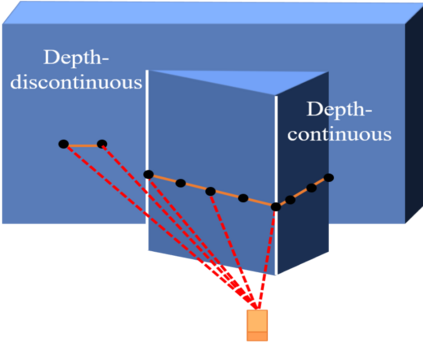

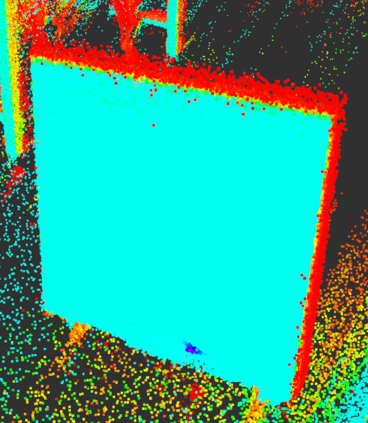

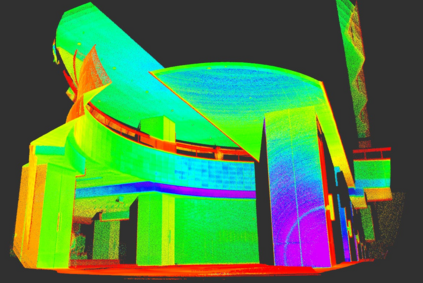

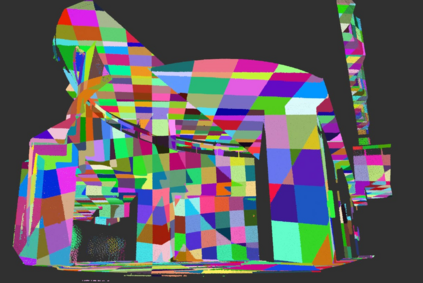

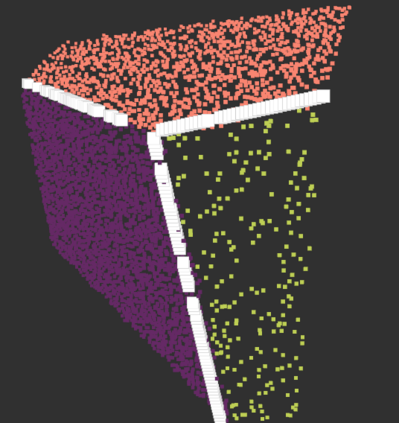

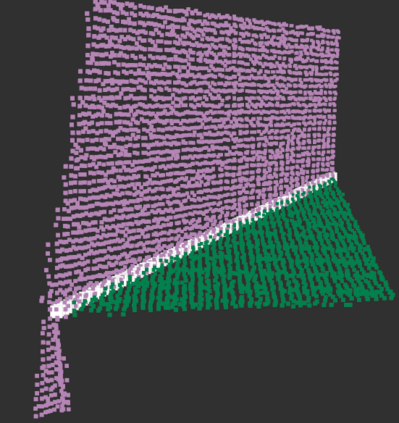

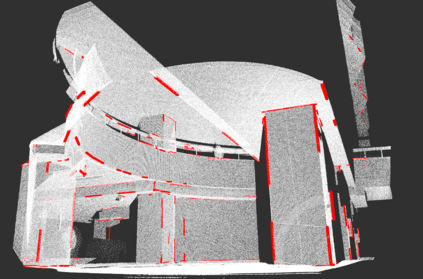

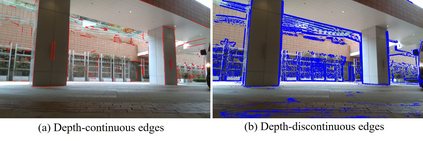

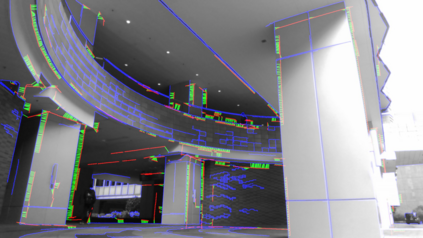

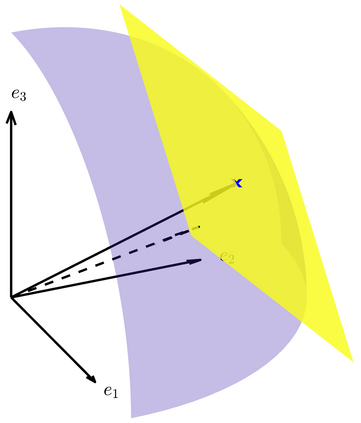

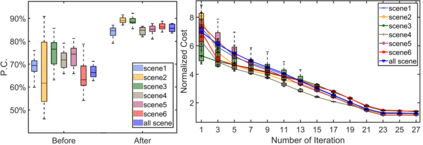

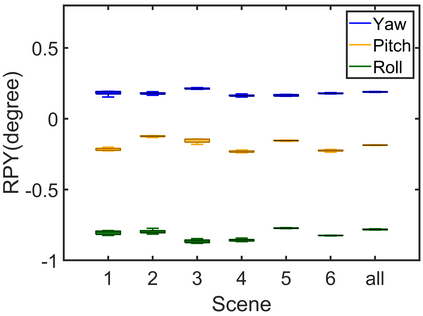

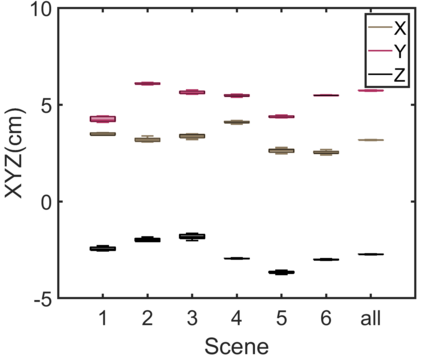

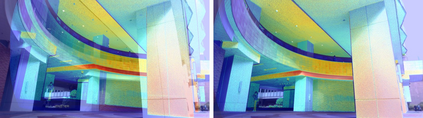

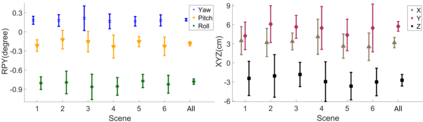

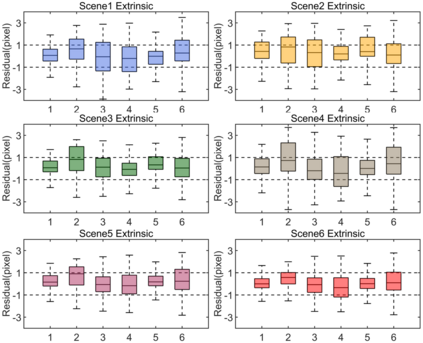

In this letter, we present a novel method for automatic extrinsic calibration of high-resolution LiDARs and RGB cameras in targetless environments. Our approach requires no checkerboard, yet achieves pixel-level accuracy by aligning natural edge features observed by the two sensors. At the theoretical level, we analyze the constraints imposed by edge features and the sensitivity of calibration accuracy to the distribution of edges in the scene. At the implementation level, we carefully investigate the physical measuring principles of LiDARs and propose an efficient and accurate LiDAR edge extraction method based on point cloud voxel cutting and plane fitting. Because edges are abundant in natural scenes, we were able to carry out experiments in many indoor and outdoor scenes. The results show that the method is robust, accurate, and consistent, and that it can support research on and applications of LiDAR-camera fusion. We have open-sourced our code on GitHub to benefit the community.
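The abstract names two building blocks for LiDAR edge extraction, voxel cutting and plane fitting, without giving details. The following is a minimal sketch of what those two steps could look like, not the authors' implementation: the function names `voxelize` and `fit_plane`, the cubic voxel grid, and the SVD-based least-squares fit are all assumptions made for illustration.

```python
import numpy as np

def voxelize(points, voxel_size):
    """Group an (N, 3) point array by the integer index of the cubic
    voxel that contains each point (hypothetical helper)."""
    keys = np.floor(points / voxel_size).astype(np.int64)
    voxels = {}
    for key, p in zip(map(tuple, keys), points):
        voxels.setdefault(key, []).append(p)
    return {k: np.asarray(v) for k, v in voxels.items()}

def fit_plane(points):
    """Least-squares plane through an (M, 3) point array, M >= 3.

    Returns the unit normal, the centroid, and the RMS point-to-plane
    distance. The normal is the right singular vector associated with
    the smallest singular value of the centered points.
    """
    centroid = points.mean(axis=0)
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    normal = vt[-1]
    rms = np.sqrt(np.mean(((points - centroid) @ normal) ** 2))
    return normal, centroid, rms

if __name__ == "__main__":
    # Four coplanar points (z = 0) in one voxel, plus one point in a neighbor.
    pts = np.array([
        [0.1, 0.1, 0.0],
        [0.9, 0.2, 0.0],
        [0.3, 0.8, 0.0],
        [0.5, 0.5, 0.0],
        [1.5, 0.1, 0.0],
    ])
    voxels = voxelize(pts, voxel_size=1.0)
    normal, centroid, rms = fit_plane(voxels[(0, 0, 0)])
    print(len(voxels), normal, rms)
```

A small RMS residual indicates that the points in a voxel are well described by a single plane; how planes are then turned into edges is not specified in the abstract and is left out of this sketch.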
