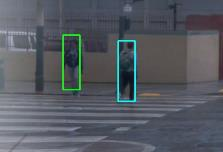

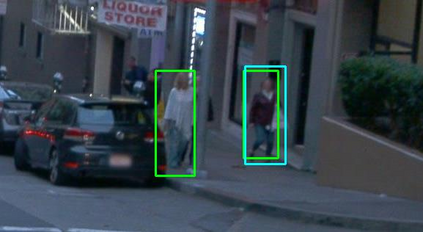

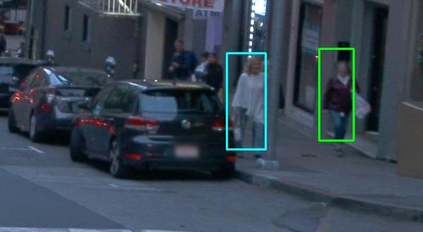

This paper presents a novel method for pedestrian detection and tracking that fuses camera and LiDAR sensor data. To address the challenges of autonomous driving scenarios, we propose an integrated detection and tracking framework. In the detection phase, LiDAR streams are converted to computationally tractable depth images, and a deep neural network identifies pedestrian candidates in both the RGB and depth images. Detection accuracy is further improved by fusing the multi-modal sensor information with a Kalman filter. The tracking phase combines Kalman filter prediction with an optical flow algorithm to track multiple pedestrians in a scene. We evaluate the framework on a real public driving dataset. Experimental results show a significant performance improvement over a baseline that relies solely on image-based pedestrian detection.
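As an illustration of the Kalman-filter fusion step mentioned in the abstract, the following is a minimal sketch and not the paper's implementation. It assumes a constant-velocity model for the pedestrian's image-plane center, one independent filter per axis, and sequential updates from a camera detection and a LiDAR-derived detection. The names `AxisKalman` and `fuse_step`, and the noise values `q`, `r_camera`, and `r_lidar`, are hypothetical choices made for this example; the abstract does not specify the state vector or the noise parameters, and the optical flow component is not shown.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class AxisKalman:
    """Constant-velocity Kalman filter for one axis (state: position, velocity).

    Hypothetical helper for illustration; not taken from the paper.
    """
    pos: float
    vel: float = 0.0
    # Entries of the symmetric 2x2 covariance P = [[p00, p01], [p01, p11]].
    p00: float = 100.0
    p01: float = 0.0
    p11: float = 100.0

    def predict(self, dt: float, q: float = 1.0) -> None:
        """Propagate by dt with F = [[1, dt], [0, 1]] and Q = diag(0, q).

        Q = diag(0, q) is a simplifying assumption for this sketch.
        """
        self.pos += dt * self.vel
        p00 = self.p00 + 2.0 * dt * self.p01 + dt * dt * self.p11
        p01 = self.p01 + dt * self.p11
        p11 = self.p11 + q
        self.p00, self.p01, self.p11 = p00, p01, p11

    def update(self, z: float, r: float) -> None:
        """Fold in a position measurement z with variance r (H = [1, 0])."""
        s = self.p00 + r                      # innovation variance
        k0, k1 = self.p00 / s, self.p01 / s   # Kalman gain
        innovation = z - self.pos
        self.pos += k0 * innovation
        self.vel += k1 * innovation
        # P = (I - K H) P, written out for the 2x2 case.
        p00 = (1.0 - k0) * self.p00
        p01 = (1.0 - k0) * self.p01
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p11 = p00, p01, p11


Point = Tuple[float, float]


def fuse_step(
    track_x: AxisKalman,
    track_y: AxisKalman,
    dt: float,
    camera_xy: Optional[Point] = None,
    lidar_xy: Optional[Point] = None,
    r_camera: float = 4.0,   # assumed camera measurement variance
    r_lidar: float = 1.0,    # assumed LiDAR (depth image) measurement variance
) -> Point:
    """Predict, then update with whichever sensor detections are available."""
    track_x.predict(dt)
    track_y.predict(dt)
    for measurement, r in ((camera_xy, r_camera), (lidar_xy, r_lidar)):
        if measurement is not None:
            track_x.update(measurement[0], r)
            track_y.update(measurement[1], r)
    return track_x.pos, track_y.pos


if __name__ == "__main__":
    kx, ky = AxisKalman(pos=100.0), AxisKalman(pos=50.0)
    # Both sensors report slightly different centers for the same pedestrian.
    print(fuse_step(kx, ky, dt=0.1, camera_xy=(104.0, 52.0), lidar_xy=(102.0, 51.0)))
    # No detection in the next frame: the track coasts on the prediction alone.
    print(fuse_step(kx, ky, dt=0.1))
```

In this sketch the fused estimate lands between the two detections and closer to the sensor with the smaller assumed variance. When neither sensor produces a detection, the track is carried forward by the prediction step alone; that is the gap which, in the framework described above, the optical flow algorithm is combined with the Kalman prediction to cover.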
