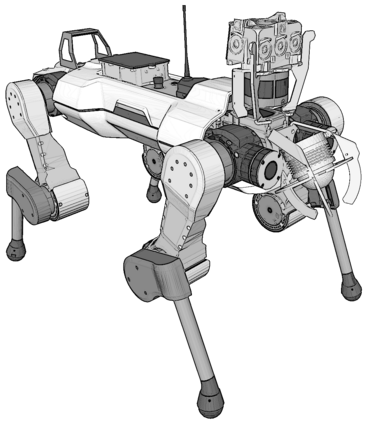

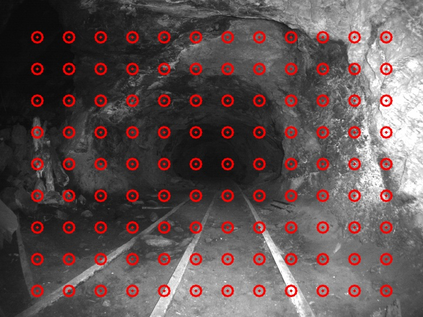

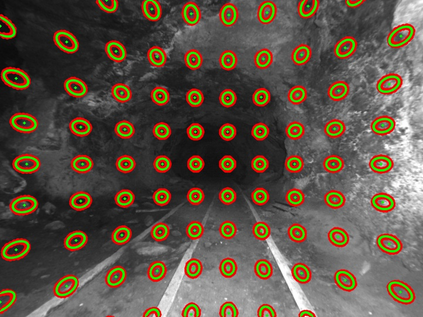

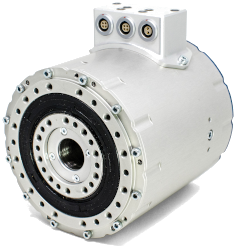

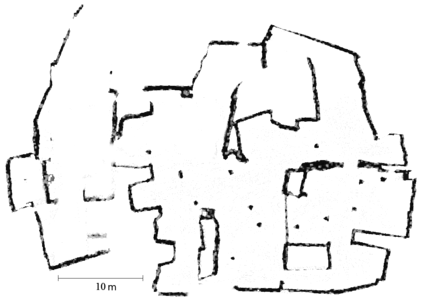

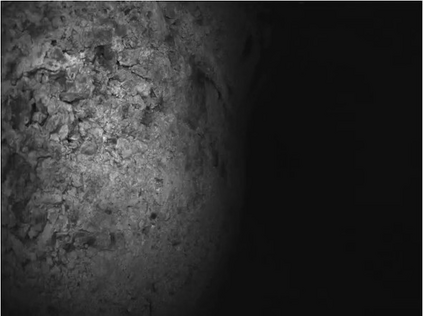

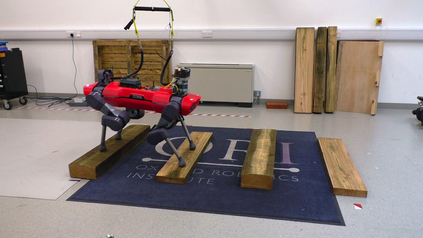

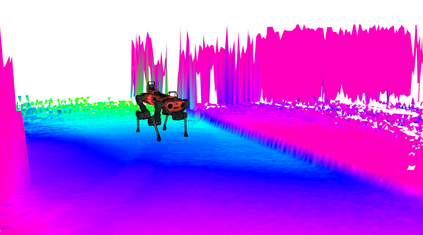

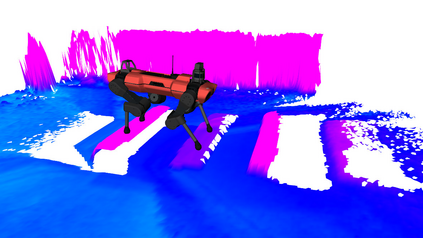

We present VILENS (Visual Inertial Lidar Legged Navigation System), an odometry system for legged robots based on factor graphs. The key novelty is the tight fusion of four different sensor modalities to achieve reliable operation when the individual sensors would otherwise produce degenerate estimation. To minimize leg odometry drift, we extend the robot's state with a linear velocity bias term which is estimated online. This bias is only observable because of the tight fusion of this preintegrated velocity factor with vision, lidar, and IMU factors. Extensive experimental validation on the ANYmal quadruped robots is presented, for a total duration of 2 h and 1.8 km traveled. The experiments involved dynamic locomotion over loose rocks, slopes, and mud; these included perceptual challenges, such as dark and dusty underground caverns or open, feature-deprived areas, as well as mobility challenges such as slipping and terrain deformation. We show an average improvement of 62% in translational error and 51% in rotational error compared to a state-of-the-art loosely coupled approach. To demonstrate its robustness, VILENS was also integrated with a perceptive controller and a local path planner.
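The observability claim can be illustrated with a toy 1D sketch. This is not the VILENS factor graph: it only assumes that leg odometry reports a velocity corrupted by a constant bias, and that an independent displacement measurement (standing in for the vision or lidar factors) is available over the same intervals. Under those assumptions the bias has a closed-form least-squares estimate. All names (`estimate_velocity_bias`, `simulate`) and all numeric values are hypothetical and chosen for illustration only.

```python
import random


def estimate_velocity_bias(leg_disp, ext_disp, dt):
    """Least-squares estimate of a constant velocity bias b (toy model).

    Each residual is r_k = leg_disp[k] - ext_disp[k] - b * dt, so the
    minimiser of sum(r_k ** 2) is the mean displacement discrepancy
    divided by dt.
    """
    if len(leg_disp) != len(ext_disp) or not leg_disp:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(leg_disp)
    return sum(l - e for l, e in zip(leg_disp, ext_disp)) / (n * dt)


def simulate(true_bias=0.05, dt=0.1, steps=500, seed=0):
    """Generate synthetic per-step displacements (all values are made up)."""
    rng = random.Random(seed)
    leg_disp, ext_disp = [], []
    for _ in range(steps):
        v_true = 0.5 + 0.1 * rng.uniform(-1.0, 1.0)
        # Leg odometry integrates a biased velocity, plus small noise.
        leg_disp.append((v_true + true_bias) * dt + rng.gauss(0.0, 0.002))
        # Exteroceptive displacement is unbiased, with its own noise.
        ext_disp.append(v_true * dt + rng.gauss(0.0, 0.002))
    return leg_disp, ext_disp


leg, ext = simulate()
b_hat = estimate_velocity_bias(leg, ext, dt=0.1)
print(f"estimated bias: {b_hat:.3f} m/s")
```

Without the second displacement source, the same leg measurements are explained equally well by any split between true velocity and bias, which is the sense in which the bias is unobservable from leg odometry alone in this toy model.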
