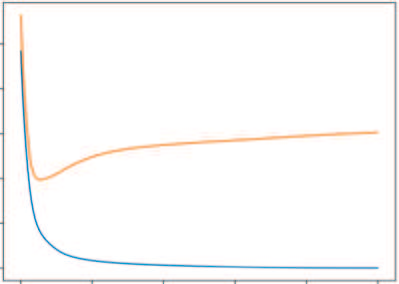

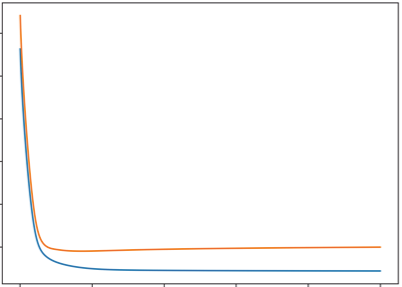

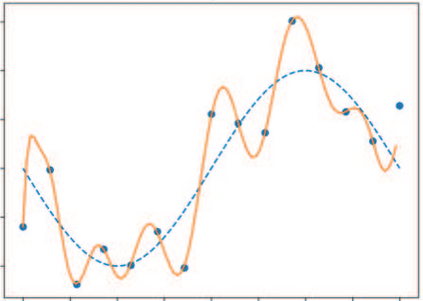

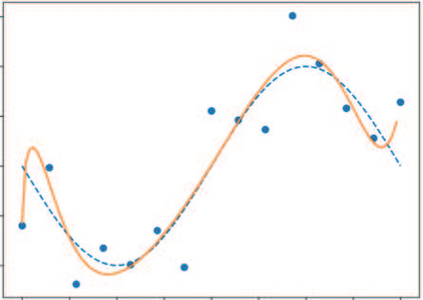

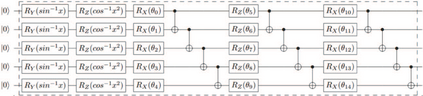

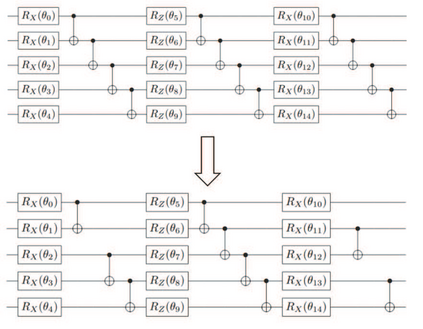

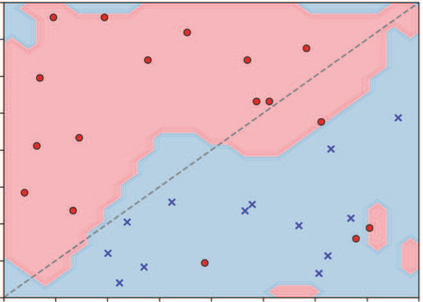

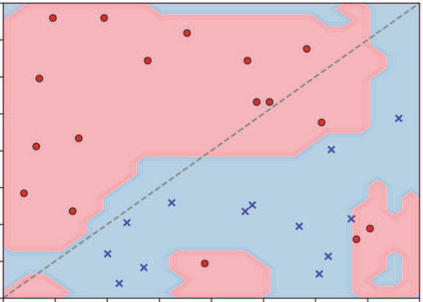

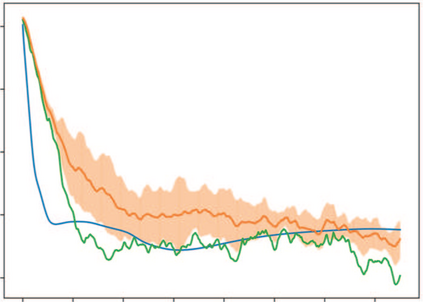

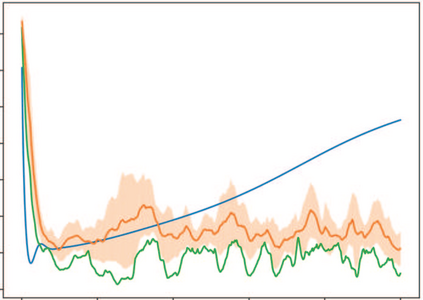

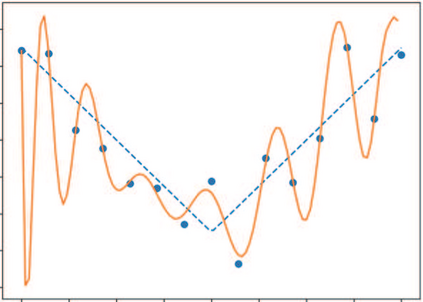

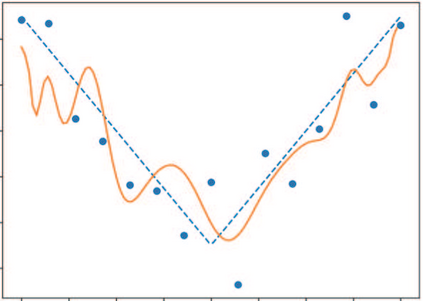

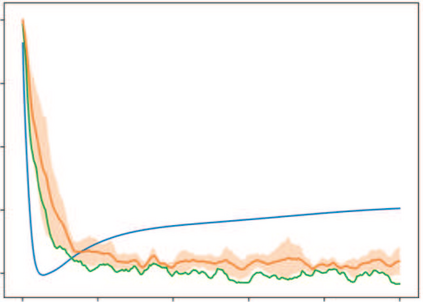

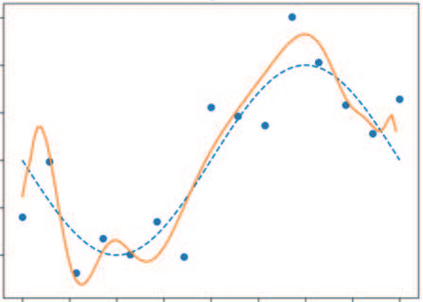

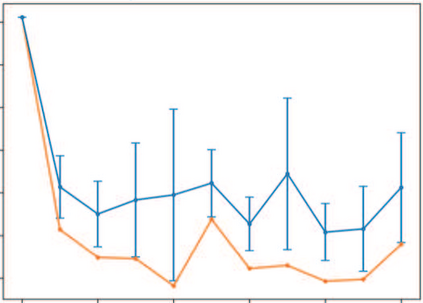

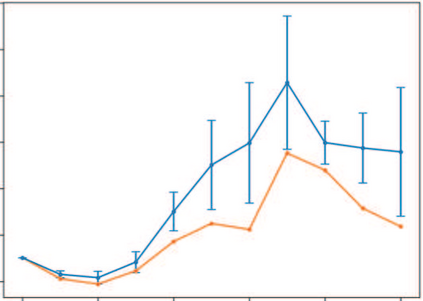

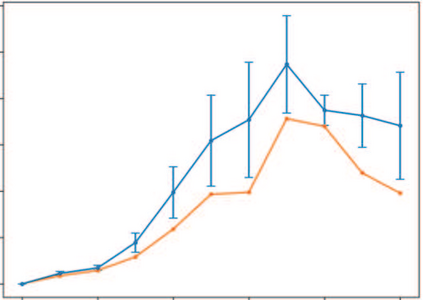

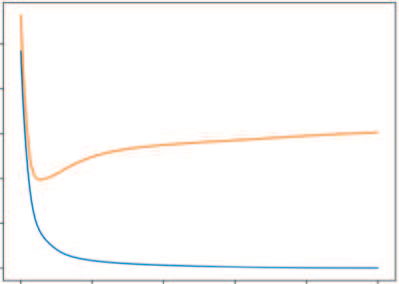

The ultimate goal in machine learning is to construct, from a given training dataset, a model function that generalizes to unseen data. If the model function has too much expressibility, it may overfit the training data and, as a result, lose its ability to generalize. Several techniques have been developed in classical machine learning to avoid such overfitting, and dropout is one effective method. This paper proposes a straightforward analogue of this technique for quantum machine learning, called entangling dropout. Some entangling gates in a given parametrized quantum circuit are randomly removed during training to reduce the expressibility of the circuit. Simple case studies show that this technique does suppress overfitting.
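
The abstract does not specify the circuit architecture, which entangling gates are candidates for removal, or the dropout rate. The following minimal NumPy sketch illustrates the idea under assumptions that are not taken from the paper. The ansatz is a hardware-efficient circuit of RY layers, each followed by a chain of CZ gates. Inputs are angle-encoded, and the model output is the expectation value of Z on qubit 0. During each training step, every CZ is independently dropped with probability `p_drop`, using one sampled mask per step. Training minimizes a squared loss with parameter-shift gradients, and evaluation uses the full circuit, mirroring classical dropout. All function names (`sample_cz_mask`, `run_circuit`, `train_step`, and so on) are hypothetical.

```python
import numpy as np

def apply_ry(state, theta, qubit, n):
    """Apply RY(theta) = exp(-i*theta*Y/2) to `qubit` of an n-qubit real statevector."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    ry = np.array([[c, -s], [s, c]])
    psi = np.moveaxis(state.reshape([2] * n), qubit, 0)
    psi = np.tensordot(ry, psi, axes=([1], [0]))  # contract gate with the qubit axis
    return np.moveaxis(psi, 0, qubit).reshape(-1)

def apply_cz(state, q1, q2, n):
    """CZ flips the sign of every amplitude where both qubits are |1>."""
    psi = state.reshape([2] * n).copy()
    idx = [slice(None)] * n
    idx[q1], idx[q2] = 1, 1
    psi[tuple(idx)] *= -1
    return psi.reshape(-1)

def sample_cz_mask(n_layers, n_qubits, p_drop, rng):
    """Entangling-dropout mask (assumed scheme): True keeps a CZ, False removes it."""
    return rng.random((n_layers, n_qubits - 1)) >= p_drop

def run_circuit(thetas, x, mask=None):
    """Angle-encode x, then apply layers of RY rotations and a CZ chain.
    mask=None means the full circuit (no gates dropped), used at evaluation."""
    n_layers, n = thetas.shape
    state = np.zeros(2 ** n)  # RY and CZ are real, so a real statevector suffices
    state[0] = 1.0
    for q in range(n):
        state = apply_ry(state, x[q], q, n)
    for l in range(n_layers):
        for q in range(n):
            state = apply_ry(state, thetas[l, q], q, n)
        for q in range(n - 1):
            if mask is None or mask[l, q]:
                state = apply_cz(state, q, q + 1, n)
    return state

def expectation_z0(state, n):
    """<Z> on qubit 0: P(qubit0 = 0) - P(qubit0 = 1)."""
    probs = np.abs(state.reshape([2] * n)) ** 2
    return probs[0].sum() - probs[1].sum()

def train_step(thetas, x, y, p_drop, lr, rng):
    """One gradient step on the squared loss through a randomly thinned circuit."""
    n_layers, n = thetas.shape
    mask = sample_cz_mask(n_layers, n, p_drop, rng)  # same dropped gates for all shifts
    pred = expectation_z0(run_circuit(thetas, x, mask), n)
    grad = np.zeros_like(thetas)
    for idx in np.ndindex(thetas.shape):
        shift = np.zeros_like(thetas)
        shift[idx] = np.pi / 2
        plus = expectation_z0(run_circuit(thetas + shift, x, mask), n)
        minus = expectation_z0(run_circuit(thetas - shift, x, mask), n)
        # d(pred)/d(theta) = (plus - minus) / 2 by the parameter-shift rule for RY;
        # d(loss)/d(pred) = 2 * (pred - y), so the factors of 2 cancel.
        grad[idx] = (pred - y) * (plus - minus)
    return thetas - lr * grad

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    thetas = rng.uniform(-np.pi, np.pi, (3, 4))  # 3 layers, 4 qubits
    x, y = rng.uniform(-1, 1, 4), 0.5            # one toy training sample
    for _ in range(20):
        thetas = train_step(thetas, x, y, p_drop=0.3, lr=0.1, rng=rng)
    print(expectation_z0(run_circuit(thetas, x), 4))  # evaluated with the full circuit
```

Sampling one mask per step and reusing it for every parameter shift keeps each gradient consistent with a single thinned circuit, just as classical dropout uses one mask per forward/backward pass. Dropping gates per layer, per qubit pair, or per training sample are equally plausible variants that the abstract leaves open.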

翻译:机器学习的最终目的是在特定的培训数据集的基础上,构建一个对看不见数据集具有通用能力的模型功能。 如果模型功能具有太多的可读性, 那么它可能会与培训数据过度匹配, 从而丧失一般化能力。 为了避免出现这种超配问题, 在古典机器学习制度中开发了几种技术, 辍学是这种有效方法之一。 本文建议在量子机器学习制度中, 即 聚合的退出中, 一种直接的类似技术, 意思是, 某个给定的对称化量子电路中的某些连接门在培训过程中被随机删除, 以减少电路的可读性。 一些简单的案例研究显示, 这种技术实际上抑制了超配。