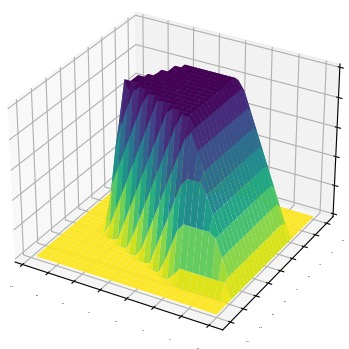

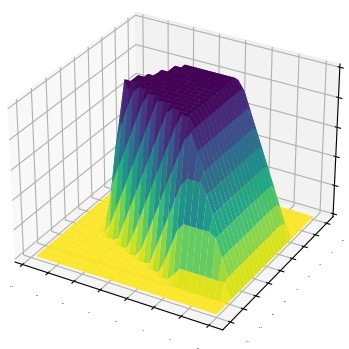

We present a novel methodology for repairing neural networks that use ReLU activation functions. Unlike existing methods, which modify the weights of a neural network and can thereby induce a global change in the function space, our approach applies only a localized change in the function space while still guaranteeing the removal of the buggy behavior. By leveraging the piecewise linear nature of ReLU networks, our approach efficiently constructs a patch network tailored to the linear region where the buggy input resides; combined with the original network, this patch provably corrects the behavior on the buggy input. Our method is both sound and complete: the repaired network is guaranteed to fix the buggy input, and a patch is guaranteed to be found for any buggy input. Moreover, our approach preserves the continuous piecewise linear nature of ReLU networks, automatically generalizes the repair to all points inside the repair region, including other undetected buggy inputs, is minimal in terms of changes in the function space, and guarantees that outputs on inputs away from the repair region are unaltered. On several benchmarks, we show that our approach significantly outperforms existing methods in terms of locality and limiting negative side effects.
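The piecewise linear property that the abstract relies on can be illustrated with a minimal sketch. It is not the patch construction itself, which the abstract does not spell out. It only shows that, for a fixed ReLU activation pattern, a network reduces to a single affine map on the linear region containing a given input. The one-hidden-layer network, its weights, and all function names below are hypothetical and chosen purely for illustration.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def pre_activations(W1: Matrix, b1: Vector, x: Vector) -> Vector:
    """Hidden-layer values before the ReLU is applied."""
    return [z + b for z, b in zip(matvec(W1, x), b1)]


def forward(W1: Matrix, b1: Vector, W2: Matrix, b2: Vector, x: Vector) -> Vector:
    """Evaluate the toy network f(x) = W2 * relu(W1 x + b1) + b2."""
    hidden = [max(0.0, z) for z in pre_activations(W1, b1, x)]
    return [z + b for z, b in zip(matvec(W2, hidden), b2)]


def activation_pattern(W1: Matrix, b1: Vector, x: Vector) -> List[int]:
    """1 for each active hidden unit, 0 otherwise; this identifies the linear region of x."""
    return [1 if z > 0 else 0 for z in pre_activations(W1, b1, x)]


def local_affine_map(
    W1: Matrix, b1: Vector, W2: Matrix, b2: Vector, x: Vector
) -> Tuple[Matrix, Vector]:
    """Return (A, c) such that f(x') = A x' + c for every x' sharing x's activation pattern."""
    pattern = activation_pattern(W1, b1, x)
    # Zero out the rows and biases of inactive units: on this region the ReLU is a fixed mask.
    W1m = [[p * w for w in row] for p, row in zip(pattern, W1)]
    b1m = [p * b for p, b in zip(pattern, b1)]
    A = [
        [sum(W2[i][k] * W1m[k][j] for k in range(len(W1m))) for j in range(len(x))]
        for i in range(len(W2))
    ]
    c = [z + b for z, b in zip(matvec(W2, b1m), b2)]
    return A, c


# Hypothetical toy network: 2 inputs, 3 hidden ReLU units, 1 output.
W1 = [[1.0, -1.0], [0.5, 1.0], [-1.0, 0.0]]
b1 = [0.0, -1.0, 0.5]
W2 = [[1.0, 2.0, -1.0]]
b2 = [0.25]

x_buggy = [2.0, 1.0]    # stand-in for a buggy input
x_near = [2.1, 1.05]    # a nearby point in the same linear region

A, c = local_affine_map(W1, b1, W2, b2, x_buggy)
affine_at_near = [z + ci for z, ci in zip(matvec(A, x_near), c)]
print(activation_pattern(W1, b1, x_buggy), A, c)
print(forward(W1, b1, W2, b2, x_near), affine_at_near)
```

Because the network agrees with a single affine function on the whole region, a correction defined relative to that region applies to every point in it. This is consistent with the abstract's claim that the repair generalizes to other inputs inside the repair region.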
