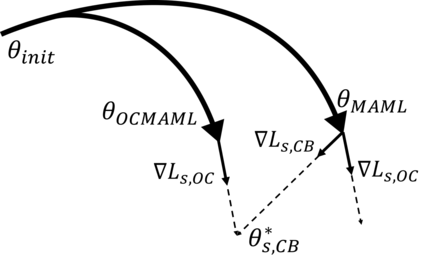

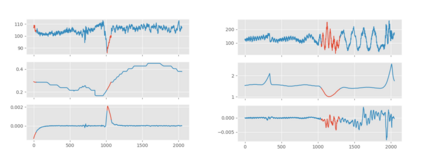

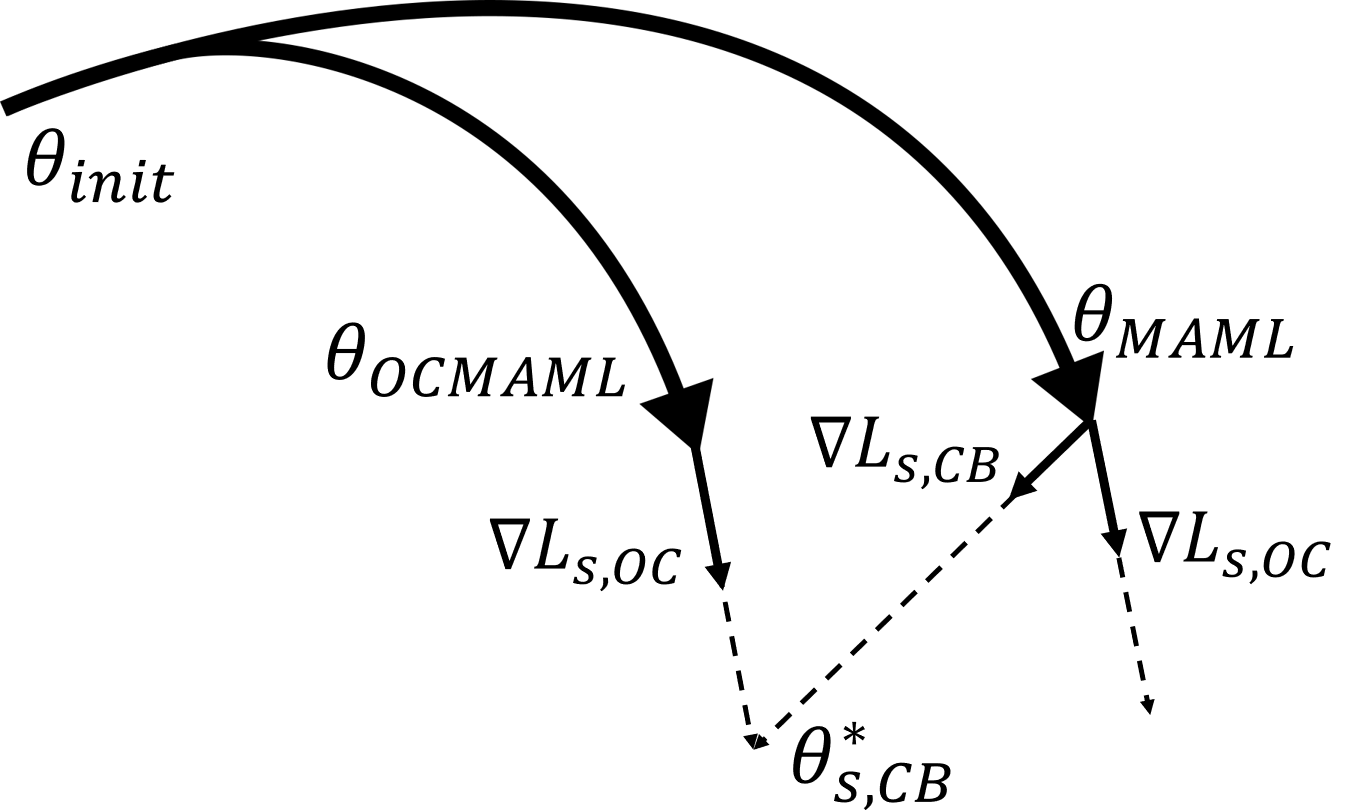

Although few-shot learning and one-class classification (OCC), i.e., learning a binary classifier with data from only one class, have each been well studied separately, their intersection remains largely unexplored. Our work addresses the few-shot OCC problem and presents a method that modifies the episodic data sampling strategy of the model-agnostic meta-learning (MAML) algorithm to learn a model initialization particularly suited to few-shot OCC tasks. This is done by explicitly optimizing for an initialization that requires only a few gradient steps with one-class minibatches to yield a performance increase on class-balanced test data. We provide a theoretical analysis that explains why our approach works in the few-shot OCC scenario, whereas other meta-learning algorithms, including unmodified MAML, fail. Our experiments on eight datasets from the image and time-series domains show that our method leads to better results than classical OCC and few-shot classification approaches, and demonstrate the ability to learn unseen tasks from only a few normal-class samples. Moreover, we successfully train anomaly detectors for a real-world application on sensor readings recorded during industrial manufacturing of workpieces with a CNC milling machine, using only a few normal examples. Finally, we empirically demonstrate that the proposed data sampling technique increases the performance of more recent meta-learning algorithms in few-shot OCC and yields state-of-the-art results in this problem setting.
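The episodic sampling idea described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `sample_occ_episode`, its parameters, and the label convention (0 for normal, 1 for anomalous) are assumptions made for illustration. The sketch covers only the data sampling: the support set used for the inner-loop gradient steps contains normal-class examples only, while the query set used to evaluate the adapted model is class-balanced.

```python
import random


def sample_occ_episode(examples, labels, k_shot, n_query_per_class, rng):
    """Sample one episode with a one-class support set and a balanced query set.

    Assumption (illustrative only): label 0 marks the normal class and
    label 1 marks the anomalous class.
    """
    normal_idx = [i for i, y in enumerate(labels) if y == 0]
    anomalous_idx = [i for i, y in enumerate(labels) if y == 1]

    # Draw all normal examples in one call (without replacement) so that the
    # support set and the normal half of the query set are disjoint.
    normal_pick = rng.sample(normal_idx, k_shot + n_query_per_class)
    support_idx = normal_pick[:k_shot]

    # The query set holds equally many normal and anomalous examples.
    query_idx = normal_pick[k_shot:] + rng.sample(anomalous_idx, n_query_per_class)

    support = [(examples[i], labels[i]) for i in support_idx]
    query = [(examples[i], labels[i]) for i in query_idx]
    return support, query


# Toy data: 150 normal and 50 anomalous examples, represented by their index.
rng = random.Random(0)
toy_examples = list(range(200))
toy_labels = [0] * 150 + [1] * 50

support, query = sample_occ_episode(
    toy_examples, toy_labels, k_shot=5, n_query_per_class=10, rng=rng
)
```

In a MAML-style training loop, `support` would drive the inner-loop adaptation and `query` would supply the outer-loop loss, so the initialization is optimized for how well one-class adaptation transfers to class-balanced data.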
