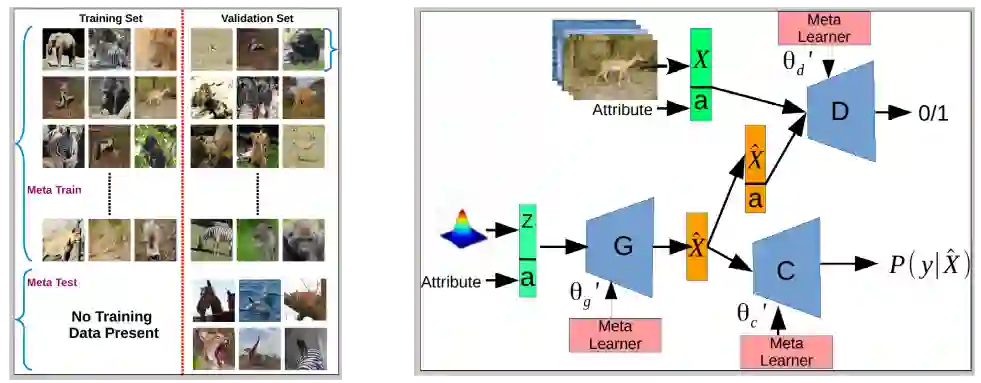

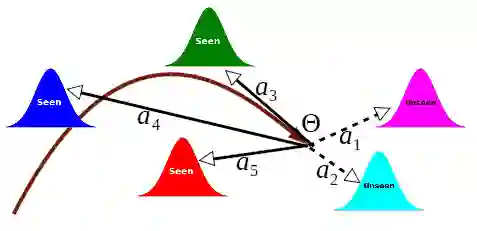

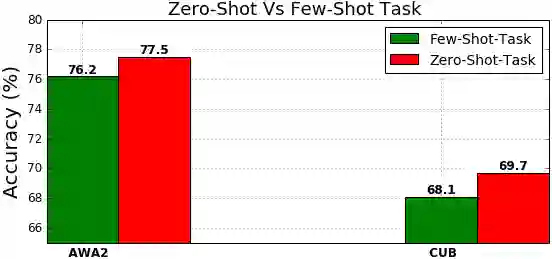

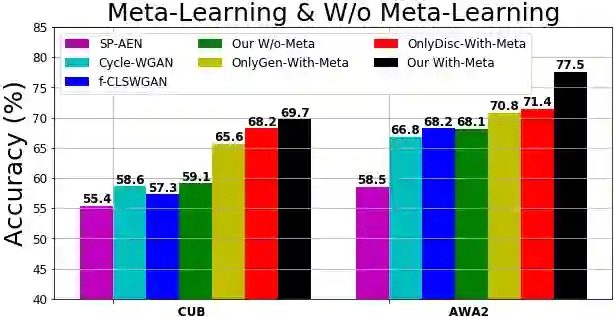

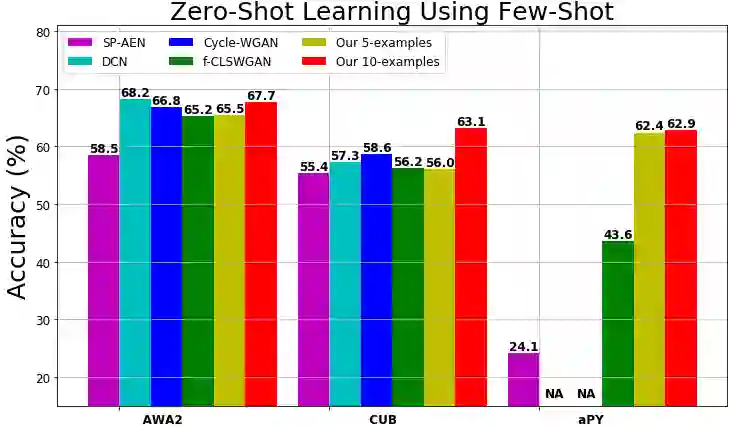

Learning to classify samples of unseen classes at test time is popularly referred to as zero-shot learning (ZSL). If test samples can come from the training (seen) classes as well as from the unseen classes, the problem is more challenging because of a strong bias towards the seen classes; this setting is generally known as \emph{generalized} zero-shot learning (GZSL). Thanks to recent advances in generative models such as VAEs and GANs, sample-synthesis-based approaches have gained considerable attention for solving this problem. These approaches handle the class-bias problem by synthesizing samples for the unseen classes. However, these ZSL/GZSL models suffer from the following key limitations: $(i)$ their training stage learns a class-conditioned generator using only \emph{seen}-class data and does not \emph{explicitly} learn to generate unseen-class samples; $(ii)$ they do not learn a generic optimal parameter that generalizes easily to both seen- and unseen-class generation; and $(iii)$ they tend to perform poorly when only very few samples per seen class are available. In this paper, we propose a meta-learning-based generative model that naturally handles these limitations. The proposed model integrates model-agnostic meta-learning (MAML) with a Wasserstein GAN (WGAN) to handle $(i)$ and $(iii)$, and uses a novel task distribution to handle $(ii)$. Our model yields significant improvements in the standard ZSL setting as well as in the more challenging GZSL setting. In the ZSL setting, it yields 4.5\%, 6.0\%, 9.8\%, and 27.9\% relative improvements over the current state of the art on the CUB, AWA1, AWA2, and aPY datasets, respectively.
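The abstract does not spell out the training procedure, so the following is only a toy sketch of the general idea of meta-learning a class-conditioned generator, not the authors' implementation. It rests on several assumptions that are ours: each meta-task splits a random subset of seen classes into *disjoint* meta-train and meta-validation classes (so the validation classes act like unseen classes); the "generator" is a scalar linear map g(a) = θ·a from a class attribute to a feature; and a plain squared error replaces the WGAN adversarial loss so the example needs no libraries. The update is first-order MAML: one inner gradient step on the meta-train classes, then an outer step on the shared initialization using the loss of the adapted parameters on the held-out classes. All names (`sample_task`, `make_batch`, `meta_train`, `W_TRUE`, `ATTRS`) are hypothetical.

```python
import random

# Hypothetical toy setup (not from the paper): class c has a scalar attribute
# ATTRS[c], and its real features are W_TRUE * ATTRS[c] plus Gaussian noise.
W_TRUE = 2.0
NOISE = 0.05
SEEN = list(range(20))                          # classes available for training
ATTRS = {c: 0.1 * (c + 1) for c in range(25)}   # classes 20-24 act as unseen classes


def sample_task(seen_classes, n_train_cls, n_val_cls, rng):
    """Draw a meta-task whose meta-train and meta-validation classes are disjoint.

    Assumed task distribution: the meta-validation classes are never used in the
    inner-loop adaptation, so they play the role of unseen classes.
    """
    chosen = rng.sample(seen_classes, n_train_cls + n_val_cls)
    return chosen[:n_train_cls], chosen[n_train_cls:]


def make_batch(classes, k, rng):
    """Return k (attribute, real feature) pairs per class."""
    return [(ATTRS[c], W_TRUE * ATTRS[c] + rng.gauss(0.0, NOISE))
            for c in classes for _ in range(k)]


def loss_and_grad(theta, batch):
    """Squared error of the toy generator g(a) = theta * a and its gradient.

    This stands in for the WGAN generator loss purely to keep the sketch small.
    """
    n = len(batch)
    loss = sum((theta * a - x) ** 2 for a, x in batch) / n
    grad = sum(2.0 * (theta * a - x) * a for a, x in batch) / n
    return loss, grad


def meta_train(seen_classes, steps=500, alpha=0.1, beta=0.05, k=5, seed=0):
    """First-order MAML over tasks with disjoint class splits."""
    rng = random.Random(seed)
    theta = 0.0
    for _ in range(steps):
        train_cls, val_cls = sample_task(seen_classes, 3, 2, rng)
        train_batch = make_batch(train_cls, k, rng)
        val_batch = make_batch(val_cls, k, rng)
        # Inner loop: adapt to the task's meta-train classes with one SGD step.
        _, g_train = loss_and_grad(theta, train_batch)
        theta_adapted = theta - alpha * g_train
        # Outer loop: score the adapted generator on the disjoint classes and
        # update the shared initialization (first-order approximation).
        _, g_val = loss_and_grad(theta_adapted, val_batch)
        theta -= beta * g_val
    return theta


theta = meta_train(SEEN)
unseen_feature = theta * ATTRS[22]  # synthesized feature for a class never trained on
print(f"theta={theta:.3f}, synthesized unseen feature={unseen_feature:.3f}")
```

Because the outer objective is always measured on classes the adapted parameters never saw, the initialization is rewarded for generalizing beyond its adaptation classes. A real generator would replace the squared error with an adversarial (critic-based) loss, which this sketch deliberately omits.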

翻译:在测试时学习对隐蔽类样本进行分类,通常被称为零光学习(ZSL)。如果测试样本来自培训(见)和隐蔽类,那么由于存在对可见类的强烈偏向,这是一个更具挑战性的问题。这个问题通常被称为“emph{fcfcfcfcfccd 零光学习(GZSL) ” 。由于VAEs和GANs等基因化模型的最近进步,基于抽样合成的方法在解决这一问题上获得了相当大的关注。这些方法能够通过合成不可见类样本来处理课堂偏差问题。然而,这些ZSL/GZSL的模型由于以下关键限制而受害:$(一) 其培训阶段只用\emph{ffcfcen} 零光学习(GZSLS) 。由于VAE和NSLIC的模型最近进步, $(二) 它们不会在所见和不见的课堂上很容易地使用。