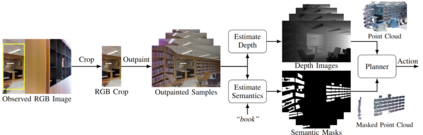

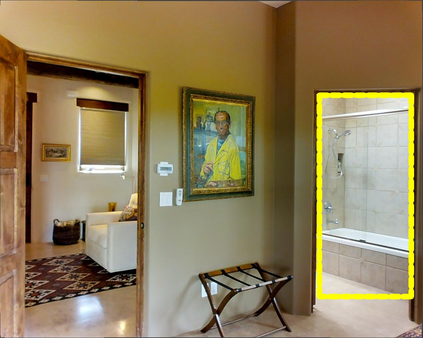

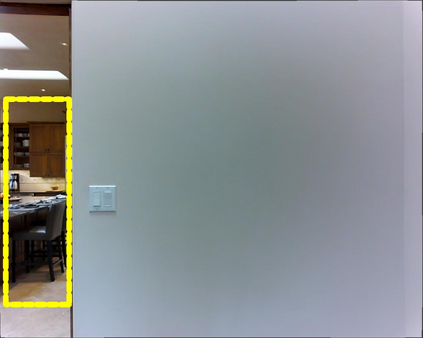

Priors are vital for planning under partial observability, yet difficult to obtain in practice. We present a sampling-based pipeline that leverages large-scale pretrained generative models to produce probabilistic priors capturing environmental uncertainty and spatio-semantic relationships in a zero-shot manner. Conditioned on partial observations, the pipeline recovers complete RGB-D point cloud samples with occupancy and target semantics, formulated to be directly useful in configuration-space planning. We establish a Matterport3D benchmark of rooms partially visible through doorways, where a robot must navigate to an unobserved target object. Effective priors for this setting must represent both occupancy and target-location uncertainty in unobserved regions. Experiments show that our approach recovers commonsense spatial semantics consistent with ground truth, yielding diverse, clean 3D point clouds usable in motion planning. These results highlight the promise of generative models as a rich source of priors for robotic planning.
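As an illustration of how a set of sampled point clouds could be turned into a probabilistic prior, the sketch below averages per-sample voxel grids into per-voxel occupancy and target-presence probabilities. This is a minimal sketch and not the pipeline described above: the voxel-grid representation, the function names `voxelize` and `empirical_prior`, and the `(points, is_target)` sample format are all assumptions introduced for the example.

```python
import numpy as np


def voxelize(points, origin, voxel_size, grid_shape):
    """Return a boolean grid marking voxels that contain at least one point."""
    grid = np.zeros(grid_shape, dtype=bool)
    pts = np.asarray(points, dtype=float).reshape(-1, 3)
    idx = np.floor((pts - origin) / voxel_size).astype(int)
    # Drop points that fall outside the grid bounds.
    inside = np.all((idx >= 0) & (idx < np.array(grid_shape)), axis=1)
    idx = idx[inside]
    grid[idx[:, 0], idx[:, 1], idx[:, 2]] = True
    return grid


def empirical_prior(samples, origin, voxel_size, grid_shape):
    """Average per-sample grids into per-voxel probabilities.

    samples: list of (points, is_target) pairs (hypothetical format), where
    points is an (N, 3) array and is_target is an (N,) boolean mask marking
    points labeled as the target object.
    """
    occupancy = np.zeros(grid_shape, dtype=float)
    target = np.zeros(grid_shape, dtype=float)
    for points, is_target in samples:
        points = np.asarray(points, dtype=float).reshape(-1, 3)
        is_target = np.asarray(is_target, dtype=bool)
        occupancy += voxelize(points, origin, voxel_size, grid_shape)
        target += voxelize(points[is_target], origin, voxel_size, grid_shape)
    n = len(samples)
    return occupancy / n, target / n
```

Under these assumptions, a planner could read the first grid as the probability that a voxel is occupied and the second as the probability that it contains the target, with both estimates becoming less noisy as more samples are drawn.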
