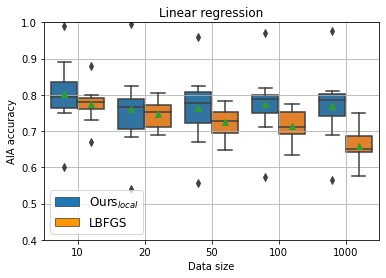

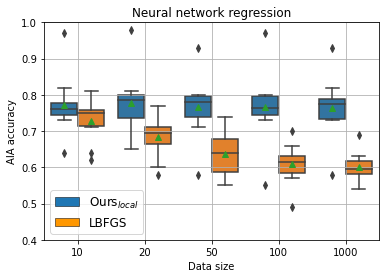

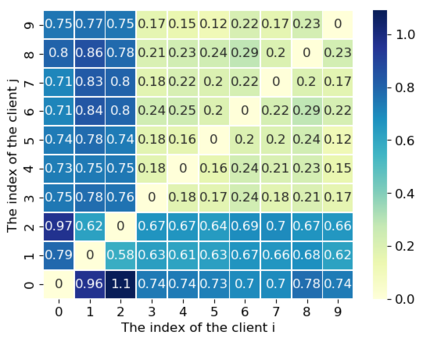

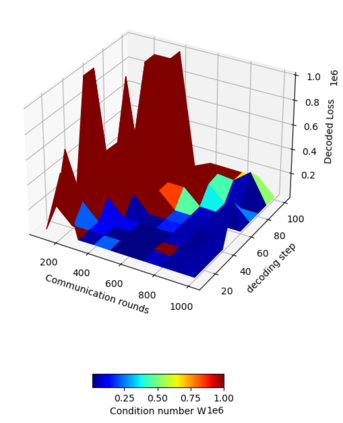

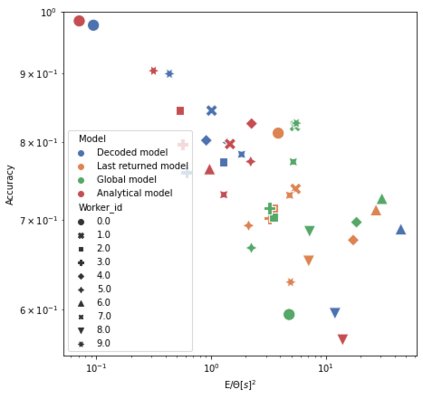

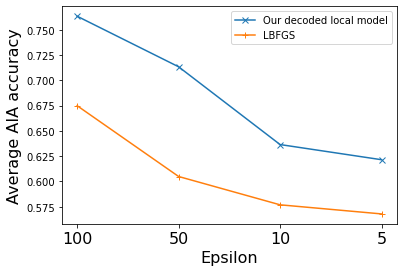

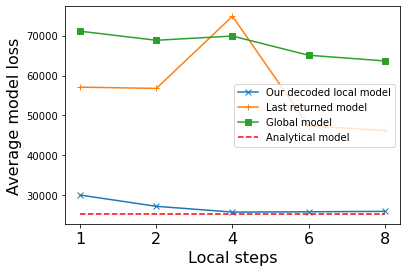

In this paper, we initiate the study of local model reconstruction attacks for federated learning (FL), where an honest-but-curious adversary eavesdrops on the messages exchanged between a targeted client and the server and then reconstructs the local/personalized model of the victim. The local model reconstruction attack allows the adversary to launch other classical attacks more effectively, since the local model depends only on the client's data and can leak more private information than the global model learned by the server. Additionally, we propose a novel model-based attribute inference attack in federated learning that leverages the local model reconstruction attack. We provide an analytical lower bound for this attribute inference attack. Empirical results on real-world datasets confirm that our local reconstruction attack works well for both regression and classification tasks. Moreover, we benchmark our novel attribute inference attack against state-of-the-art attacks in federated learning. Our attack achieves higher reconstruction accuracy, especially when the clients' datasets are heterogeneous. Our work provides a new angle for designing powerful and explainable attacks that effectively quantify the privacy risk in FL.
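The abstract does not spell out the reconstruction procedure. Below is a minimal sketch under stated assumptions, not the paper's algorithm. It assumes a least-squares regression client that runs a fixed number of full-batch gradient steps per round. Under that assumption, the model the client returns is an affine function `A @ theta_in + b` of the global model it received. An eavesdropper can fit `A` and `b` from the recorded (sent, returned) model pairs and solve for the map's fixed point, which is the client's local optimum. A model-based attribute inference step then plugs each candidate value of a sensitive feature into the reconstructed model and keeps the value with the smallest loss. The function names, the binary sensitive attribute in column 0, the random stand-ins for the per-round global models, and the synthetic data are all hypothetical.

```python
import numpy as np

def client_update(theta, X, y, lr, steps):
    """Hypothetical honest client: `steps` full-batch GD steps on the mean squared error."""
    n = X.shape[0]
    for _ in range(steps):
        grad = X.T @ (X @ theta - y) / n
        theta = theta - lr * grad
    return theta

def reconstruct_local_model(thetas_in, thetas_out):
    """Fit theta_out = A @ theta_in + b from eavesdropped pairs, then return its fixed point."""
    m, d = thetas_in.shape
    # Rows of Z are [theta_in, 1]; least squares recovers coef = [A^T; b^T].
    Z = np.hstack([thetas_in, np.ones((m, 1))])
    coef, *_ = np.linalg.lstsq(Z, thetas_out, rcond=None)
    A, b = coef[:d].T, coef[d]
    # The client's local optimum theta* is the fixed point theta* = A @ theta* + b.
    return np.linalg.solve(np.eye(d) - A, b)

def infer_attribute(theta, x, y, s_idx, candidates=(0.0, 1.0)):
    """Model-based attribute inference: keep the candidate value with the lowest squared error."""
    losses = []
    for s in candidates:
        x_try = x.copy()
        x_try[s_idx] = s  # overwrite the unknown sensitive feature with a candidate value
        losses.append((x_try @ theta - y) ** 2)
    return candidates[int(np.argmin(losses))]

# Synthetic client data (hypothetical): column 0 is a binary sensitive attribute.
rng = np.random.default_rng(0)
n, d = 200, 4
X = rng.normal(size=(n, d))
X[:, 0] = rng.integers(0, 2, size=n)
y = X @ np.array([3.0, 1.0, -2.0, 0.5]) + 0.1 * rng.normal(size=n)

# The eavesdropper records the global model sent in each round and the client's reply.
# Random vectors stand in for the global models of 10 different rounds (assumption).
thetas_in = rng.normal(size=(10, d))
thetas_out = np.array([client_update(t, X, y, lr=0.1, steps=5) for t in thetas_in])

theta_hat = reconstruct_local_model(thetas_in, thetas_out)
theta_star = np.linalg.lstsq(X, y, rcond=None)[0]  # ground truth, unknown to the adversary
print("reconstruction error:", np.linalg.norm(theta_hat - theta_star))

# Attribute inference: the adversary knows the other features and the label, not column 0.
guesses = np.array([infer_attribute(theta_hat, X[i], y[i], s_idx=0) for i in range(n)])
accuracy = np.mean(guesses == X[:, 0])
print("attribute inference accuracy:", accuracy)
```

The fixed-point step works in this sketch because the exact local optimum is unchanged by a gradient step. It therefore satisfies `theta* = A @ theta* + b`. `I - A` is invertible whenever the step size keeps gradient descent contractive. Noisy messages, stochastic local updates, or non-linear models (for example, neural networks in classification tasks) would break the exact affine relation this sketch relies on.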

翻译:在本文中,我们开始研究当地重建攻击模式,以进行联合学习,在其中,一个诚实但有说服力的对手窃听目标客户和服务器之间交换的信息,然后重建受害者的地方/个性化模式。当地重建攻击模式允许对手以更有效的方式触发其他古典攻击,因为当地模式只取决于客户的数据,而且可以泄露比服务器所学全球模式更多的私人信息。此外,我们提议在利用当地模式重建攻击时,在联合学习中,进行基于新颖的模型属性推断攻击。我们为这种属性推断攻击提供了较低的分析。使用真实世界数据集的经验证明,我们当地的重建攻击在回归和分类任务方面都效果良好。此外,我们衡量我们的新颖的推论,即攻击是针对联邦学习中最先进的攻击。我们的攻击导致重建的准确性更高,特别是当客户的数据集是异质的时。我们的工作为设计强大和可解释的攻击提供了一个新的角度,以便有效地量化FL的隐私风险。