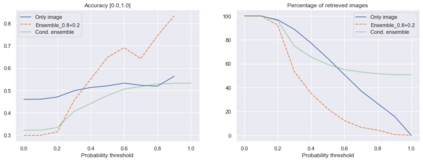

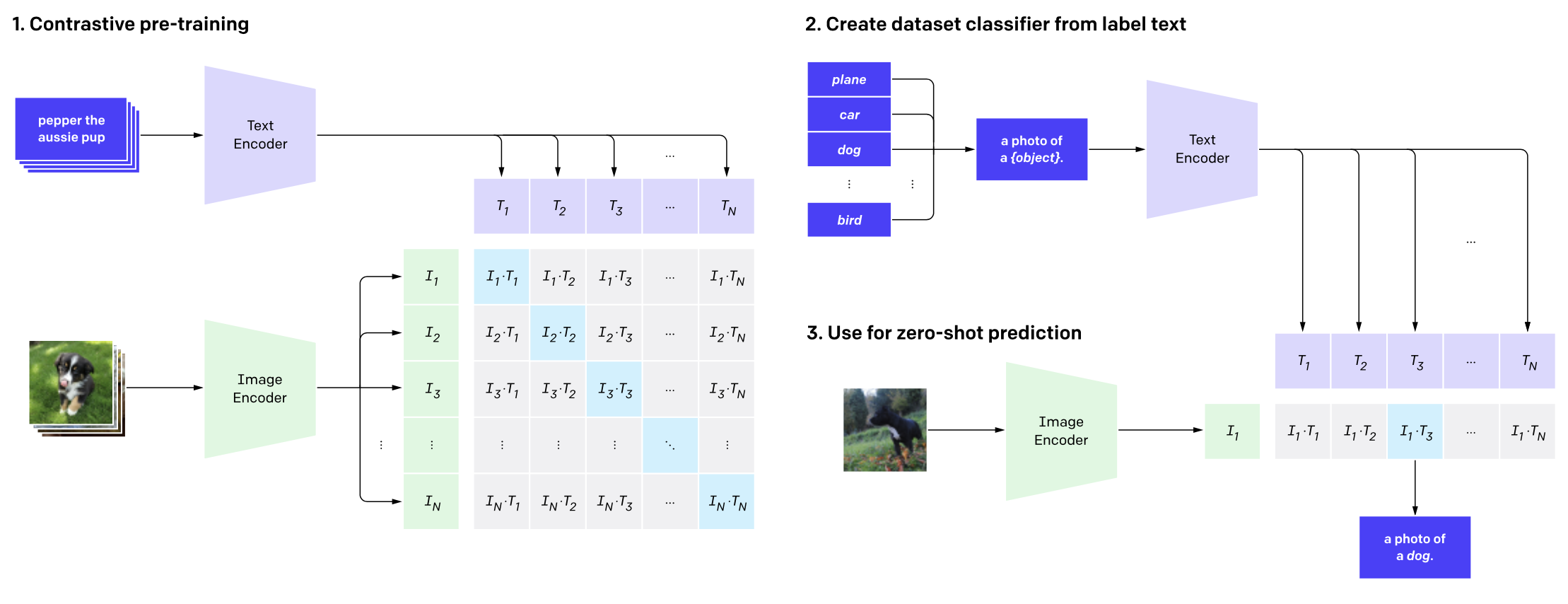

One of the main challenges in unsupervised machine learning is the cost of processing and extracting useful information from large datasets. In this work, we propose a classifier ensemble that builds on the transfer learning capabilities of the CLIP neural network architecture in multimodal (image and text) social media settings. To this end, we use the InstaNY100K dataset and propose a validation approach based on sampling techniques. Our experiments address image classification according to the labels of the Places dataset, first considering only the visual content and then adding the associated texts as support. The results show that pretrained neural networks such as CLIP can be applied successfully to image classification with little fine-tuning, and that taking the texts associated with the images into account can improve accuracy, depending on the goal. These results point to a promising research direction.
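
To make the idea of using text as support for image classification concrete, the following is a minimal sketch, not the ensemble evaluated in this work. It assumes that image, caption, and label-prompt embeddings have already been produced by a CLIP-style encoder and live in a shared space; the toy three-dimensional vectors, the function names, and the `text_weight` and `temperature` parameters are all hypothetical choices made for illustration. The sketch scores each label by cosine similarity and combines the image-based and caption-based distributions with a weighted average (a simple late-fusion scheme).

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def softmax(scores, temperature=1.0):
    """Turn similarity scores into a probability distribution."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp((s - top) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(image_emb, label_embs, caption_emb=None, text_weight=0.3):
    """Pick a label from image similarity, optionally fused with caption similarity.

    text_weight is a hypothetical mixing coefficient, not a value from the paper.
    """
    labels = list(label_embs)
    probs = softmax([cosine(image_emb, label_embs[name]) for name in labels])
    if caption_emb is not None:
        text_probs = softmax([cosine(caption_emb, label_embs[name]) for name in labels])
        # Late fusion: weighted average of the two distributions.
        probs = [(1 - text_weight) * p + text_weight * q
                 for p, q in zip(probs, text_probs)]
    best = max(range(len(labels)), key=probs.__getitem__)
    return labels[best], dict(zip(labels, probs))

# Toy embeddings standing in for encoder outputs (assumed, not real CLIP features).
label_embs = {
    "park": [1.0, 0.0, 0.0],
    "restaurant": [0.0, 1.0, 0.0],
    "street": [0.0, 0.0, 1.0],
}
image_emb = [0.6, 0.5, 0.1]    # visually ambiguous between park and restaurant
caption_emb = [0.1, 0.9, 0.1]  # caption points clearly to restaurant

image_only_label, _ = classify(image_emb, label_embs)
fused_label, fused_probs = classify(image_emb, label_embs, caption_emb)
print(image_only_label, fused_label)
```

In this toy setup the image alone slightly favors one label, while the caption shifts the fused decision to another, which illustrates how associated text can change the outcome. Whether that shift helps depends on the classification goal and on how reliable the text is.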
