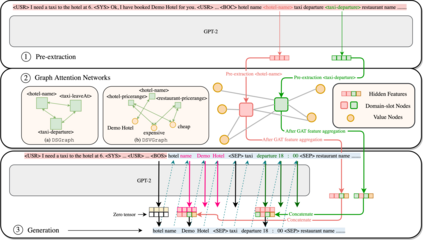

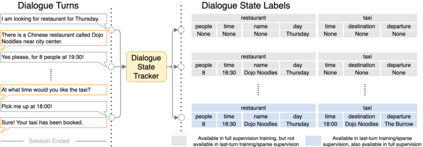

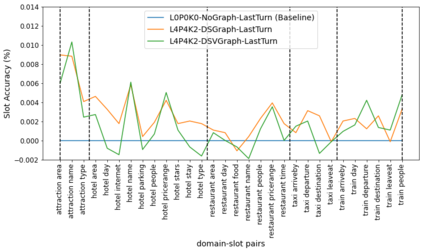

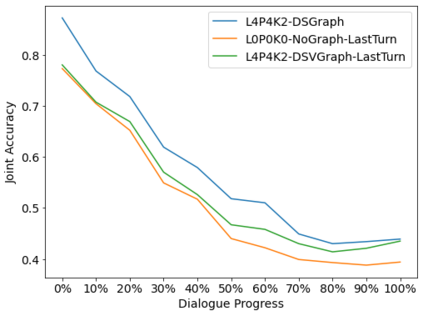

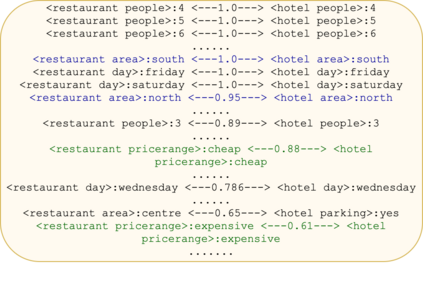

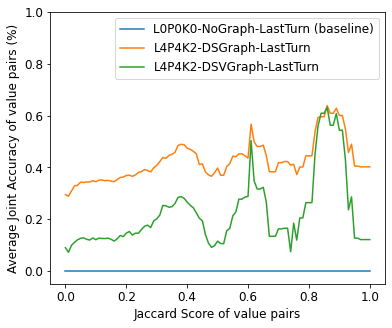

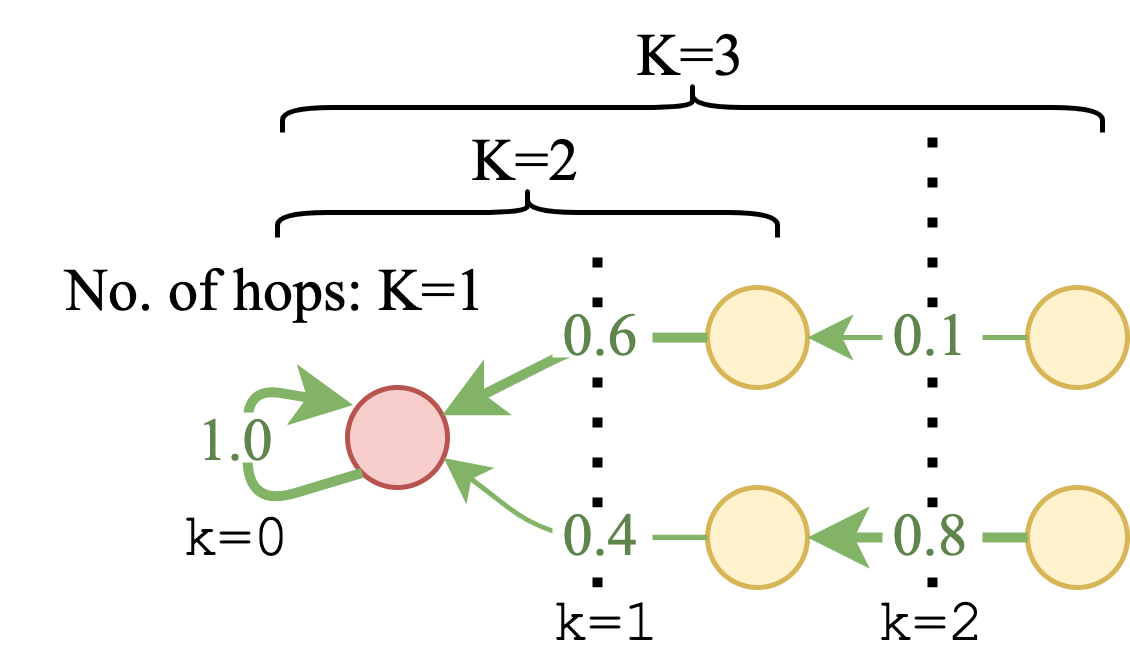

Dialogue State Tracking (DST) is central to multi-domain task-oriented dialogue systems, responsible for extracting information from user utterances. We present a novel hybrid architecture that augments GPT-2 with representations derived from Graph Attention Networks in a way that allows causal, sequential prediction of slot values. The architecture captures inter-slot relationships and cross-domain dependencies that can otherwise be lost in sequential prediction. We report improvements in state tracking performance on MultiWOZ 2.0 against a strong GPT-2 baseline, and we investigate a simplified sparse training scenario in which DST models are trained only on session-level annotations but evaluated at the turn level. We further report detailed analyses that demonstrate the effectiveness of graph models in DST, showing that the proposed graph modules capture inter-slot dependencies and improve the prediction of values that are common to multiple domains.
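
To make the graph component more concrete, the following is a minimal sketch of a single-head graph attention layer applied to slot nodes, written in NumPy. It is not the authors' implementation: the slot names, the adjacency matrix, the feature sizes, and the random weights are all hypothetical, chosen only to illustrate how attention over a slot graph lets slots that tend to share values (for example, areas or destinations across domains) exchange information. How the resulting node representations are combined with GPT-2 is not specified in the abstract and is not shown here.

```python
import numpy as np

def graph_attention_layer(h, adj, W, a, slope=0.2):
    """Single-head GAT-style attention over node features.

    h:   (N, F) node features, one row per slot (hypothetical setup)
    adj: (N, N) 0/1 adjacency matrix that includes self-loops
    W:   (F, F_out) shared linear projection
    a:   (2 * F_out,) attention vector
    """
    z = h @ W                                  # projected features, (N, F_out)
    f_out = z.shape[1]
    # e[i, j] = LeakyReLU(a^T [z_i || z_j]), computed as a sum of two parts
    src = z @ a[:f_out]                        # contribution of node i
    dst = z @ a[f_out:]                        # contribution of neighbour j
    e = src[:, None] + dst[None, :]            # raw scores, (N, N)
    e = np.where(e > 0, e, slope * e)          # LeakyReLU
    e = np.where(adj > 0, e, -np.inf)          # mask out non-neighbours
    e = e - e.max(axis=1, keepdims=True)       # stabilise the softmax
    alpha = np.exp(e)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)
    return alpha @ z, alpha                    # updated features, attention

# Hypothetical slot graph: edges link slots that may share values.
slots = ["hotel-area", "restaurant-area", "taxi-destination", "hotel-name"]
adj = np.array([
    [1, 1, 0, 0],   # hotel-area <-> restaurant-area
    [1, 1, 0, 0],
    [0, 0, 1, 1],   # taxi-destination <-> hotel-name
    [0, 0, 1, 1],
])

rng = np.random.default_rng(0)
h = rng.normal(size=(len(slots), 8))           # stand-in slot embeddings
W = rng.normal(size=(8, 4))
a = rng.normal(size=(8,))

out, alpha = graph_attention_layer(h, adj, W, a)
```

Each row of `alpha` is a probability distribution over that slot's neighbours, so `out` holds one updated vector per slot that mixes in information from connected slots only.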
