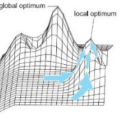

Correlation plays a critical role in visual tracking, especially in the recently popular Siamese-based trackers. The correlation operation is a simple way to fuse features by measuring the similarity between the template and the search region. However, correlation itself is a local linear matching process, which loses semantic information and easily falls into local optima; this may be the bottleneck in designing high-accuracy tracking algorithms. Is there a better feature fusion method than correlation? To address this question, inspired by the Transformer, this work presents a novel attention-based feature fusion network that effectively combines the template and search region features using attention alone. Specifically, the proposed method includes an ego-context augment module based on self-attention and a cross-feature augment module based on cross-attention. Finally, we present a Transformer tracking method (named TransT) built from a Siamese-like feature extraction backbone, the designed attention-based fusion mechanism, and a classification and regression head. Experiments show that TransT achieves very promising results on six challenging datasets, especially on the large-scale LaSOT, TrackingNet, and GOT-10k benchmarks. Our tracker runs at approximately 50 fps on a GPU. Code and models are available at https://github.com/chenxin-dlut/TransT.
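
To make the fusion idea concrete, the following is a minimal sketch of attention-based fusion, not the released TransT implementation. It assumes single-head scaled dot-product attention with residual connections and omits the learned projections, positional encodings, normalization, and stacking that a real model would use. The function names (`ego_context_augment`, `cross_feature_augment`, `fuse`) are hypothetical and chosen only to mirror the module names in the abstract.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of feature vectors."""
    scale = math.sqrt(len(queries[0]))
    output = []
    for q in queries:
        # Similarity of this query to every key, turned into weights.
        weights = softmax(
            [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        )
        # Weighted sum of the value vectors, channel by channel.
        output.append(
            [sum(w * v[c] for w, v in zip(weights, values))
             for c in range(len(values[0]))]
        )
    return output


def add(x, y):
    """Element-wise sum of two equally shaped lists of vectors."""
    return [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(x, y)]


def ego_context_augment(features):
    """Self-attention: each position attends to its own feature map."""
    return add(features, attention(features, features, features))


def cross_feature_augment(query_features, other_features):
    """Cross-attention: one branch attends to the other branch."""
    attended = attention(query_features, other_features, other_features)
    return add(query_features, attended)


def fuse(template, search):
    """One round of fusion (assumed layout); returns the search branch."""
    template = ego_context_augment(template)
    search = ego_context_augment(search)
    # The search branch queries the template branch for target information.
    return cross_feature_augment(search, template)


# Toy inputs: 2 template positions and 3 search positions, 2 channels each.
template = [[1.0, 0.0], [0.0, 1.0]]
search = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
fused = fuse(template, search)
```

Unlike a correlation response, every output position here is a non-linear, softmax-weighted mixture of all positions in the other branch, and the output keeps the full channel dimension of the search features.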
