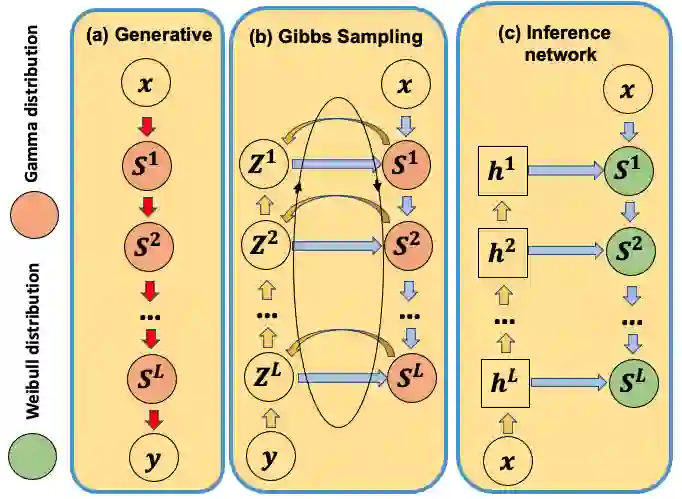

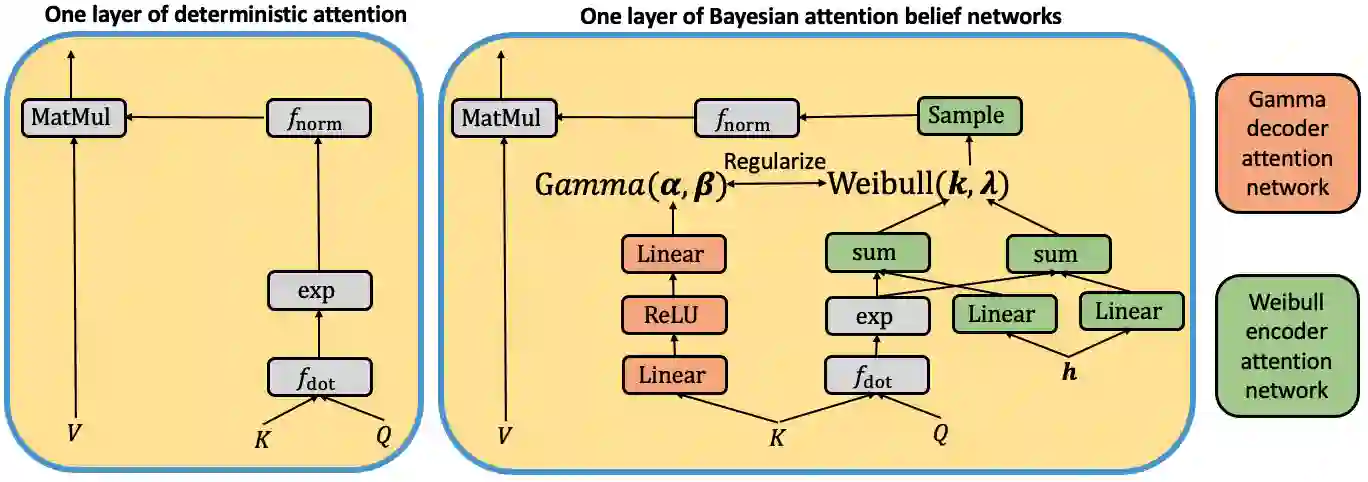

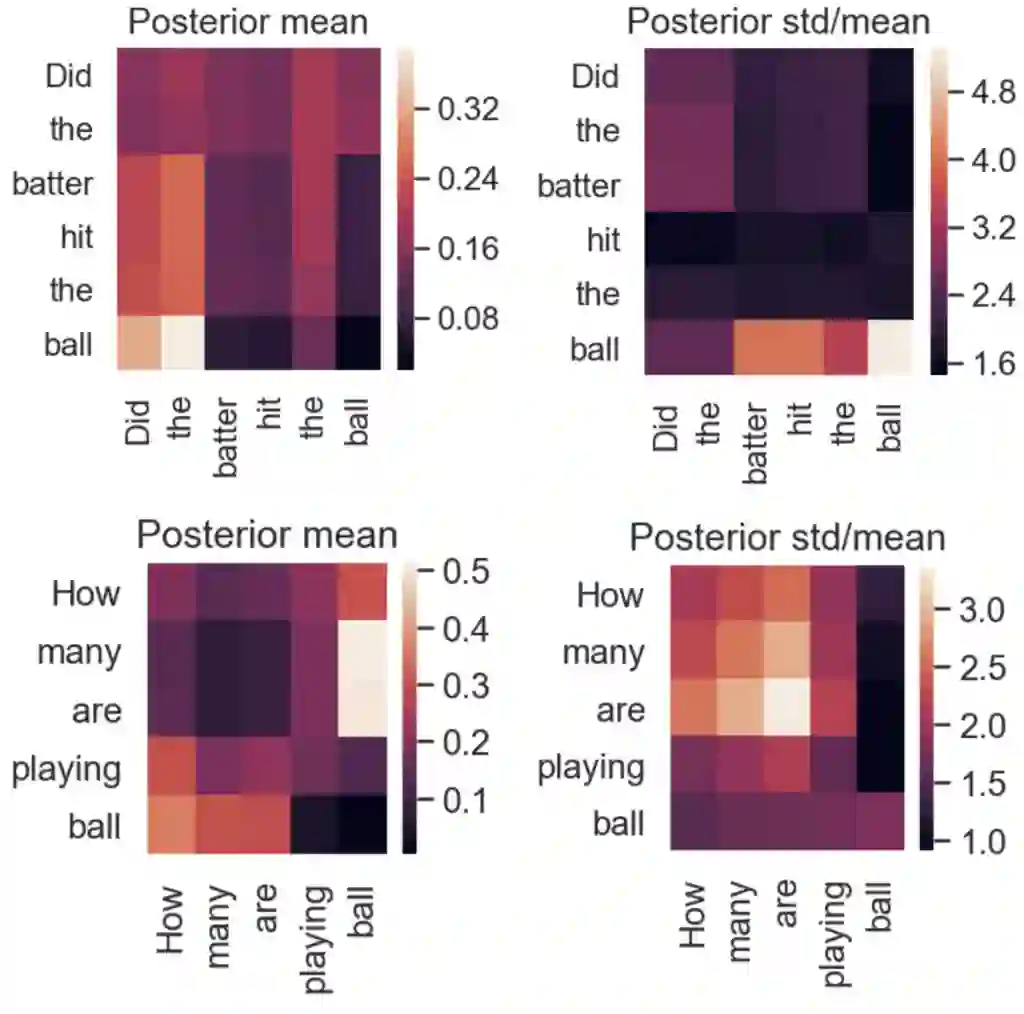

Attention-based neural networks have achieved state-of-the-art results on a wide range of tasks. Most such models use deterministic attention, while stochastic attention is less explored because of optimization difficulties or complicated model design. This paper introduces Bayesian attention belief networks, which construct a decoder network by modeling unnormalized attention weights with a hierarchy of gamma distributions, and an encoder network by stacking Weibull distributions with a deterministic-upward-stochastic-downward structure to approximate the posterior. The resulting auto-encoding networks can be optimized in a differentiable way with a variational lower bound. It is simple to convert any model with deterministic attention, including a pretrained one, to the proposed Bayesian attention belief networks. On a variety of language understanding tasks, we show that our method outperforms deterministic attention and state-of-the-art stochastic attention in accuracy, uncertainty estimation, generalization across domains, and robustness to adversarial attacks. We further demonstrate the general applicability of our method on neural machine translation and visual question answering, showing the potential of incorporating our method into various attention-related tasks.
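The abstract gives no equations, so the following is only a minimal sketch of one ingredient it names: drawing unnormalized attention weights from Weibull distributions through a differentiable (inverse-CDF) reparameterization, then normalizing them. It assumes that each Weibull scale is set so the sample mean equals the exponentiated deterministic attention score; that choice, the function names `sample_weibull` and `stochastic_attention`, and the `shape` parameter are illustrative assumptions, not details confirmed by the paper. The sketch covers a single attention layer and omits the gamma hierarchy and the variational lower bound.

```python
import math
import random


def sample_weibull(scale, shape, rng):
    """Draw one Weibull(scale, shape) sample by inverting the CDF.

    x = scale * (-log(1 - u)) ** (1 / shape), with u ~ Uniform(0, 1).
    The sample is a smooth function of `scale` and `shape`, which is
    what makes this kind of sampling usable with gradient-based training.
    """
    u = max(rng.random(), 1e-12)  # keep u strictly positive so the sample is > 0
    return scale * (-math.log(1.0 - u)) ** (1.0 / shape)


def stochastic_attention(scores, shape=10.0, rng=None):
    """Turn deterministic attention scores into sampled attention weights.

    Assumption (hypothetical, for illustration): each unnormalized weight
    is Weibull distributed with mean exp(score), so the scale is
    exp(score) / Gamma(1 + 1/shape).
    """
    rng = rng or random.Random()
    top = max(scores)  # subtract the max for numerical stability; it cancels on normalization
    unnormalized = []
    for s in scores:
        scale = math.exp(s - top) / math.gamma(1.0 + 1.0 / shape)
        unnormalized.append(sample_weibull(scale, shape, rng))
    total = sum(unnormalized)
    return [w / total for w in unnormalized]


if __name__ == "__main__":
    weights = stochastic_attention([1.0, 2.0, 3.0], shape=10.0, rng=random.Random(0))
    print(weights)
```

Under these assumptions, a larger `shape` concentrates each sample around its mean, so the sampled weights approach the ordinary softmax of the scores; a smaller `shape` yields noisier attention.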
