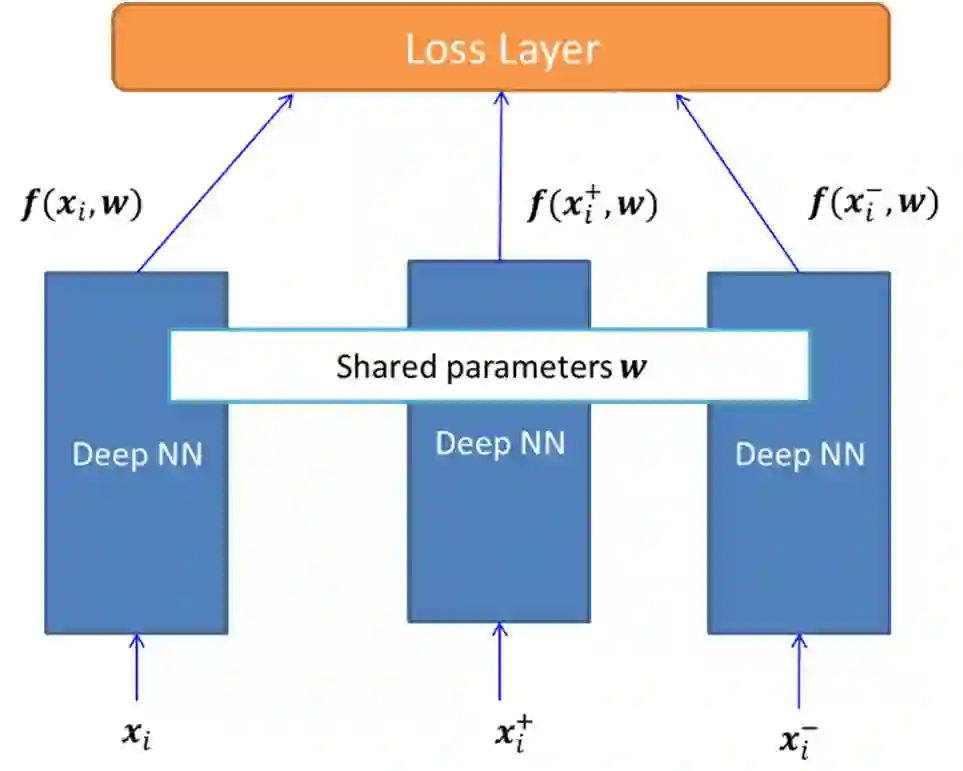

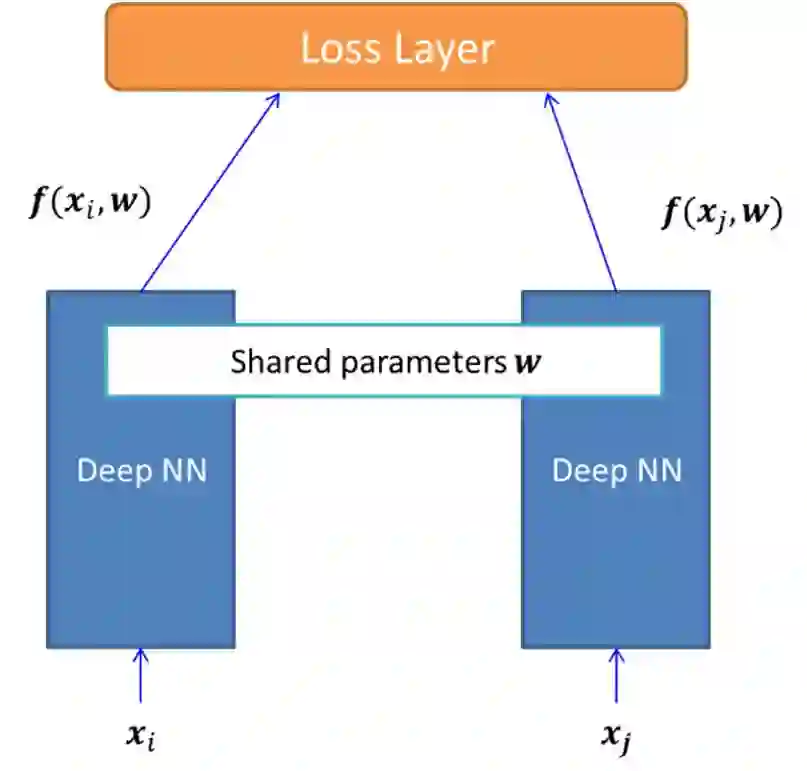

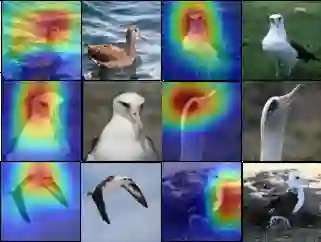

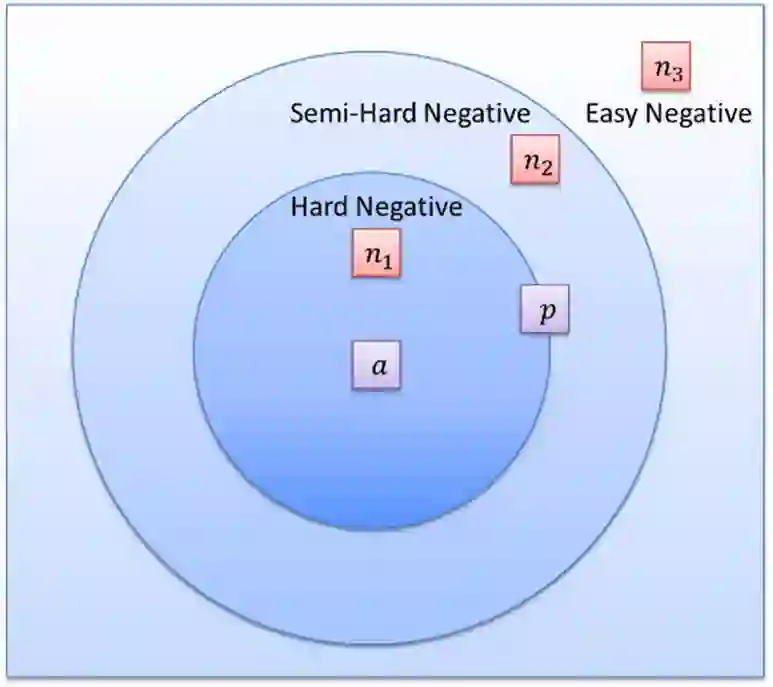

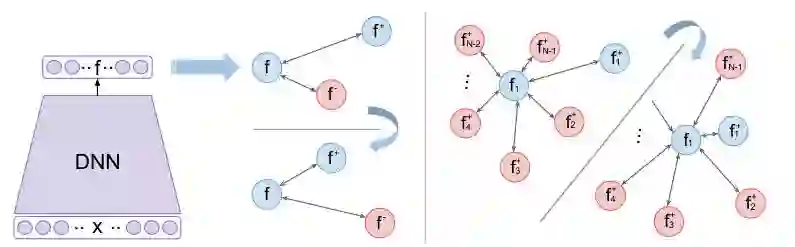

Metric learning algorithms aim to learn a distance function that brings semantically similar data items together and keeps dissimilar ones apart. Traditional Mahalanobis distance learning is equivalent to finding a linear projection. In contrast, Deep Metric Learning (DML) methods automatically extract features from data and learn a non-linear transformation from the input space to a semantic embedding space. Many recent DML methods focus on enhancing the discriminative power of the learned metric through novel sampling strategies or loss functions. This approach is very helpful when the training and test examples come from the same set of categories. However, it is less effective in many applications of DML, such as image retrieval and person re-identification. Here, DML should learn general semantic concepts from the observed classes and use them to rank or identify objects from unseen categories. Neglecting the generalization ability of the learned representation and emphasizing only a more discriminative embedding on the observed classes may lead to overfitting. To address this limitation, we propose a framework that enhances the generalization power of existing DML methods in a Zero-Shot Learning (ZSL) setting by learning a general yet discriminative representation and employing a class adversarial neural network. To learn a more general representation, we employ feature maps from intermediate layers of a deep neural network and enhance their discriminative power through an attention mechanism. In addition, a class adversarial network forces the deep model to seek class-invariant features for the DML task. We evaluate our work on widely used machine vision datasets in a ZSL setting.
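The statement that Mahalanobis distance learning amounts to finding a linear projection can be checked numerically. The sketch below is illustrative only and is not taken from the paper: it assumes the learned matrix factors as M = LᵀL, and the projection `L` and the points `x` and `y` are hypothetical values chosen for the example. Under that assumption, the Mahalanobis distance under M equals the Euclidean distance between the projected points Lx and Ly.

```python
import math

def matvec(A, v):
    """Multiply matrix A (a list of rows) by vector v."""
    return [sum(a_ij * v_j for a_ij, v_j in zip(row, v)) for row in A]

def gram(L):
    """Return M = L^T L, which is symmetric positive semidefinite."""
    k, d = len(L), len(L[0])
    return [[sum(L[r][i] * L[r][j] for r in range(k)) for j in range(d)]
            for i in range(d)]

def mahalanobis(x, y, M):
    """Compute sqrt((x - y)^T M (x - y))."""
    diff = [a - b for a, b in zip(x, y)]
    m_diff = matvec(M, diff)
    return math.sqrt(sum(d_i * m_i for d_i, m_i in zip(diff, m_diff)))

def projected_euclidean(x, y, L):
    """Euclidean distance between the linear projections L x and L y."""
    return math.dist(matvec(L, x), matvec(L, y))

# Hypothetical 2x3 projection and two 3-d points, chosen only for illustration.
L = [[1.0, 0.5, 0.0],
     [0.0, 2.0, -1.0]]
x = [1.0, 2.0, 3.0]
y = [0.0, -1.0, 1.0]

d_mahalanobis = mahalanobis(x, y, gram(L))
d_projected = projected_euclidean(x, y, L)
print(d_mahalanobis, d_projected)  # the two values agree up to rounding
```

A DML model replaces the fixed linear map `L` with a learned non-linear network, while the distance is still measured in the embedding space.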
