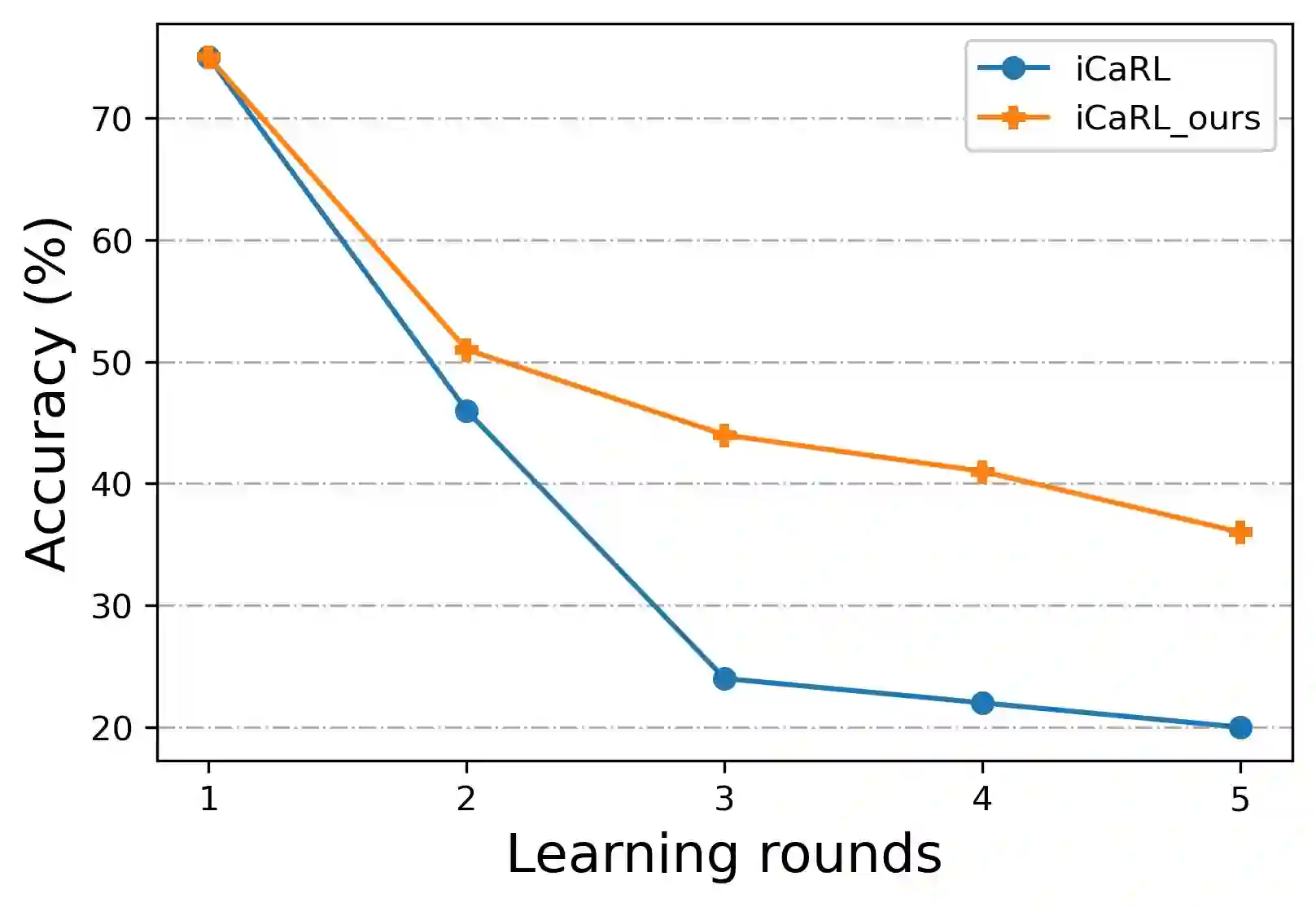

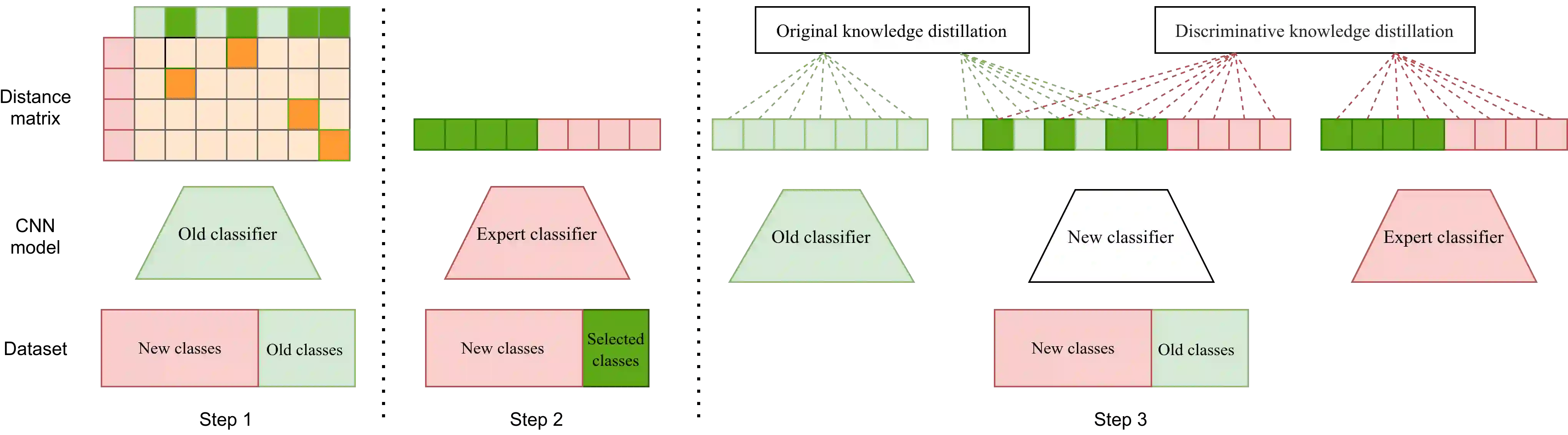

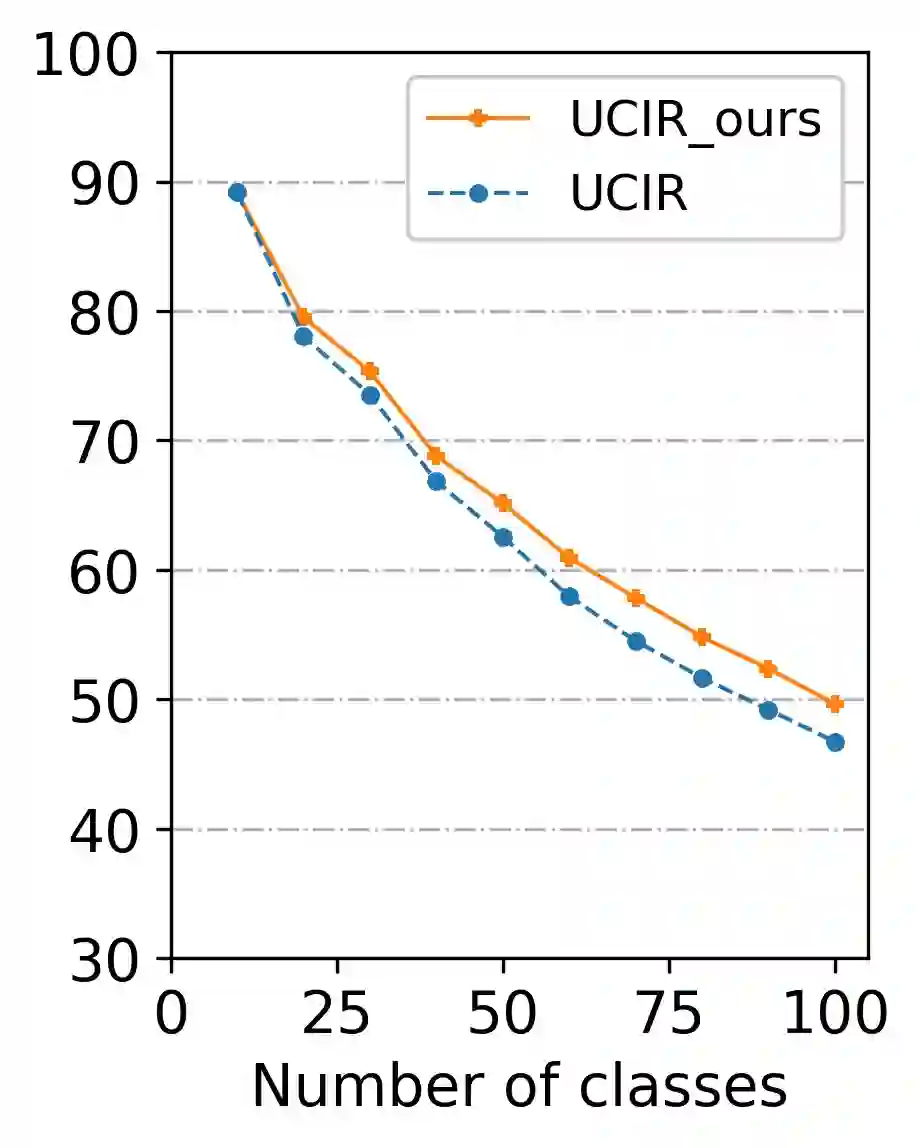

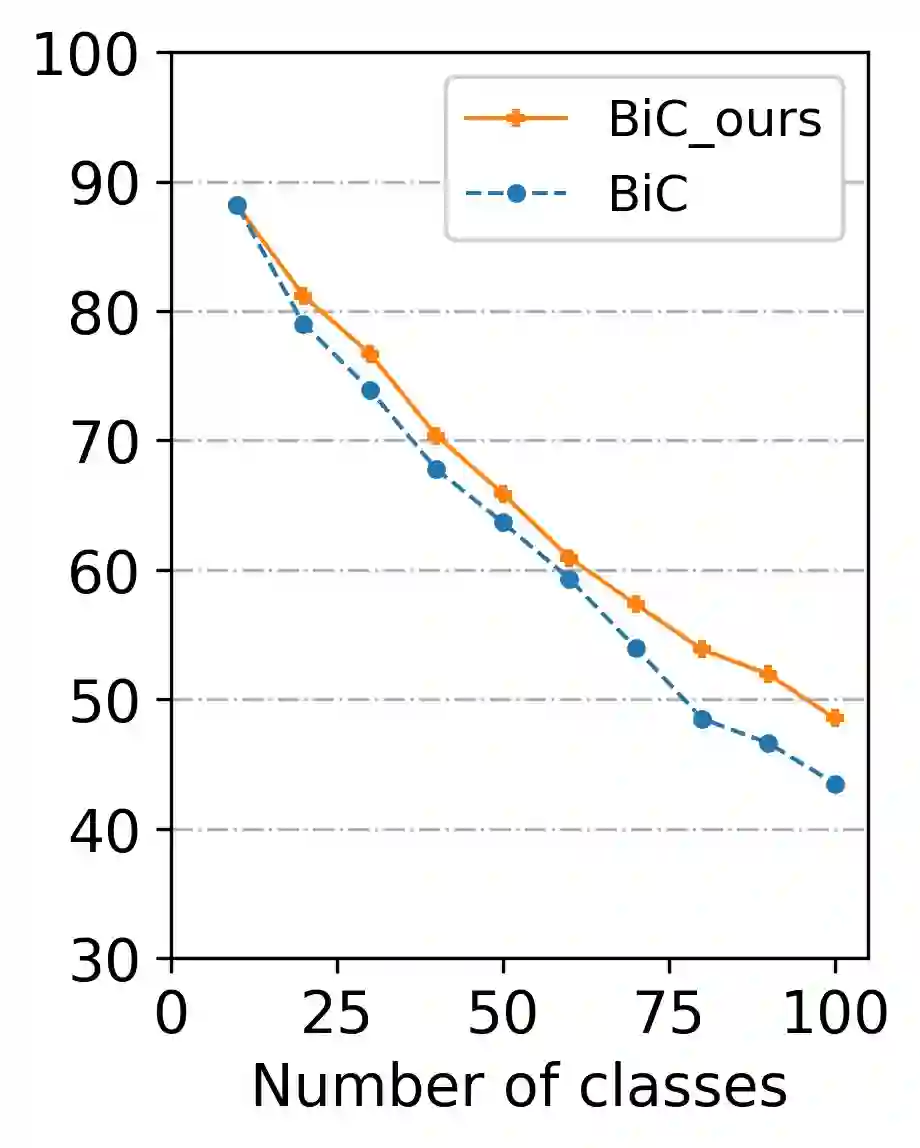

Successful continual learning of new knowledge would enable intelligent systems to recognize more and more classes of objects. However, current intelligent systems often fail to correctly recognize previously learned classes of objects after being updated to learn new classes. It is widely believed that this degraded performance is due solely to catastrophic forgetting of previously learned knowledge. In this study, we argue that class confusion may also play a role in degrading classification performance during continual learning: high similarity between new classes and any previously learned classes can cause the classifier to misrecognize those old classes, even if the knowledge of the old classes has not been forgotten. To alleviate class confusion, we propose a discriminative distillation strategy that helps the classifier learn the discriminative features between confusing classes during continual learning. Experiments on multiple natural image classification tasks indicate that the proposed distillation strategy, when combined with existing methods, further improves continual learning.
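
The abstract does not state the loss used by the discriminative distillation strategy. As background only, here is a minimal sketch of the widely used temperature-scaled knowledge-distillation term added to a cross-entropy loss, written in plain Python for a single example. The optional `class_subset` argument is a hypothetical illustration of restricting distillation to a group of mutually confusing classes; it is an assumption, not the authors' method. The names `kd_loss`, `total_loss`, `alpha`, and `temperature` are likewise illustrative.

```python
import math
from typing import List, Optional, Sequence


def log_softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable log-softmax of logits divided by a temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    lse = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - lse for z in scaled]


def kd_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    temperature: float = 2.0,
    class_subset: Optional[Sequence[int]] = None,
) -> float:
    """Temperature-scaled KL(teacher || student), multiplied by T^2.

    class_subset is HYPOTHETICAL: if given, only those class indices are
    compared (e.g. a set of mutually confusing old and new classes).
    """
    if class_subset is not None:
        student_logits = [student_logits[i] for i in class_subset]
        teacher_logits = [teacher_logits[i] for i in class_subset]
    log_pt = log_softmax(teacher_logits, temperature)
    log_ps = log_softmax(student_logits, temperature)
    # KL(p_t || p_s) = sum_i p_t[i] * (log p_t[i] - log p_s[i])
    kl = sum(math.exp(lt) * (lt - ls) for lt, ls in zip(log_pt, log_ps))
    return temperature ** 2 * kl


def total_loss(
    student_logits: Sequence[float],
    label: int,
    teacher_logits: Sequence[float],
    alpha: float = 0.5,
    temperature: float = 2.0,
    class_subset: Optional[Sequence[int]] = None,
) -> float:
    """Cross-entropy on the true label plus a weighted distillation term."""
    ce = -log_softmax(student_logits)[label]
    return ce + alpha * kd_loss(student_logits, teacher_logits, temperature, class_subset)
```

Passing identical teacher and student logits makes the distillation term zero, so `total_loss` reduces to plain cross-entropy. The `temperature ** 2` factor is the usual convention for keeping the distillation term's gradient magnitude comparable across temperatures.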

