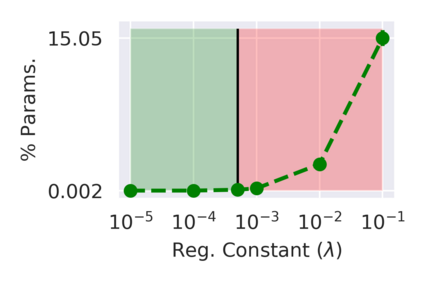

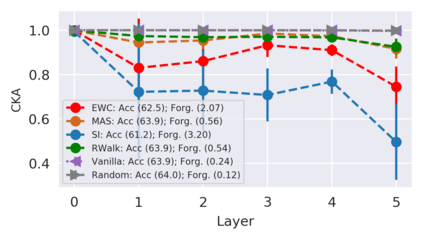

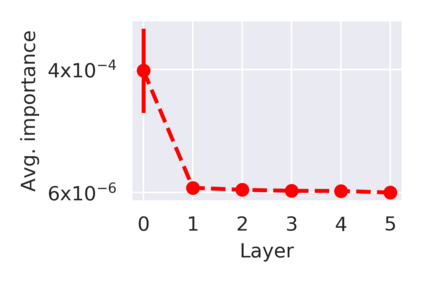

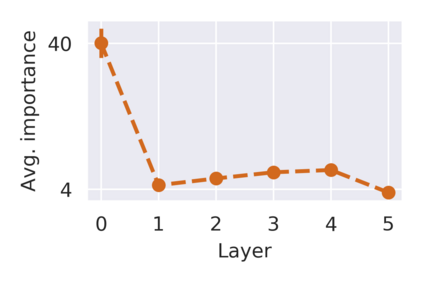

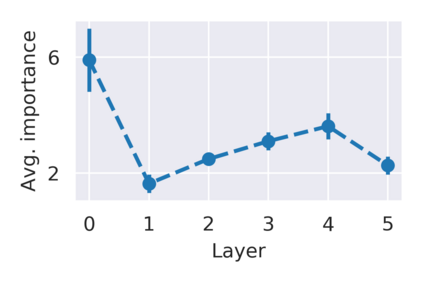

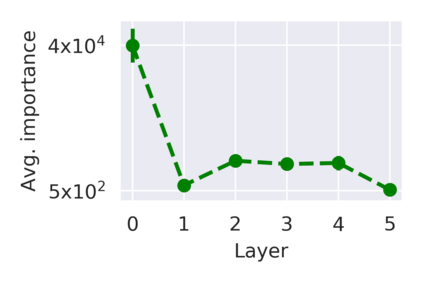

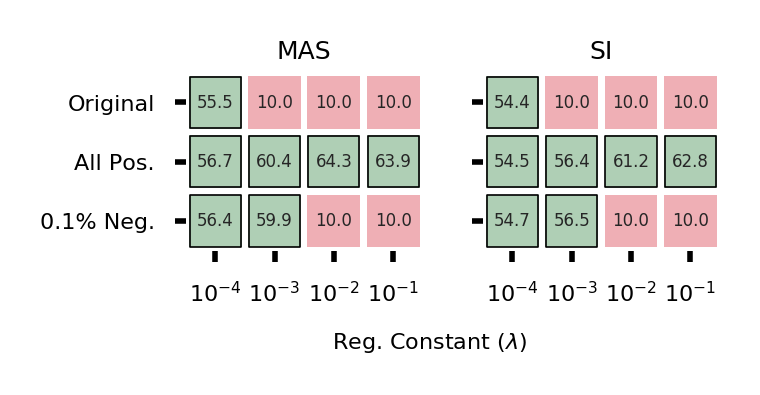

Quadratic regularizers are often used to mitigate catastrophic forgetting in deep neural networks (DNNs), but they are unable to compete with recent continual learning methods. To understand this behavior, we analyze parameter updates under quadratic regularization and demonstrate that such regularizers prevent forgetting of past tasks by implicitly performing a weighted average between current and previous values of model parameters. Our analysis shows that the inferior performance of quadratic regularizers arises from (a) the dependence of the weighted averaging on training hyperparameters, which often results in unstable training, and (b) the assignment of lower importance to deeper layers, which are generally the cause of forgetting in DNNs. To address these limitations, we propose Explicit Movement Regularization (EMR), a continual learning algorithm that modifies quadratic regularization to remove the dependence of weighted averaging on training hyperparameters and uses a relative measure of importance to avoid the problems caused by assigning lower importance to deeper layers. Compared to quadratic regularization, EMR achieves 6.2% higher average accuracy and 4.5% lower average forgetting.
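The weighted-averaging claim can be illustrated with a minimal sketch, assuming a generic penalty of the form (lam / 2) * sum(importance * (theta - theta_prev) ** 2) and plain gradient descent. The function names, the symbols `lr`, `lam`, and `importance`, and the random inputs are all hypothetical choices made for illustration; this is not the paper's derivation and it is not EMR. It only checks numerically that one gradient step on the penalized loss equals a per-parameter weighted average of the current and previous parameters, followed by the usual task-gradient step.

```python
import numpy as np


def quadratic_penalty_grad(theta, theta_prev, importance, lam):
    """Gradient of (lam / 2) * sum(importance * (theta - theta_prev) ** 2)."""
    return lam * importance * (theta - theta_prev)


def sgd_step(theta, theta_prev, importance, task_grad, lr, lam):
    """One plain gradient step on task loss plus the quadratic penalty."""
    penalty_grad = quadratic_penalty_grad(theta, theta_prev, importance, lam)
    return theta - lr * (task_grad + penalty_grad)


def averaged_step(theta, theta_prev, importance, task_grad, lr, lam):
    """The same update, rewritten as a weighted average plus the task step."""
    # Per-parameter averaging weight (assumed notation, not from the paper).
    alpha = lr * lam * importance
    return (1.0 - alpha) * theta + alpha * theta_prev - lr * task_grad


# Hypothetical toy values; any shapes that broadcast would work.
rng = np.random.default_rng(0)
theta = rng.normal(size=5)                  # current parameters
theta_prev = rng.normal(size=5)             # parameters after the previous task
importance = rng.uniform(0.0, 1.0, size=5)  # per-parameter importance
task_grad = rng.normal(size=5)              # gradient of the current task loss

a = sgd_step(theta, theta_prev, importance, task_grad, lr=0.1, lam=2.0)
b = averaged_step(theta, theta_prev, importance, task_grad, lr=0.1, lam=2.0)
print(np.allclose(a, b))
```

In this sketch the averaging weight is `lr * lam * importance`, so it changes whenever the learning rate or the regularization strength changes, which is one way to read the hyperparameter dependence described above. If that product exceeds 1 for some parameter, the update is no longer a convex combination of the two parameter values.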
