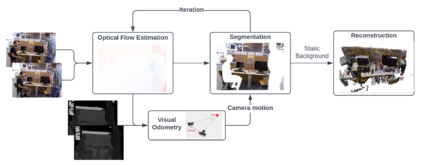

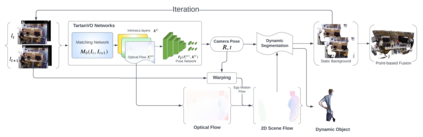

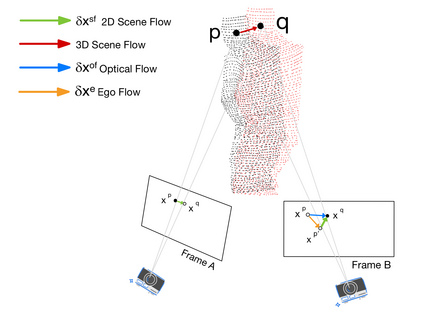

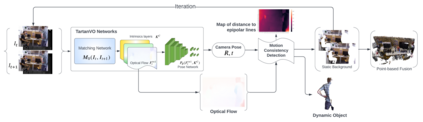

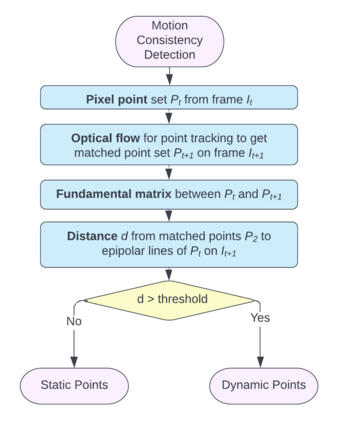

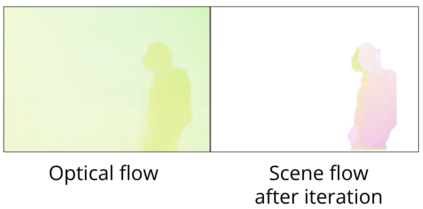

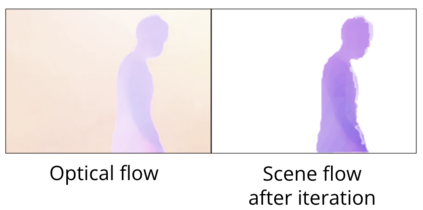

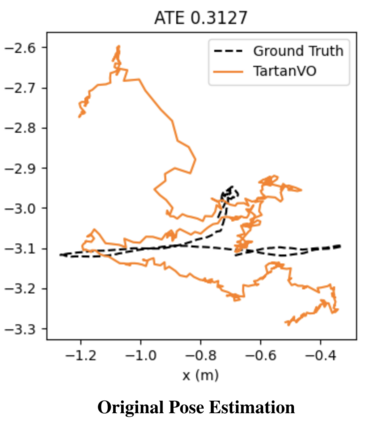

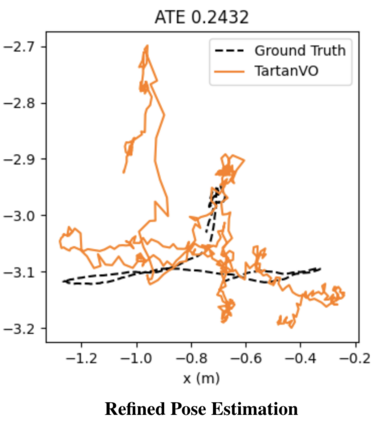

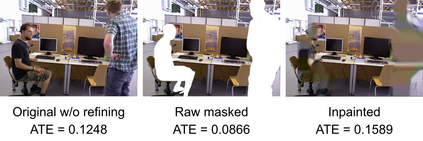

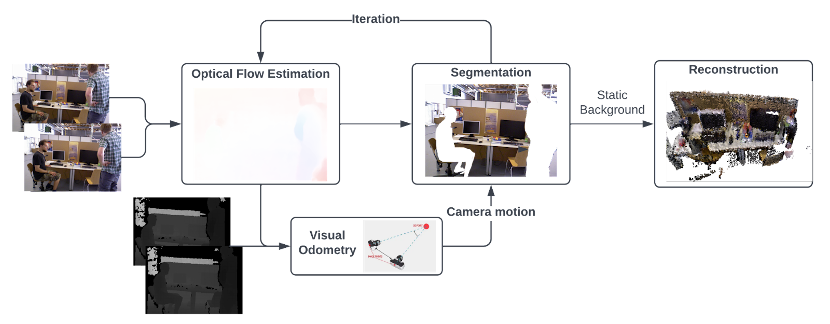

We propose a dense dynamic RGB-D SLAM pipeline based on a learning-based visual odometry, TartanVO. TartanVO, like other direct methods rather than feature-based, estimates camera pose through dense optical flow, which only applies to static scenes and disregards dynamic objects. Due to the color constancy assumption, optical flow is not able to differentiate between dynamic and static pixels. Therefore, to reconstruct a static map through such direct methods, our pipeline resolves dynamic/static segmentation by leveraging the optical flow output, and only fuse static points into the map. Moreover, we rerender the input frames such that the dynamic pixels are removed and iteratively pass them back into the visual odometry to refine the pose estimate.

翻译:我们建议采用基于学习的视觉观察测量法,即TartanVo,建立密集的动态RGB-D SLAM管道。TartanVo与其他直接方法一样,而不是基于地貌的方法,估计摄影机通过密集光学流形成,这种光学流只适用于静态场景,而无视动态物体。由于颜色恒定假设,光学流无法区分动态像素和静态像素。因此,为了通过这种直接方法重建静态地图,我们的管道通过利用光学流输出解决动态/静态分离问题,并且只将静态点连接到地图中。此外,我们重塑了输入框架,这样能将动态像素移走,并反复将它们传送到视觉视像仪中,以完善表面估计。