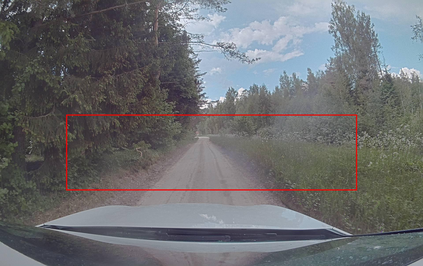

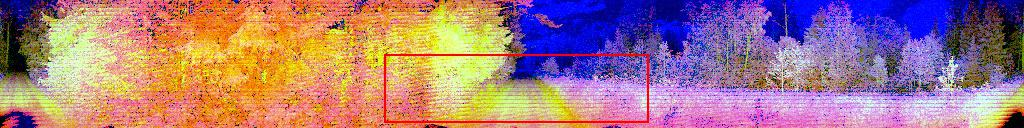

The core task of any autonomous driving system is to transform sensory inputs into driving commands. In end-to-end driving, this is achieved via a neural network, with one or more cameras as the most commonly used input and a low-level driving command (e.g., steering angle) as output. However, depth sensing has been shown in simulation to make the end-to-end driving task easier. On a real car, combining depth and visual information can be challenging because good spatial and temporal alignment of the sensors is difficult to obtain. To alleviate these alignment problems, Ouster LiDARs can output surround-view LiDAR-images with depth, intensity, and ambient radiation channels. These measurements originate from the same sensor, so they are perfectly aligned in time and space. We demonstrate that such LiDAR-images are sufficient for the real-car road-following task and that models using them perform at least as well as camera-based models in the tested conditions, with the difference growing when the models must generalize to new weather conditions. In a second line of study, we show that the temporal smoothness of off-policy prediction sequences correlates with actual on-policy driving ability as well as the commonly used mean absolute error does.
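
Because all three channels come from a single sensor and a single scan, turning them into a network input needs no extrinsic calibration or timestamp matching: pixel (r, c) refers to the same laser ray in every channel, so the channels can simply be stacked. The sketch below illustrates this with NumPy. It is not the authors' preprocessing; the function name `make_lidar_image`, the assumption that each channel arrives as an H×W array whose columns correspond to azimuth angles, the 50 m depth clip, and the per-scan max scaling are all hypothetical choices.

```python
import numpy as np


def make_lidar_image(depth, intensity, ambient, max_range_m=50.0):
    """Stack depth, intensity and ambient channels into one H x W x 3 image.

    Assumption (hypothetical): each input is a 2-D array of shape (H, W) in
    which rows are laser beams and columns are azimuth angles. Since all three
    channels come from the same sensor and scan, no cross-sensor alignment is done.
    """
    depth = np.asarray(depth, dtype=np.float32)
    intensity = np.asarray(intensity, dtype=np.float32)
    ambient = np.asarray(ambient, dtype=np.float32)
    if depth.ndim != 2 or not (depth.shape == intensity.shape == ambient.shape):
        raise ValueError("all channels must be 2-D arrays of the same shape")

    # Hypothetical normalization: clip depth to a fixed range and scale to [0, 1].
    d = np.clip(depth, 0.0, max_range_m) / max_range_m
    # Hypothetical normalization: divide by the per-scan maximum,
    # guarding against division by zero on an all-zero channel.
    i = intensity / max(float(intensity.max()), 1e-6)
    a = ambient / max(float(ambient.max()), 1e-6)

    # Channel-last layout, like an RGB camera frame.
    return np.stack([d, i, a], axis=-1)
```

For a hypothetical 128-beam, 1024-column scan, the result is a `(128, 1024, 3)` float array, so it could be fed to a standard image CNN in the same place a camera frame would go.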

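
The abstract's second finding compares two off-policy metrics computed on recorded driving data. The sketch below assumes one scalar steering prediction per frame. Mean absolute error follows its standard definition, whereas `temporal_roughness` (the mean absolute change between consecutive predictions, lower meaning smoother) is only one plausible way to quantify temporal smoothness and is an assumption, not necessarily the paper's definition. The `pearson` helper shows how either metric could be correlated with a per-model on-policy driving score; all three function names are hypothetical.

```python
import numpy as np


def mean_absolute_error(pred, target):
    """Off-policy MAE: mean |prediction - recorded steering| over a sequence."""
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    return float(np.mean(np.abs(pred - target)))


def temporal_roughness(pred):
    """Hypothetical smoothness proxy: mean |difference between consecutive predictions|.

    Lower values mean a smoother prediction sequence. This definition is an
    illustrative assumption, not necessarily the metric used in the paper.
    """
    pred = np.asarray(pred, dtype=float)
    if pred.size < 2:
        return 0.0  # a single prediction has no frame-to-frame change
    return float(np.mean(np.abs(np.diff(pred))))


def pearson(metric_per_model, on_policy_score_per_model):
    """Pearson correlation between an off-policy metric and on-policy scores across models."""
    return float(np.corrcoef(metric_per_model, on_policy_score_per_model)[0, 1])
```

For an all-zero target, a constant prediction of 0.1 and an alternating ±0.1 prediction have the same MAE (0.1), but their roughness values are 0.0 and 0.2, so a smoothness metric can separate models that MAE scores equally.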