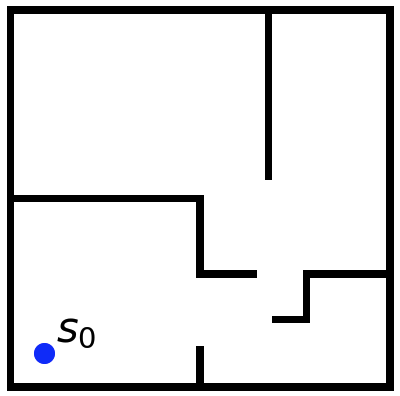

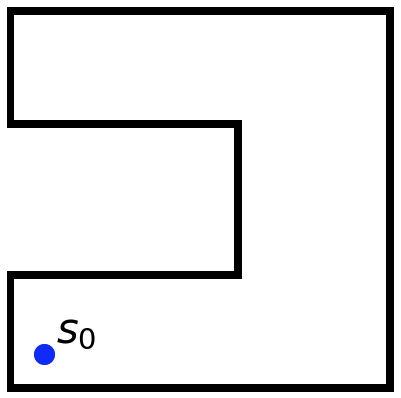

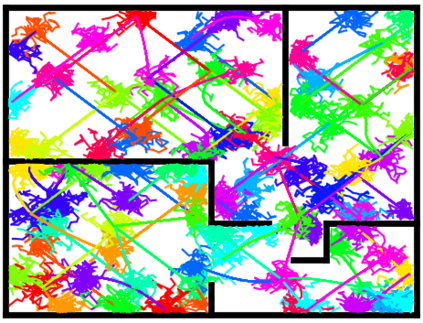

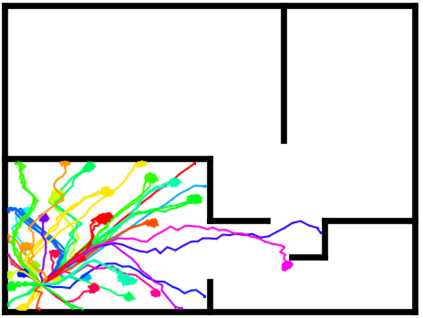

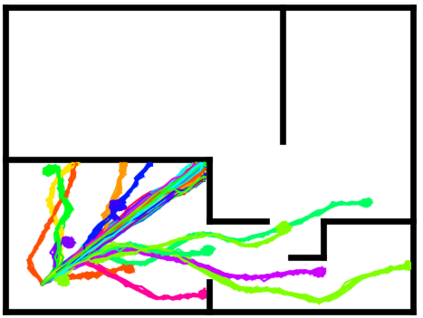

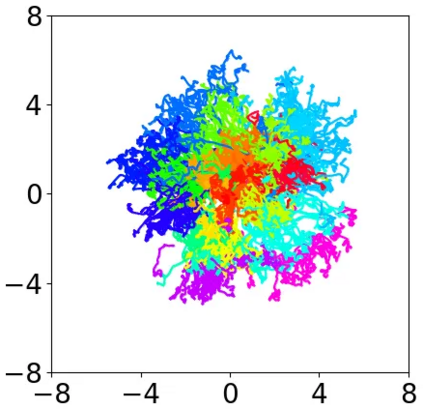

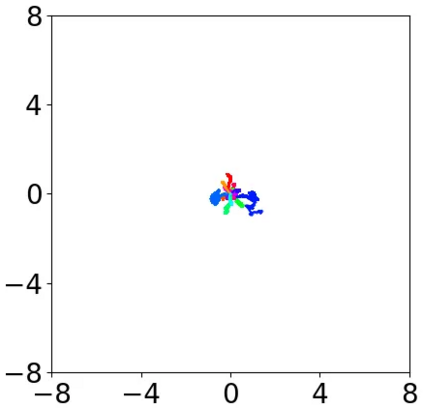

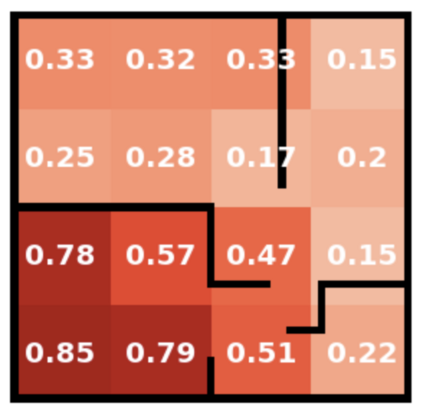

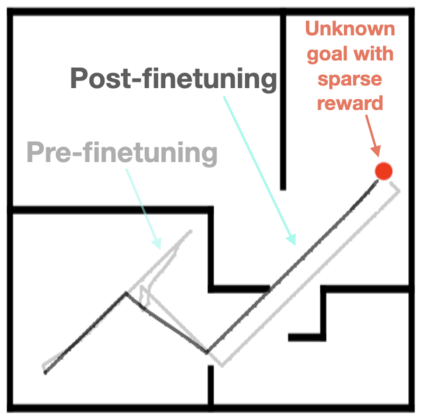

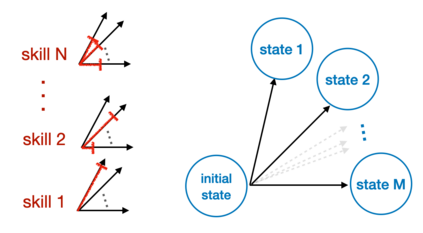

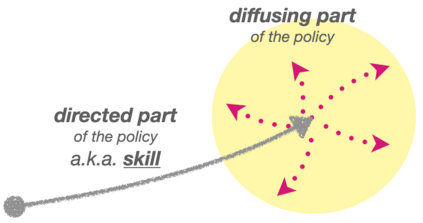

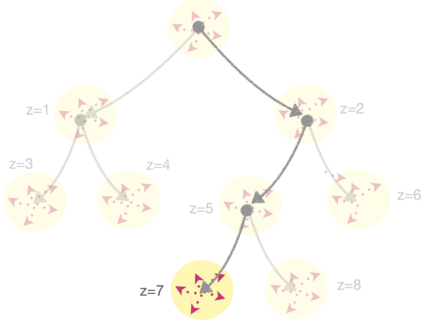

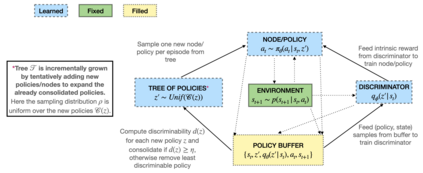

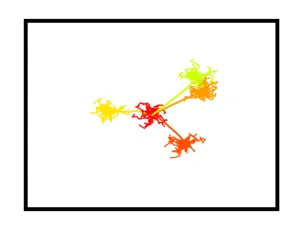

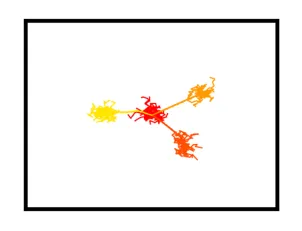

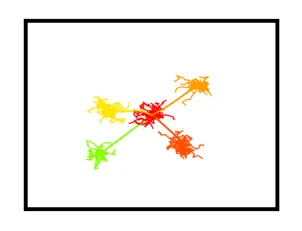

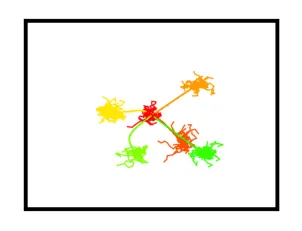

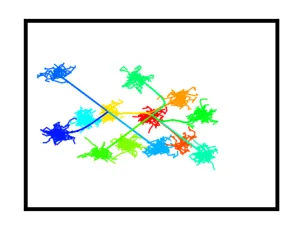

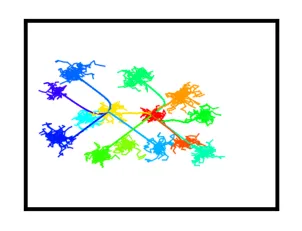

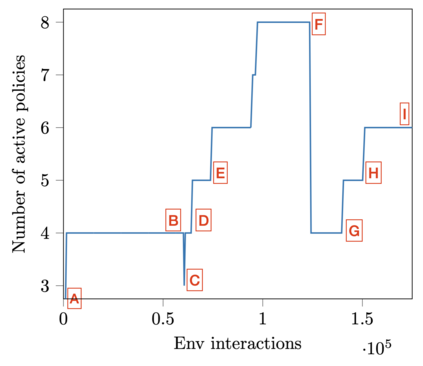

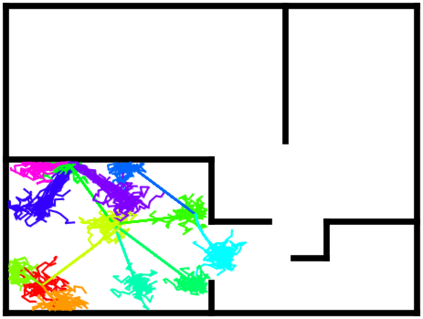

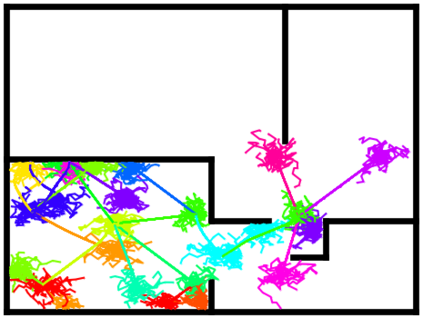

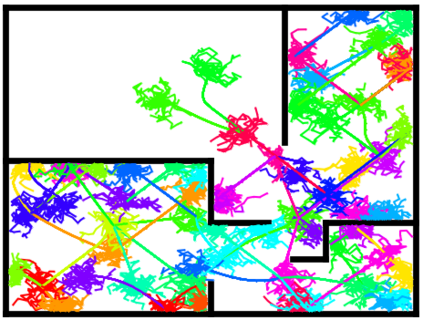

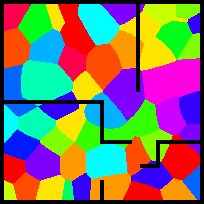

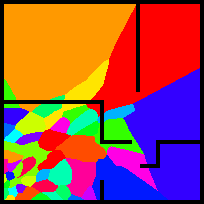

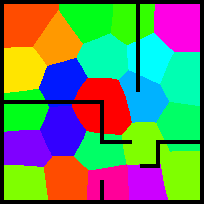

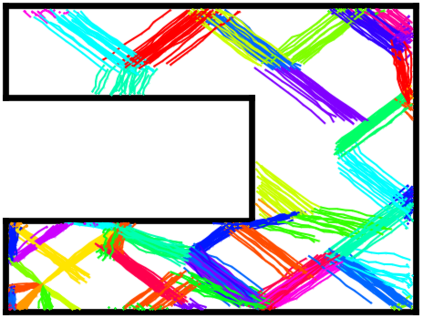

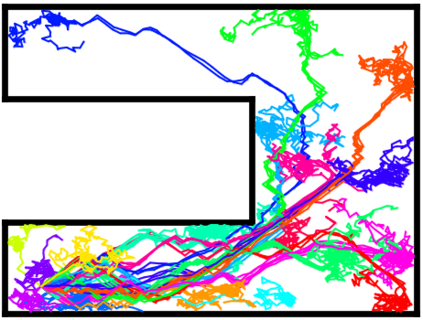

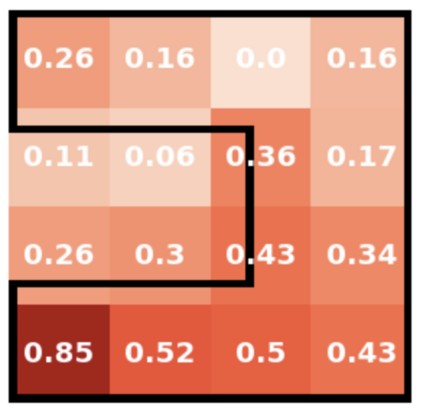

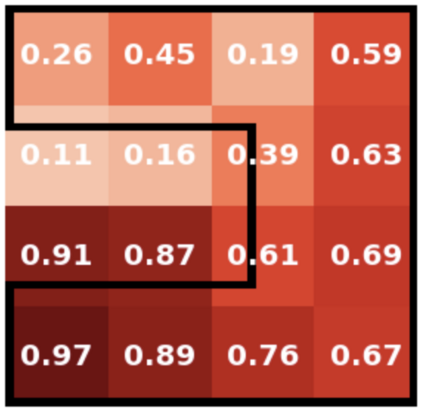

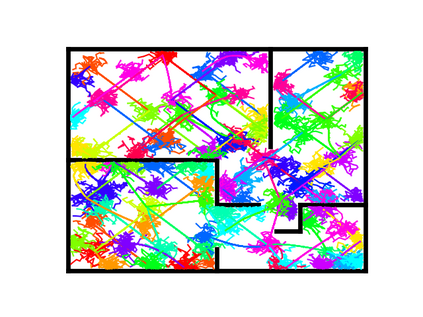

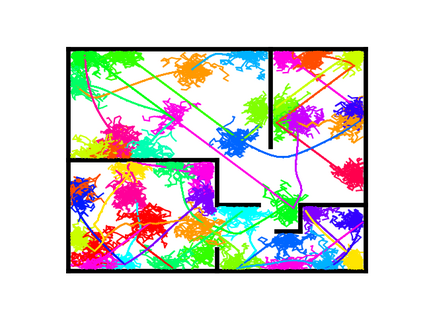

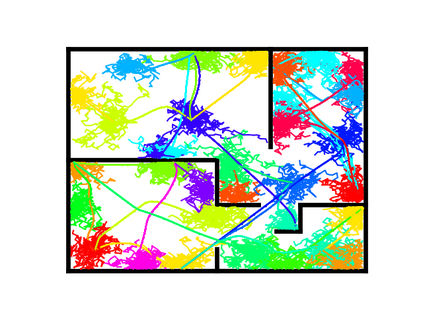

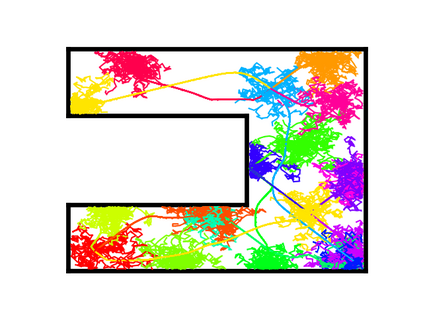

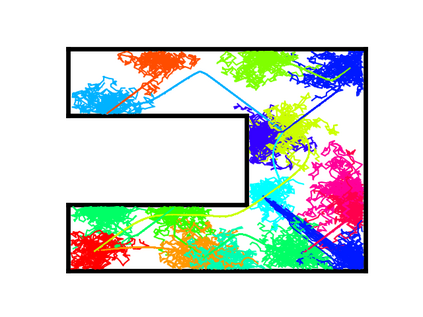

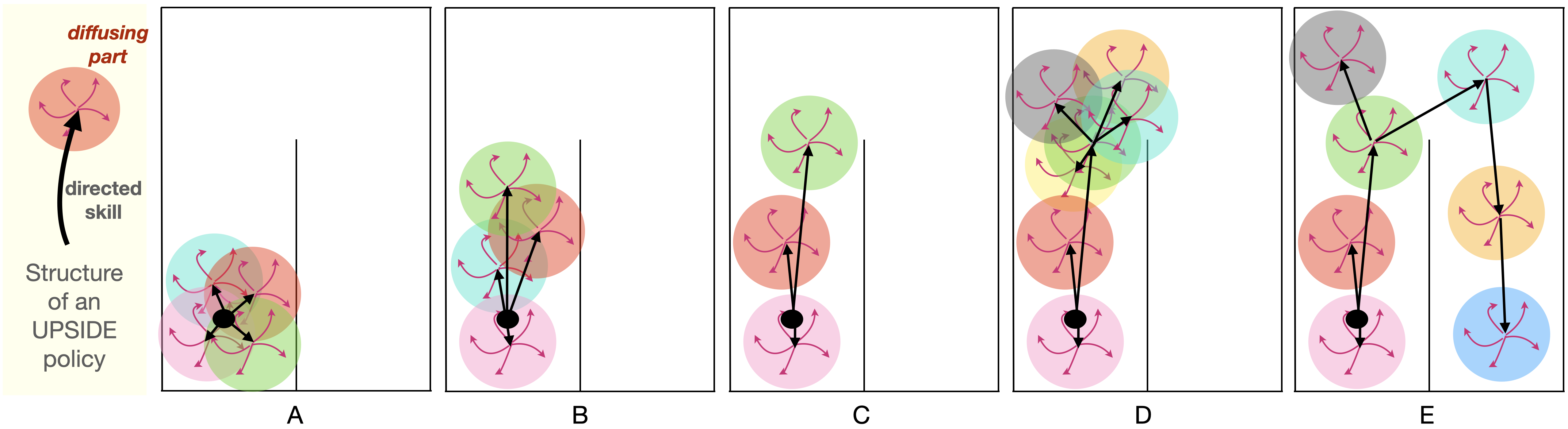

Learning meaningful behaviors in the absence of reward is a difficult problem in reinforcement learning. A desirable yet challenging unsupervised objective is to learn a set of diverse skills that provide thorough coverage of the state space while being directed, i.e., reliably reaching distinct regions of the environment. In this paper, we build on the mutual information framework for skill discovery and introduce UPSIDE, which addresses the coverage-directedness trade-off in three ways: 1) we design policies with a decoupled structure: a directed skill, trained to reach a specific region, followed by a diffusing part that induces local coverage; 2) we optimize policies by maximizing their number under the constraint that each of them reaches a distinct region of the environment (i.e., the policies are sufficiently discriminable), and we prove that this constrained objective is a lower bound on the original mutual information objective; 3) finally, we compose the learned directed skills into a growing tree that adaptively covers the environment. We show in several navigation and control environments that the skills learned by UPSIDE solve sparse-reward downstream tasks better than existing baselines.
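The constrained objective in point 2) can be illustrated on a toy problem. The sketch below is a minimal illustration under our own assumptions, not the UPSIDE algorithm: states are discrete cells, each skill is summarized by the states its diffusing part visits, the discriminator q(z|s) is the exact Bayes posterior under a uniform prior over skills (UPSIDE learns a discriminator instead), and skills are admitted by a simple greedy rule. The names `discriminability`, `satisfies_constraint`, `greedy_max_skills`, and the threshold `eta` are hypothetical. The sketch also evaluates the standard Barber-Agakov variational bound log N + E[log q(z|s)] on I(S;Z), which shows why many well-separated skills raise the mutual information; the paper's own lower-bound proof may take a different route.

```python
import math
from collections import Counter

# Hypothetical toy setting (an assumption, not UPSIDE's actual setup):
# states are discrete cells, and each skill z is summarized by the list of
# states its diffusing part visited.


def visitation(states):
    """Empirical state distribution p(s|z) of one skill."""
    counts = Counter(states)
    total = len(states)
    return {s: k / total for s, k in counts.items()}


def posterior(skills):
    """Bayes-optimal discriminator q(z|s) under a uniform prior over skills."""
    dists = [visitation(states) for states in skills]
    all_states = set().union(*dists)
    q = {}
    for s in all_states:
        denom = sum(d.get(s, 0.0) for d in dists)
        q[s] = [d.get(s, 0.0) / denom for d in dists]
    return dists, q


def discriminability(skills):
    """For each skill z, the average of q(z|s) over the states z visits."""
    dists, q = posterior(skills)
    return [sum(p * q[s][z] for s, p in d.items()) for z, d in enumerate(dists)]


def mi_lower_bound(skills):
    """Barber-Agakov bound: I(S;Z) >= log N + E[log q(Z|S)], uniform prior over N skills."""
    dists, q = posterior(skills)
    n = len(dists)
    expected_log_q = sum(
        p * math.log(q[s][z]) for z, d in enumerate(dists) for s, p in d.items()
    ) / n
    return math.log(n) + expected_log_q


def satisfies_constraint(skills, eta):
    """Hypothetical constraint: every skill is recognized with average probability >= eta."""
    return min(discriminability(skills)) >= eta


def greedy_max_skills(candidates, eta):
    """Illustrative greedy heuristic: keep a candidate only if the whole set stays discriminable."""
    kept = []
    for skill in candidates:
        if satisfies_constraint(kept + [skill], eta):
            kept.append(skill)
    return kept


if __name__ == "__main__":
    A, B, C, D = [0, 0, 1], [5, 5, 6], [0, 1, 1], [9, 9, 9]
    kept = greedy_max_skills([A, B, C, D], eta=0.9)
    print(len(kept))             # 3: C overlaps with A and is rejected
    print(mi_lower_bound(kept))  # log 3, since the kept skills never share a state
```

Skill C is rejected because it shares states with A: with both in the set, each would be recognized with average probability 5/9, below `eta = 0.9`. Because the posterior here is exact, the bound equals the mutual information of the empirical joint distribution; with a learned discriminator it would only be a lower bound.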

翻译:在缺乏奖励的情况下学习有意义的行为是强化学习的一个困难问题。一个可取和具有挑战性且不受监督的目标,是学习一套全面覆盖国家空间的多种技能,同时指导这些技能,即可靠地到达环境的不同区域。在本文件中,我们以技能发现方面的相互信息框架为基础,并采用UPSIDE, 以下列方式处理覆盖和定向交易:1) 我们设计政策时采用一种分解的定向技能结构,受过培训,能够到达特定区域,然后传播部分,引起局部覆盖。2) 我们优化政策,在限制下,最大限度地利用其数量,使每个区域都到达环境的不同区域(即它们足够分散),并证明这与最初的相互信息目标的联系较小。3)最后,我们把学到的定向技能纳入一个不断增长的、适应性地覆盖环境的树中。我们在若干导航和控制环境中说明,UPSIDE所学的技能如何比现有基线更好地解决分散的下游任务。