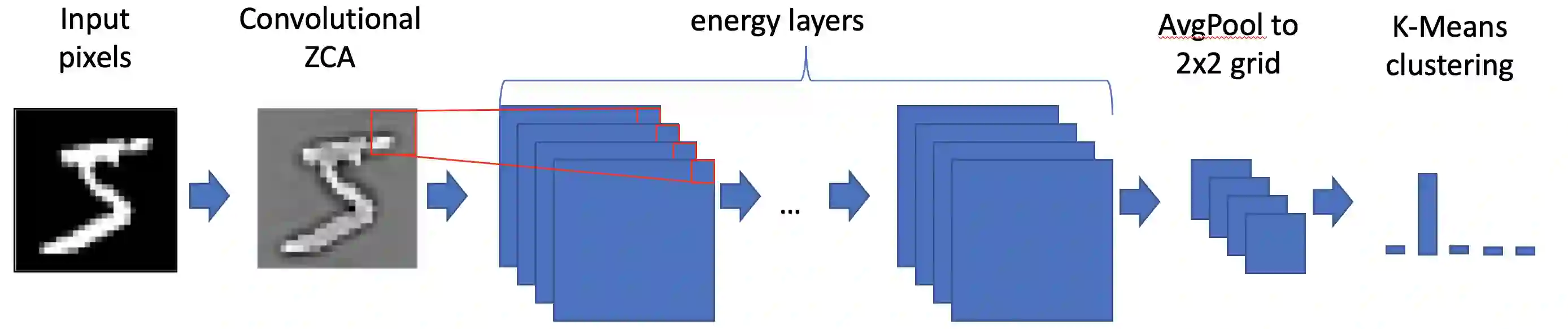

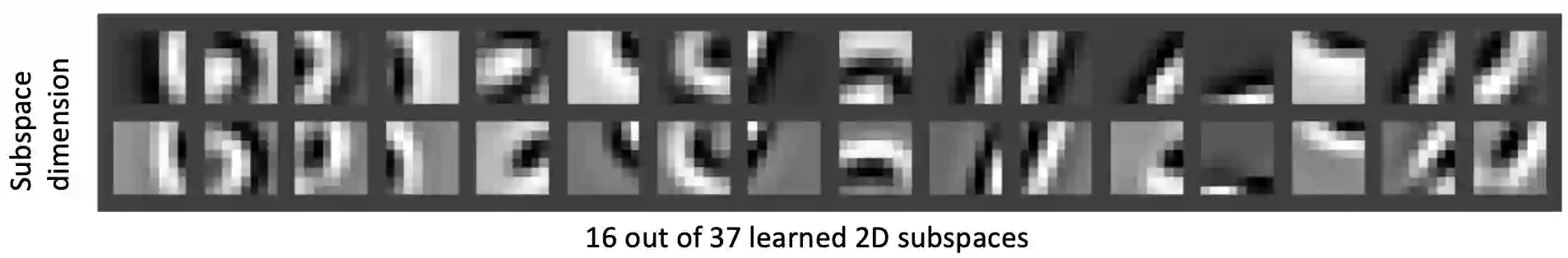

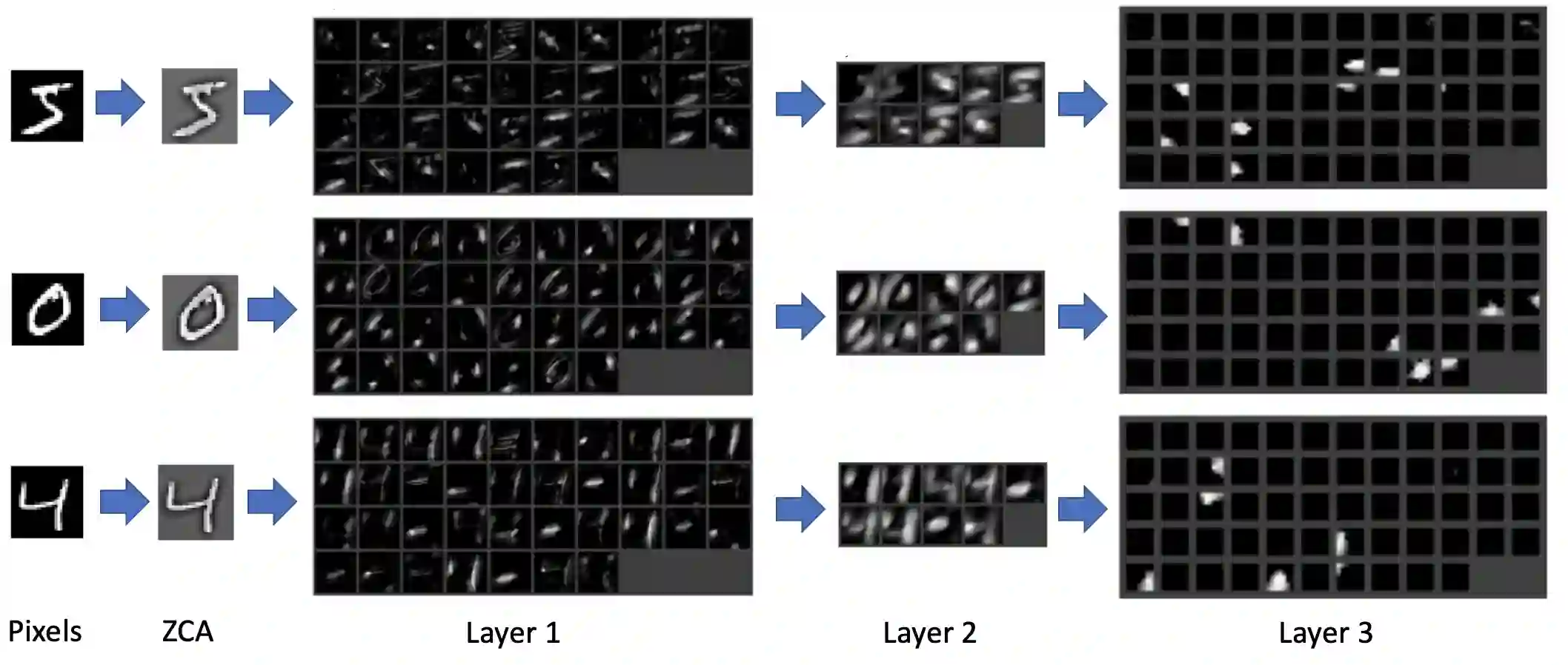

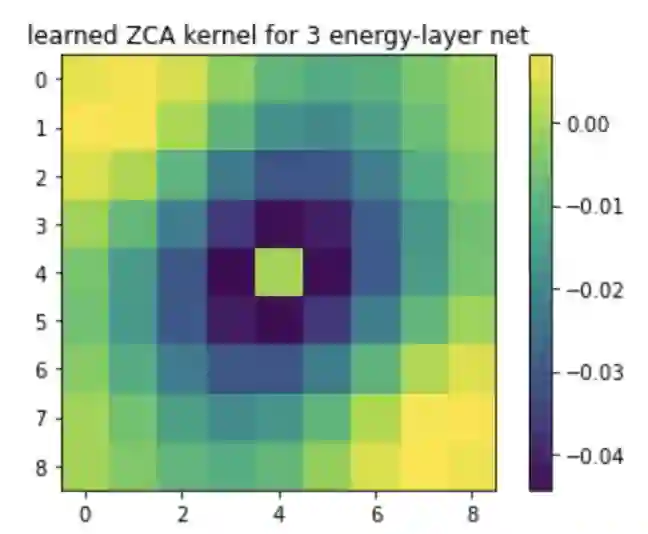

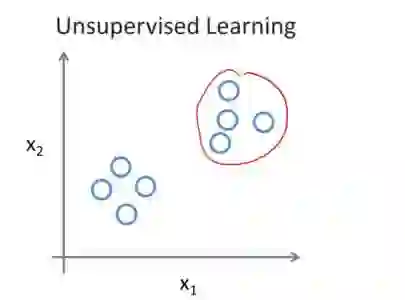

Stacked unsupervised learning (SUL) seems more biologically plausible than backpropagation, because learning is local to each layer. But SUL has fallen far short of backpropagation in practical applications, undermining the idea that SUL can explain how brains learn. Here we show an SUL algorithm that performs completely unsupervised clustering of MNIST digits with accuracy comparable to that of unsupervised algorithms based on backpropagation. Our algorithm is outperformed only by self-supervised methods that require augmenting the training data with geometric distortions. The only prior knowledge in our unsupervised algorithm is implicit in the network architecture. Multiple convolutional "energy layers" contain a sum-of-squares nonlinearity, inspired by "energy models" of primary visual cortex. Convolutional kernels are learned with a fast minibatch implementation of the K-Subspaces algorithm. High accuracy requires preprocessing with an initial whitening layer, representations that are less sparse during inference than during learning, and rescaling for gain control. The hyperparameters of the network architecture are found by supervised meta-learning, which optimizes unsupervised clustering accuracy. We regard such dependence of unsupervised learning on prior knowledge implicit in network architecture as biologically plausible, and analogous to the dependence of brain architecture on evolutionary history.
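To make the sum-of-squares nonlinearity and the K-Subspaces step concrete, here is a minimal NumPy sketch. It is not the authors' implementation: it assumes linear subspaces through the origin, runs plain full-batch K-Subspaces rather than the fast minibatch variant the abstract mentions, and works on flat vectors instead of convolutional patches. The names `subspace_energy` and `k_subspaces` and all parameters are hypothetical, chosen only for illustration.

```python
import numpy as np


def subspace_energy(X, bases):
    """Sum-of-squares response of each sample to each subspace.

    X:     (n, p) data, e.g. flattened (and whitened) image patches
    bases: (k, p, d) one orthonormal basis per subspace
    Returns an (n, k) array: squared norm of the projection of each
    sample onto each subspace.
    """
    # Coefficients of every sample in every subspace basis.
    coeffs = np.einsum("np,kpd->nkd", X, bases)
    # "Energy" = sum of squared coefficients within a subspace.
    return np.sum(coeffs ** 2, axis=-1)


def k_subspaces(X, k, d, n_iter=20, seed=0):
    """Plain batch K-Subspaces (an assumption; the paper uses minibatches).

    Alternates between assigning each sample to the subspace that
    captures the most energy and refitting each subspace to its samples.
    """
    rng = np.random.default_rng(seed)
    n, p = X.shape
    # Random orthonormal initialisation via reduced QR.
    bases = np.stack(
        [np.linalg.qr(rng.standard_normal((p, d)))[0] for _ in range(k)]
    )
    for _ in range(n_iter):
        labels = np.argmax(subspace_energy(X, bases), axis=1)
        for j in range(k):
            Xj = X[labels == j]
            if len(Xj) < d:
                continue  # too few samples: keep the previous basis
            # Top-d right singular vectors span the best-fit
            # d-dimensional subspace through the origin.
            _, _, Vt = np.linalg.svd(Xj, full_matrices=False)
            bases[j] = Vt[:d].T
    labels = np.argmax(subspace_energy(X, bases), axis=1)
    return bases, labels
```

In this sketch the same `subspace_energy` function serves both as the assignment criterion during learning and as the layer's nonlinear output. Learning uses a hard winner-take-all assignment, whereas an inference pass could keep several of the largest energies per sample, which is one way to read the abstract's remark that representations are less sparse during inference than during learning.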
