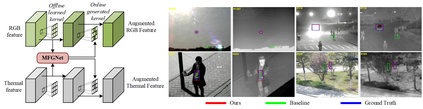

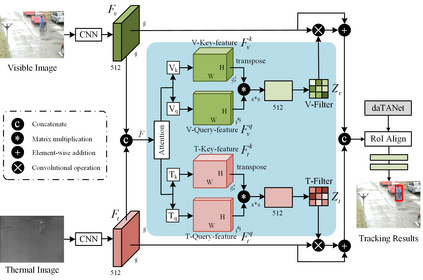

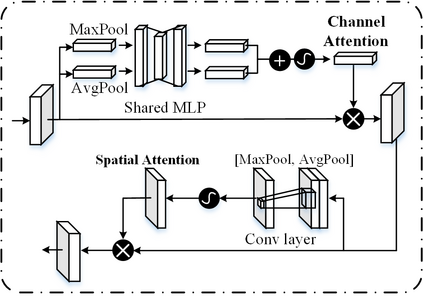

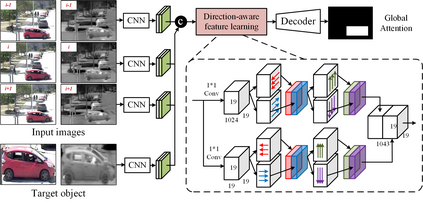

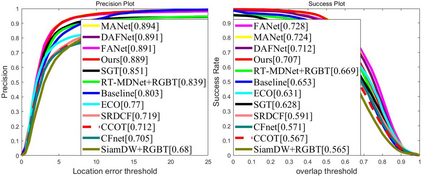

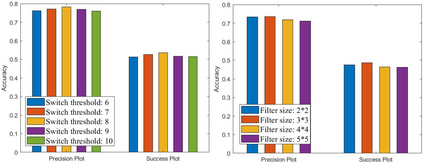

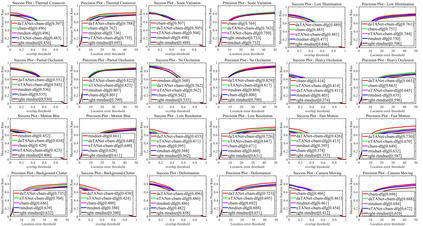

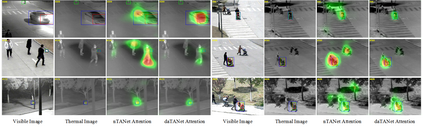

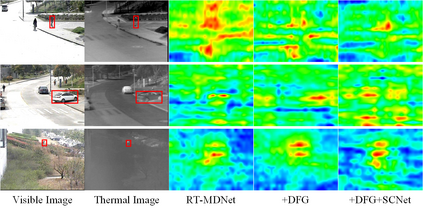

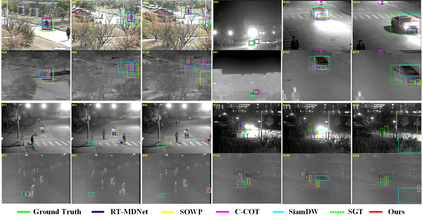

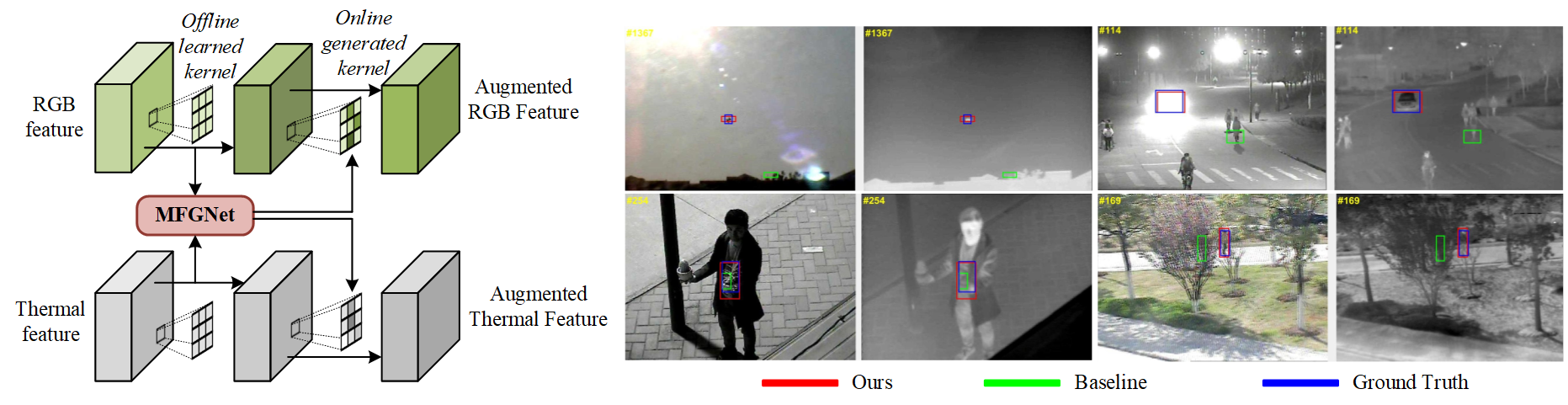

Many RGB-T trackers attempt to obtain robust feature representations by using an adaptive weighting scheme (or attention mechanism). Unlike these works, we propose a new dynamic modality-aware filter generation module (named MFGNet) that boosts message communication between visible and thermal data by adaptively adjusting the convolutional kernels for the various input images encountered in practical tracking. Given an image pair as input, we first encode the features of each image with the backbone network. Then, we concatenate these feature maps and generate dynamic modality-aware filters with two independent networks. The visible and thermal filters are used to perform a dynamic convolution on their corresponding input feature maps. Inspired by residual connections, the generated visible and thermal feature maps are each summed with their input feature maps. The augmented feature maps are fed into the RoI Align module to generate instance-level features for subsequent classification. To address issues caused by heavy occlusion, fast motion, and out-of-view targets, we propose a joint local and global search that exploits a new direction-aware, target-driven attention mechanism. A spatial and temporal recurrent neural network is used to capture the direction-aware context for accurate global attention prediction. Extensive experiments on three large-scale RGB-T tracking benchmark datasets validate the effectiveness of the proposed algorithm. The source code of this paper is available at https://github.com/wangxiao5791509/MFG_RGBT_Tracking_PyTorch.
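The filter-generation step can be sketched in plain Python. This is a minimal illustration under stated assumptions and not the MFGNet implementation from the linked repository. Feature maps are nested lists shaped `[channels][height][width]`. Each of the two filter generators is replaced by a hypothetical fixed linear projection of the globally average-pooled joint features. Each modality then receives one 3×3 kernel per channel, applied depthwise. The names `generate_filters`, `depthwise_conv`, `add_maps`, and `mfg_fuse`, and the `w_rgb`/`w_tir` weight matrices, are illustrative only. The concatenation, the two independent generators, and the residual sum follow the abstract.

```python
from typing import List

# Feature maps are nested lists shaped [channels][height][width].
FeatureMap = List[List[List[float]]]
Kernel = List[List[float]]


def global_avg_pool(feat: FeatureMap) -> List[float]:
    """Average each channel over all of its spatial positions."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feat]


def generate_filters(joint: FeatureMap, weights: List[List[float]], k: int = 3) -> List[Kernel]:
    """Hypothetical filter generator: pooled joint descriptor -> one k x k kernel per channel.

    `weights` holds C * k * k rows, each with len(joint) entries. A real
    generator would be a learned network, not a fixed linear projection.
    """
    desc = global_avg_pool(joint)
    flat = [sum(w * d for w, d in zip(row, desc)) for row in weights]
    n_ch = len(flat) // (k * k)
    return [[flat[c * k * k + i * k: c * k * k + (i + 1) * k] for i in range(k)]
            for c in range(n_ch)]


def depthwise_conv(feat: FeatureMap, kernels: List[Kernel]) -> FeatureMap:
    """Apply each channel's own kernel with zero padding ('same' output size).

    As in deep-learning frameworks, this is cross-correlation (no kernel flip).
    """
    out = []
    for ch, ker in zip(feat, kernels):
        h, w, k = len(ch), len(ch[0]), len(ker)
        r = k // 2
        out_ch = [[0.0] * w for _ in range(h)]
        for y in range(h):
            for x in range(w):
                s = 0.0
                for i in range(k):
                    for j in range(k):
                        yy, xx = y + i - r, x + j - r
                        if 0 <= yy < h and 0 <= xx < w:
                            s += ker[i][j] * ch[yy][xx]
                out_ch[y][x] = s
        out.append(out_ch)
    return out


def add_maps(a: FeatureMap, b: FeatureMap) -> FeatureMap:
    """Element-wise sum of two equally shaped feature maps."""
    return [[[p + q for p, q in zip(ra, rb)] for ra, rb in zip(ca, cb)]
            for ca, cb in zip(a, b)]


def mfg_fuse(rgb: FeatureMap, tir: FeatureMap,
             w_rgb: List[List[float]], w_tir: List[List[float]]):
    """Generate modality-specific dynamic filters from the joint features and apply them."""
    joint = rgb + tir                       # channel-wise concatenation of both modalities
    k_rgb = generate_filters(joint, w_rgb)  # two independent generators
    k_tir = generate_filters(joint, w_tir)
    rgb_out = add_maps(rgb, depthwise_conv(rgb, k_rgb))  # residual connection
    tir_out = add_maps(tir, depthwise_conv(tir, k_tir))
    return rgb_out, tir_out


# Usage: a 1-channel 2x2 visible map whose pooled value is 2.0.
rgb = [[[1.0, 3.0], [1.0, 3.0]]]
tir = [[[0.0, 0.0], [0.0, 0.0]]]
w_rgb = [[0.0, 0.0] for _ in range(9)]
w_rgb[4] = [0.5, 0.0]  # center tap = 0.5 * 2.0 = 1.0, i.e. an identity kernel
w_tir = [[0.0, 0.0] for _ in range(9)]  # all-zero kernel: thermal map passes through
rgb_out, tir_out = mfg_fuse(rgb, tir, w_rgb, w_tir)
print(rgb_out)  # [[[2.0, 6.0], [2.0, 6.0]]]
```

Because the kernels depend on the pooled joint features, the same weights produce different filters for different input pairs. This is the "dynamic" part. With all-zero generator weights, the residual path simply returns the inputs unchanged.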

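The direction-aware context behind the global attention can be illustrated with a toy spatial recurrence. This sketch rests on several assumptions and does not reproduce the paper's network. It uses a single-channel response map and scalar recurrent weights `w` and `u`. It runs four `tanh` sweeps (left to right, right to left, top to bottom, bottom to top), averages their hidden states at each position, and applies a softmax over all positions to form a global attention map. All function names are hypothetical. The temporal recurrence across frames is omitted, and the actual model is learned and multi-channel. The attention peak could then serve as a candidate center for a global search, run alongside the usual local search around the previous target position.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]  # single-channel response map [height][width]


def scan(seq: List[float], w: float, u: float) -> List[float]:
    """One-directional recurrence h_t = tanh(w * h_{t-1} + u * x_t), with h_0 = 0."""
    h, out = 0.0, []
    for x in seq:
        h = math.tanh(w * h + u * x)
        out.append(h)
    return out


def transpose(g: Grid) -> Grid:
    return [list(col) for col in zip(*g)]


def direction_aware_context(g: Grid, w: float = 0.5, u: float = 1.0) -> Grid:
    """Average the hidden states of four directional sweeps at every position."""
    lr = [scan(row, w, u) for row in g]                        # left -> right
    rl = [scan(row[::-1], w, u)[::-1] for row in g]            # right -> left
    cols = transpose(g)
    tb = transpose([scan(c, w, u) for c in cols])              # top -> bottom
    bt = transpose([scan(c[::-1], w, u)[::-1] for c in cols])  # bottom -> top
    return [[(lr[y][x] + rl[y][x] + tb[y][x] + bt[y][x]) / 4.0
             for x in range(len(g[0]))] for y in range(len(g))]


def attention_map(g: Grid) -> Grid:
    """Softmax over all positions of the directional context (a global attention map)."""
    ctx = direction_aware_context(g)
    m = max(max(row) for row in ctx)  # subtract the max for numerical stability
    e = [[math.exp(v - m) for v in row] for row in ctx]
    z = sum(sum(row) for row in e)
    return [[v / z for v in row] for row in e]


def argmax2d(a: Grid) -> Tuple[int, int]:
    """(row, col) of the largest entry, e.g. a candidate center for a global search."""
    return max(((y, x) for y in range(len(a)) for x in range(len(a[0]))),
               key=lambda p: a[p[0]][p[1]])


# Usage: a 3x4 response map with a single strong peak at row 1, column 2.
g = [[0.0, 0.0, 0.0, 0.0],
     [0.0, 0.0, 5.0, 0.0],
     [0.0, 0.0, 0.0, 0.0]]
print(argmax2d(attention_map(g)))  # (1, 2)
```

Each sweep carries information about a response along one direction, so a position's context reflects what lies to its left, right, above, and below. A purely local window cannot see that far.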

Related content

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): view or manipulate content inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem