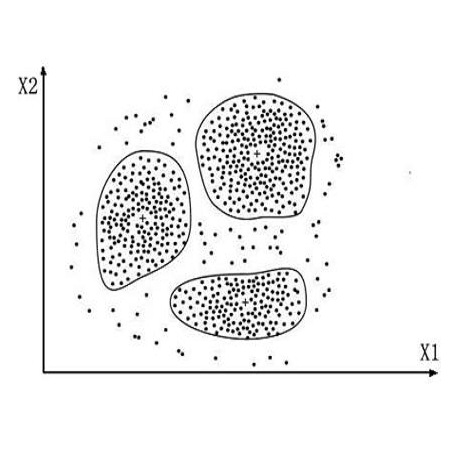

Effective representation learning is critical for short text clustering because short text corpora are sparse, high-dimensional, and noisy. Existing pre-trained models (e.g., Word2vec and BERT) have greatly improved the expressiveness of short text representations, providing more condensed, low-dimensional, and continuous features than the traditional Bag-of-Words (BoW) model. However, these models are trained for general purposes and are thus suboptimal for the short text clustering task. In this paper, we propose two methods that exploit the unsupervised autoencoder (AE) framework to further tune the short text representations produced by these pre-trained text models for optimal clustering performance. In our first method, Structural Text Network Graph Autoencoder (STN-GAE), we exploit the structural text information in the corpus by constructing a text network, and then adopt a graph convolutional network as the encoder to fuse the structural features with the pre-trained text features for text representation learning. In our second method, Soft Cluster Assignment Autoencoder (SCA-AE), we impose an extra soft cluster assignment constraint on the latent space of the autoencoder to encourage the learned text representations to be more clustering-friendly. We tested the two methods on seven popular short text datasets. The experimental results show that, when only a pre-trained model is used for short text clustering, BERT performs better than BoW and Word2vec. However, once the pre-trained representations are further tuned, the proposed methods, such as SCA-AE, can greatly increase clustering performance, and the accuracy improvement over using BERT alone can reach as much as 14%.
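To make the idea of a soft cluster assignment constraint on the latent space more concrete, the following is a minimal sketch in plain Python. The abstract does not state the exact form of the constraint, so this sketch assumes the Student's t kernel and the sharpened target distribution commonly used in deep embedded clustering; the function names (`soft_assign`, `target_distribution`, `kl_divergence`), the toy embeddings, and the centroids are all hypothetical and are not taken from the paper.

```python
import math

def soft_assign(z, centroids, alpha=1.0):
    """Soft assignment q[i][j] of latent vector i to centroid j.

    Assumption: a Student's t kernel, as in deep embedded clustering.
    """
    q = []
    for zi in z:
        row = []
        for mu in centroids:
            d2 = sum((a - b) ** 2 for a, b in zip(zi, mu))
            row.append((1.0 + d2 / alpha) ** (-(alpha + 1.0) / 2.0))
        total = sum(row)
        q.append([v / total for v in row])  # each row sums to 1
    return q

def target_distribution(q):
    """Sharpened target p: square q, divide by soft cluster size, renormalise."""
    k = len(q[0])
    freq = [sum(row[j] for row in q) for j in range(k)]
    p = []
    for row in q:
        w = [row[j] ** 2 / freq[j] for j in range(k)]
        total = sum(w)
        p.append([v / total for v in w])
    return p

def kl_divergence(p, q):
    """KL(P || Q) summed over all points and clusters (the clustering loss)."""
    return sum(
        pij * math.log(pij / qij)
        for p_row, q_row in zip(p, q)
        for pij, qij in zip(p_row, q_row)
        if pij > 0.0
    )

# Toy 2-D latent vectors standing in for autoencoder outputs (hypothetical data).
z = [[0.0, 0.1], [0.2, 0.0], [3.0, 3.1], [2.9, 3.0]]
centroids = [[0.0, 0.0], [3.0, 3.0]]

q = soft_assign(z, centroids)
p = target_distribution(q)
loss = kl_divergence(p, q)
print("soft assignments:", q)
print("clustering loss:", loss)
```

In a full model, a loss of this kind would typically be added to the autoencoder's reconstruction loss and minimised by gradient descent over both the encoder weights and the centroids. The sketch only evaluates the loss for fixed inputs.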
