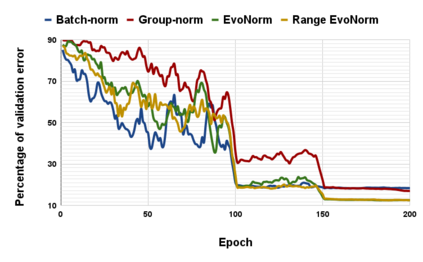

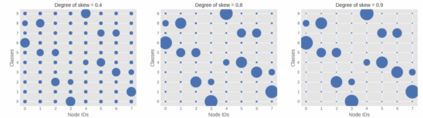

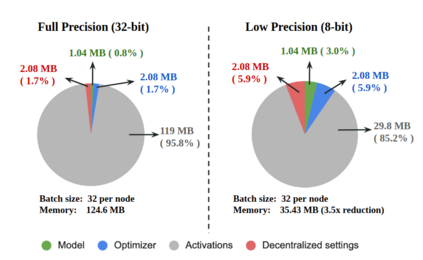

Decentralized distributed learning is key to enabling large-scale machine learning (training) on edge devices using private, user-generated local data, without relying on the cloud. However, the practical realization of such on-device training is limited by communication and compute bottlenecks. In this paper, we propose low-precision decentralized training, which aims to reduce both the computational complexity and the communication cost of decentralized training, and we show its convergence. Many feedback-based compression techniques have been proposed in the literature to reduce communication costs. However, to the best of our knowledge, no prior work has applied compute-efficient training techniques such as quantization and pruning to peer-to-peer decentralized learning setups. Since real-world applications exhibit significant skew in the data distribution, we design "Range-EvoNorm" as the normalization-activation layer, which is better suited to low-precision training over non-IID data. Moreover, we show that the proposed low-precision training can be used in synergy with other communication compression methods to decrease the communication cost further. Our experiments indicate that 8-bit decentralized training incurs minimal accuracy loss compared to its full-precision counterpart, even with non-IID data. However, when low-precision training is combined with communication compression through sparsification, we observe a 1-2% drop in accuracy. The proposed low-precision decentralized training decreases computational complexity, memory usage, and communication cost by 4x and compute energy by ~20x, while trading off less than 1% accuracy for both IID and non-IID data. In particular, with higher skew values, we observe an increase in accuracy (by ~0.5%) with low-precision training, indicating a regularization effect of quantization.
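
The abstract does not specify the quantizer or sparsifier used. The following is a minimal Python sketch of how 8-bit quantization and sparsification can compose on a model update that a node sends to its neighbors. It assumes a generic symmetric per-tensor int8 quantizer and top-k sparsification with 32-bit indices. These are standard techniques chosen for illustration, not the paper's method, and all function names are hypothetical. The size arithmetic shows why replacing 32-bit values with 8-bit ones gives a 4x smaller dense payload.

```python
import random
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor uniform quantization to signed 8-bit integers.

    Assumption: one scale per tensor, chosen so that max |v| maps to 127.
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to nearest, then clamp into the symmetric int8 range [-127, 127].
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to floats; error per entry is at most scale / 2."""
    return [x * scale for x in q]


def top_k_sparsify(values: List[float], k: int) -> List[Tuple[int, float]]:
    """Keep the k largest-magnitude entries as (index, value) pairs, sorted by index."""
    order = sorted(range(len(values)), key=lambda i: abs(values[i]), reverse=True)
    return sorted((i, values[i]) for i in order[:k])


def payload_bytes(num_values: int, value_bits: int, index_bits: int = 0) -> float:
    """Bytes needed to send num_values entries (plus optional per-entry indices)."""
    return num_values * (value_bits + index_bits) / 8


if __name__ == "__main__":
    random.seed(0)
    update = [random.gauss(0.0, 1.0) for _ in range(1000)]  # hypothetical model update

    # Dense 8-bit update: quantize, then check the reconstruction error bound.
    q, scale = quantize_int8(update)
    recon = dequantize_int8(q, scale)
    max_err = max(abs(a - b) for a, b in zip(update, recon))
    print(f"max error {max_err:.4f} <= bound {scale / 2:.4f}")

    fp32 = payload_bytes(len(update), 32)
    int8 = payload_bytes(len(update), 8)
    print(f"dense fp32: {fp32:.0f} B, dense int8: {int8:.0f} B, ratio {fp32 / int8:.0f}x")

    # Sparsify first, then quantize only the surviving values (32-bit indices assumed).
    sparse = top_k_sparsify(update, k=100)
    q_sparse, s_sparse = quantize_int8([v for _, v in sparse])
    sparse_int8 = payload_bytes(len(q_sparse), 8, index_bits=32)
    print(f"top-100 int8 + indices: {sparse_int8:.0f} B")
```

Because quantization and sparsification act on different aspects of the message (bits per value versus number of values), they can be stacked. The extra error this introduces is one plausible reason the combined setting loses more accuracy than quantization alone.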

翻译:分散分布式学习是利用私人用户生成的本地数据,在不依赖云的情况下,利用私人用户生成的本地数据,在边缘设备上进行大规模机器学习(培训)的关键。然而,由于通信和计算瓶颈,这种在线培训的实际实现受到限制。在本文件中,我们提出并展示了低精度分散化培训的趋同性,目的是降低分散培训的计算复杂性和通信成本。文献中提出了许多基于反馈的压缩技术,以降低通信成本。根据我们的最佳知识,没有应用高效培训技术,并展示了高效率的培训技术,如对同行和同行的精度的量化、修剪剪剪裁等。由于现实世界应用在数据分配方面有很大的偏差,因此我们设计了“Range-EvoNorm”作为正常化启动层,更适合低精度培训,而不是非II数据。此外,我们显示,低精度的精度培训可以用于与其他通信压缩方法的合力20 进一步降低通信成本。我们的实验表明,8位分散化培训在精确度方面损失了最低精度的精度,而精度II的精确性培训则通过不精确性数据进行。