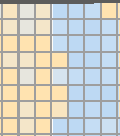

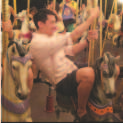

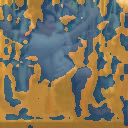

We propose InfoSeg, a novel method for unsupervised semantic image segmentation based on mutual information maximization between local and global high-level image features. The core idea of our work is to leverage recent progress in self-supervised image representation learning. Representation learning methods compute a single high-level feature capturing an entire image. In contrast, we compute multiple high-level features, each capturing the image segments of one particular semantic class. To this end, we propose a novel two-step learning procedure comprising a segmentation step and a mutual information maximization step. In the first step, we segment images based on local and global features. In the second step, we maximize the mutual information between local features and the high-level features of their respective class. For training, we provide solely unlabeled images and start from random network initialization. For quantitative and qualitative evaluation, we use established benchmarks as well as COCO-Persons, which we introduce in this paper as a challenging novel benchmark. InfoSeg significantly outperforms the current state of the art; e.g., we achieve a relative increase of 26% in the Pixel Accuracy metric on the COCO-Stuff dataset.
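To make the two-step procedure concrete, the following is a minimal sketch and not the implementation from the paper. The abstract does not specify the network, how the per-class high-level features are computed, or which mutual information estimator is used, so everything here is an assumption: the function names `segment` and `contrastive_mi_loss` are hypothetical, the features are random arrays standing in for network outputs, pixels are assigned to classes by dot-product similarity, and a contrastive (InfoNCE-style) objective stands in for the mutual information term, with the other classes' features acting as negatives.

```python
import numpy as np


def log_softmax(scores):
    """Numerically stable log-softmax over the last axis."""
    shifted = scores - scores.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


def segment(local_feats, class_feats):
    """Step 1 (assumed form): score every pixel against every class feature.

    local_feats: (H, W, D) local features, one per pixel location.
    class_feats: (K, D) high-level features, one per semantic class.
    Returns soft assignments (H, W, K) and hard labels (H, W).
    """
    scores = local_feats @ class_feats.T  # (H, W, K) dot-product similarity
    return np.exp(log_softmax(scores)), scores.argmax(axis=-1)


def contrastive_mi_loss(local_feats, labels, class_feats):
    """Step 2 (assumed form): InfoNCE-style loss.

    Each local feature should score highest against the high-level feature
    of its assigned class; minimizing this loss raises a lower bound on the
    mutual information between the two.
    """
    flat = local_feats.reshape(-1, local_feats.shape[-1])  # (N, D)
    log_probs = log_softmax(flat @ class_feats.T)          # (N, K)
    picked = log_probs[np.arange(flat.shape[0]), labels.reshape(-1)]
    return float(-picked.mean())


# Toy inputs: a 4x6 feature map with 8 channels and 3 semantic classes.
rng = np.random.default_rng(0)
local_feats = rng.normal(size=(4, 6, 8))
class_feats = rng.normal(size=(3, 8))

probs, labels = segment(local_feats, class_feats)
loss = contrastive_mi_loss(local_feats, labels, class_feats)
print(labels.shape, loss)
```

In an actual training loop, both feature sets would come from a randomly initialized network, and the two steps would alternate: the segmentation from step 1 determines which class feature each local feature is paired with in step 2, and the gradient of the loss updates the network.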
