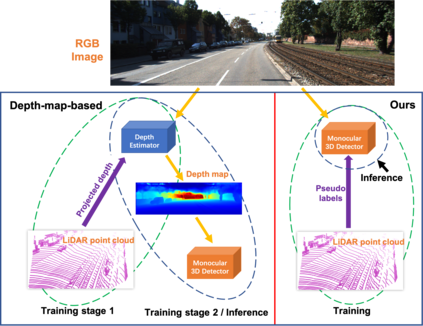

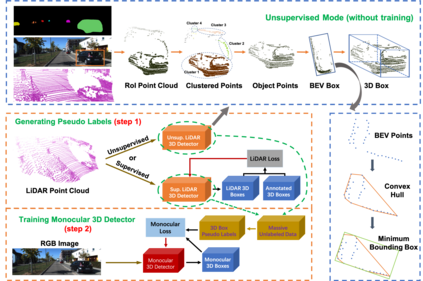

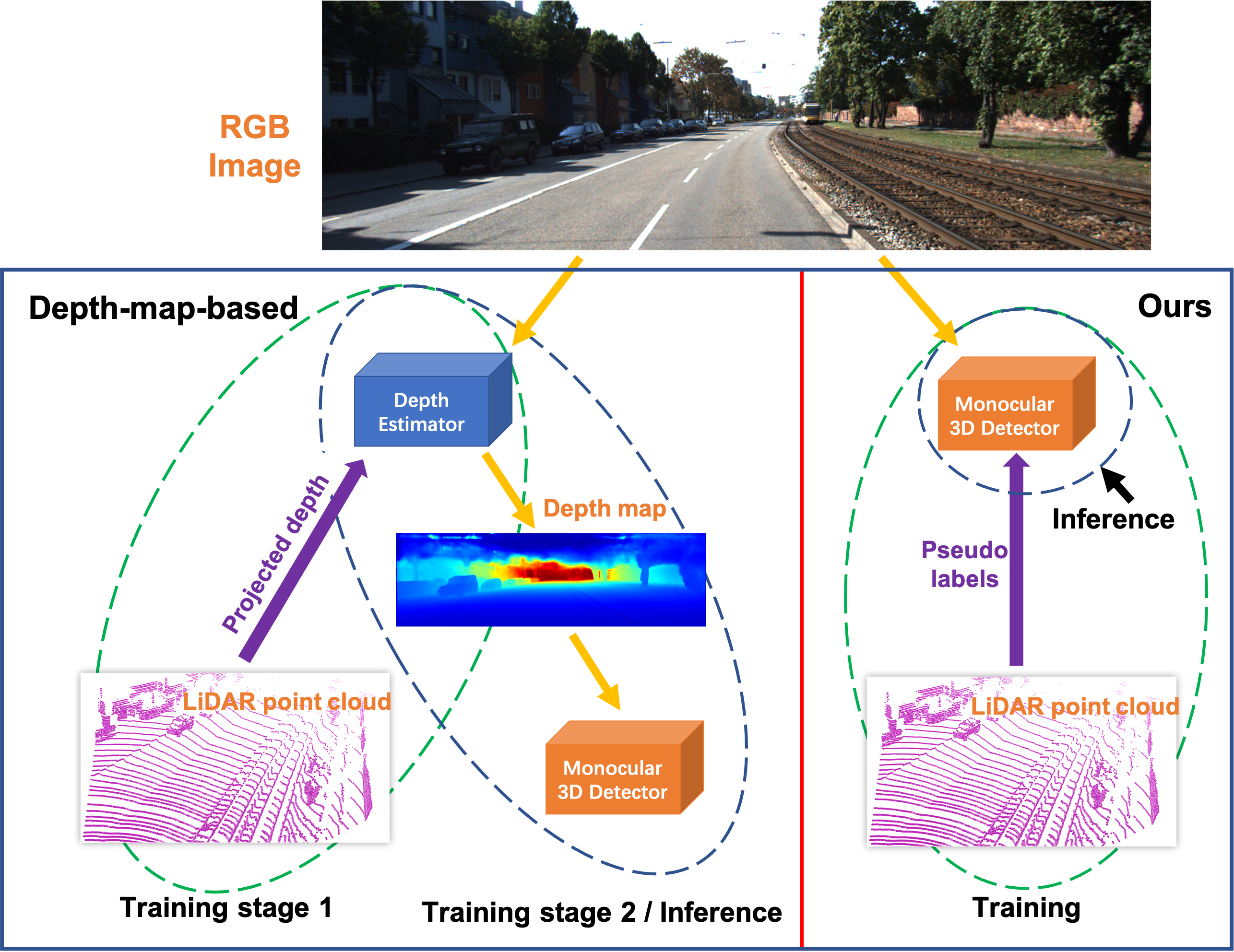

Monocular 3D detection currently lags far behind LiDAR-based methods in detection accuracy. The poor accuracy stems mainly from the lack of precise location cues, owing to the ill-posed nature of recovering 3D geometry from a single image. LiDAR point clouds, which provide precise spatial measurements, can supply useful information for training monocular methods. To exploit them, prior works project LiDAR point clouds onto the image plane to form depth map labels and then train a dense depth estimator to extract explicit location features. This indirect and complicated approach introduces intermediate products, i.e., depth map predictions, which incur substantial computational cost and lead to suboptimal performance. In this paper, we propose LPCG (LiDAR point cloud guided monocular 3D object detection), a general framework for guiding the training of monocular 3D detectors with LiDAR point clouds. Specifically, we use LiDAR point clouds to generate pseudo labels, allowing monocular 3D detectors to benefit from massive amounts of easily collected unlabeled data. LPCG works well under both supervised and unsupervised setups. Thanks to its general design, LPCG can be plugged into any monocular 3D detector and significantly boosts its performance. As a result, we rank first on the KITTI monocular 3D and BEV (bird's-eye-view) detection benchmarks by a considerable margin. The code will be made publicly available soon.
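To make the "project LiDAR points to form depth map labels" step used by prior works concrete, here is a minimal sketch of the generic pinhole projection. It assumes the points have already been transformed into the (rectified) camera frame with z pointing forward, and that `K` is a 3x3 intrinsic matrix; the function name `project_to_depth_map` is hypothetical and this is not LPCG's pseudo-labeling method. When several points land on one pixel, the closest depth is kept.

```python
from typing import List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]


def project_to_depth_map(
    points_cam: Sequence[Point3],
    K: Sequence[Sequence[float]],
    width: int,
    height: int,
) -> List[List[Optional[float]]]:
    """Project camera-frame 3D points onto the image plane (hypothetical helper).

    Assumes points are already in the camera frame (z forward) and K is a
    3x3 pinhole intrinsic matrix. Returns a height x width grid where each
    pixel holds the nearest projected depth, or None if no point hit it.
    """
    fx, fy = K[0][0], K[1][1]
    cx, cy = K[0][2], K[1][2]
    depth: List[List[Optional[float]]] = [[None] * width for _ in range(height)]
    for x, y, z in points_cam:
        if z <= 0:  # point is behind the camera, so it cannot be imaged
            continue
        # Pinhole projection: u = fx * x / z + cx, v = fy * y / z + cy
        u = round(fx * x / z + cx)
        v = round(fy * y / z + cy)
        if 0 <= u < width and 0 <= v < height:
            current = depth[v][u]
            if current is None or z < current:  # keep the closest surface
                depth[v][u] = z
    return depth


K = [[100.0, 0.0, 2.0], [0.0, 100.0, 2.0], [0.0, 0.0, 1.0]]
points = [(0.0, 0.0, 10.0), (0.0, 0.0, 5.0), (1.0, 0.0, 10.0), (0.1, 0.1, 10.0)]
depth_map = project_to_depth_map(points, K, width=5, height=5)
```

Most pixels stay `None`, which illustrates why LiDAR-derived depth labels are sparse, and why training a dense depth estimator on them is the extra, indirect step the abstract argues against.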
