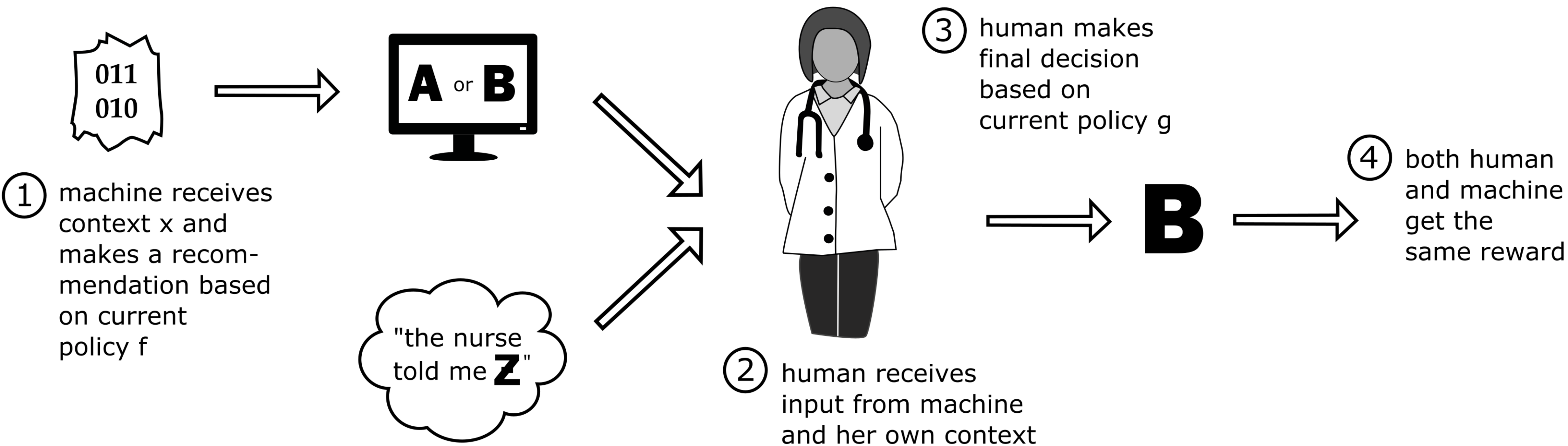

Applications of machine learning inform human decision makers in a broad range of tasks. The resulting problem is usually formulated in terms of a single decision maker. We argue that it should instead be described as a two-player learning problem in which one player is the machine and the other is the human. While both players try to optimize the final decision, the setup is often characterized by (1) the presence of private information and (2) opacity, that is, imperfect understanding between the decision makers. We prove that both properties can complicate decision making considerably. A lower bound quantifies the worst-case hardness of optimally advising a decision maker who is opaque or has access to private information. An upper bound shows that a simple coordination strategy is nearly minimax optimal. More efficient learning is possible under certain assumptions on the problem, for example, that both players learn to take actions independently. Such assumptions are implicit in the existing literature, for example in medical applications of machine learning, but have not been described or justified theoretically.
